Three Barriers to Achieving Zero Blind Spots with Automotive Analytics

At our automotive executive summit, leaders rated their efforts to implement effective analysis solutions as only 4.3/10. Learn why.

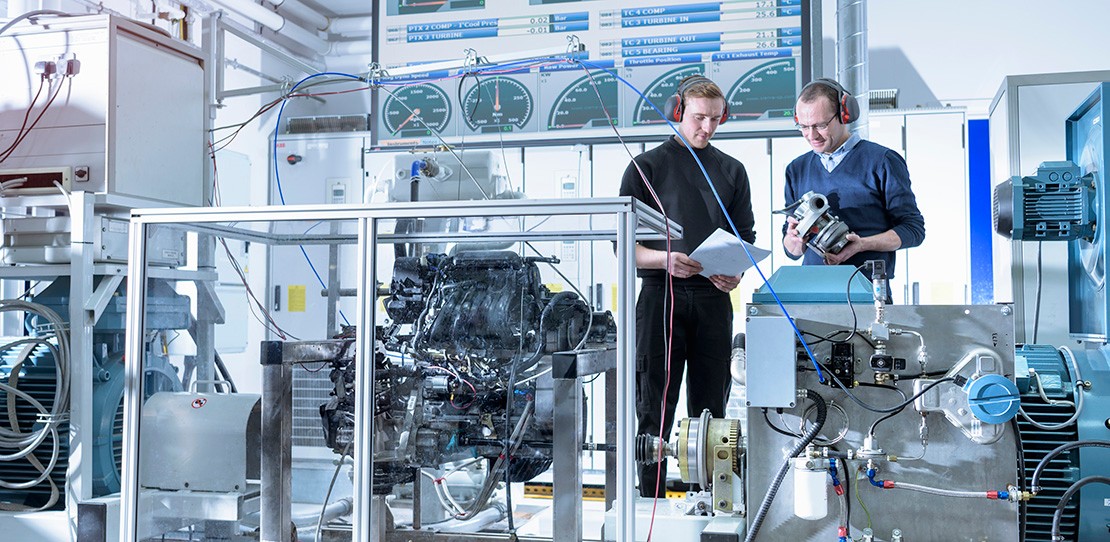

Automotive manufacturing demands continuous innovation and flawless delivery. Each car is made of 30,000 parts, powered by 40 million lines of code originating from more than 20 countries, and constructed by thousands of different machines. Only by having zero blind spots can you protect your brand.

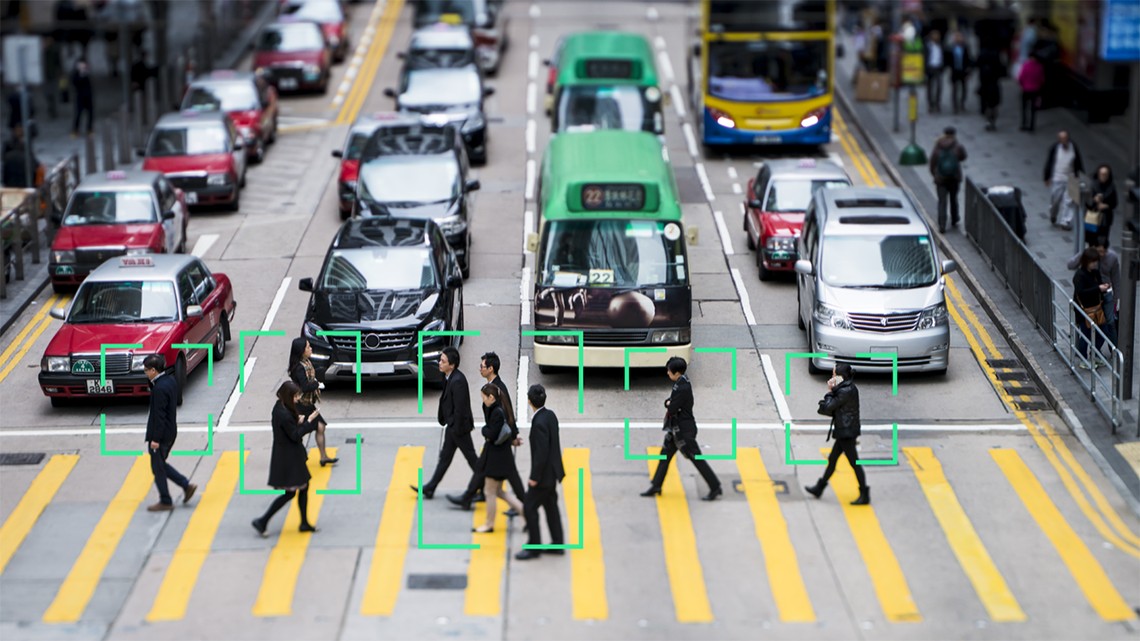

You likely understand the importance of leveraging data to gain an advantage and are adopting technologies that rely on innovations such as AI, 5G networks, and cloud computing. This is hard work, and you and your team are probably doing a great job analyzing the data within your own scope of responsibility. But perhaps you have difficulty connecting the dots and sharing data across OEMs, internal departments, and manufacturing lines. How do we know? At NI, we’ve found that nearly 90 percent of all test data goes unanalyzed, so the data is not used to its full potential, which creates blind spots.

Despite impressive technologies and the intent to improve processes, vehicles are still failing, and blind spots lead to expensive recalls. A recent example is Hyundai’s recall of 82,000 electric vehicles following reports of battery malfunctions. While the number of recalled units is considered small, the cost per recalled vehicle, at $11,000, is among the highest in history.

To achieve Vision Zero and eliminate blind spots in your data analytics, here are three barriers to consider.

1. Siloed Data

One of the first challenges an organization must solve is how to ingest all of its data sources into a centralized location. And believe us, when it comes to how data is stored, we’ve seen it all, including:

- Data stored on deployed test stations

- Data stored on external memory devices (such as a USB stick from data collection at a remote, unconnected testing facility)

- Data stored locally on an engineer’s computer

- Data stored in an on-premises database

- Data stored in the cloud

When data is scattered across multiple locations, analytics tends to surface only lagging indicators, which leads to reactive responses and limits visibility into how the test assets actually performed.
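As a rough illustration of what centralizing can involve, the sketch below merges test records found in several of the locations listed above into one de-duplicated list. It is a minimal example under stated assumptions, not a description of any NI product: the `Record` fields, the `consolidate` function, and the sample data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One test result; all field names here are hypothetical."""
    serial_number: str
    test_name: str
    timestamp: str  # ISO 8601 string, assumed to share one time zone
    passed: bool
    source: str     # where the record was found


def consolidate(*sources):
    """Merge records from several locations into one list.

    A record is treated as a duplicate when serial number, test name, and
    timestamp all match; the first copy seen is kept.
    """
    merged = {}
    for records in sources:
        for rec in records:
            key = (rec.serial_number, rec.test_name, rec.timestamp)
            merged.setdefault(key, rec)
    return sorted(merged.values(), key=lambda r: (r.timestamp, r.serial_number))


station = [Record("SN001", "burn_in", "2021-03-01T08:00:00", True, "test_station")]
usb = [Record("SN002", "burn_in", "2021-03-01T09:30:00", False, "usb_stick")]
laptop = [
    # Same result as the station copy, saved again on an engineer's computer.
    Record("SN001", "burn_in", "2021-03-01T08:00:00", True, "engineer_laptop"),
    Record("SN003", "burn_in", "2021-03-02T10:15:00", True, "engineer_laptop"),
]

central = consolidate(station, usb, laptop)
for rec in central:
    print(rec.serial_number, rec.passed, rec.source)
```

Keeping the `source` field on every record preserves where each result came from, which can help when tracing a result back to a specific station or facility.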

2. Creating Actionable Insights

It’s no secret that the amount of data being collected is growing rapidly, estimated to double every two years. But as they say, with large amounts of data comes the large responsibility to extract value from it. Acquiring the data is the easy part; the point is to do something with it. You want to identify the right data for the decisions you’re trying to make and put it to work efficiently.

We’ve also often seen that the data collected, analyzed, and stored is largely metadata or the summary output of an analysis routine that reports an overall “pass or fail” for the test. The data that actually shows the failure, referred to as parametric (or waveform) data, is often stored in a different location and can be hard to trace once a failed test is identified.

If you cannot connect these data sources, you’ll get stuck on the “what” (what is going wrong) and never reach the more impactful “why” (why the failure occurred). To start creating actionable insights and getting to the “why,” here are a few questions to consider about your current process.
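To make the “what” versus “why” distinction concrete, here is a minimal sketch that links pass/fail summaries to the parametric measurements behind them through a shared key. Everything in it is an assumption for illustration: the field names, the `explain_failures` helper, the measurement names, and the limits are hypothetical, and real systems may key their data differently.

```python
from collections import defaultdict


def explain_failures(summary, measurements, limits):
    """For each failed test, list the measurements that fell outside limits.

    summary:      rows with serial_number, test, result ("pass" or "fail")
    measurements: rows with serial_number, test, name, value
    limits:       measurement name -> (low, high), inclusive
    """
    # Index the parametric data by the key it shares with the summary.
    by_unit = defaultdict(list)
    for m in measurements:
        by_unit[(m["serial_number"], m["test"])].append(m)

    report = {}
    for row in summary:
        if row["result"] != "fail":
            continue
        key = (row["serial_number"], row["test"])
        out_of_limits = []
        for m in by_unit.get(key, []):
            low, high = limits[m["name"]]
            if not (low <= m["value"] <= high):
                out_of_limits.append((m["name"], m["value"]))
        report[key] = out_of_limits
    return report


summary = [
    {"serial_number": "SN001", "test": "burn_in", "result": "pass"},
    {"serial_number": "SN002", "test": "burn_in", "result": "fail"},
]
measurements = [
    {"serial_number": "SN001", "test": "burn_in", "name": "supply_voltage_v", "value": 5.0},
    {"serial_number": "SN002", "test": "burn_in", "name": "supply_voltage_v", "value": 4.4},
    {"serial_number": "SN002", "test": "burn_in", "name": "temperature_c", "value": 61.0},
]
limits = {"supply_voltage_v": (4.75, 5.25), "temperature_c": (0.0, 85.0)}

report = explain_failures(summary, measurements, limits)
print(report)
```

An empty list for a failed unit would itself be informative: it means the parametric data for that failure is missing or stored somewhere the join cannot reach.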

- Does the data you collect lead to actionable insights on a weekly basis?

- When was the last time you updated an analytics routine?

- How are insights shared with the appropriate team, and will they have access to the data to review? What if the issue in question is for a part supplied by another company?

- Is it costing you money and efficiency to do nothing with your data? Instead of hoping an engineer will one day look at the data, what processes can change how you transform your unused data into a competitive advantage?

In our experience, there is untapped potential in every single test and manufacturing department just waiting to be discovered.

3. Product Life-Cycle Analytics

Given that a car is composed of 30,000 parts, are you harnessing the full power of your test data across the entire product life cycle? Are you able not only to find defects quickly but also to discover them before they become a problem? A fully connected data system lets you do both with remarkable speed and precision.

What does this look like in action? A Tier 1 automotive company was assembling a camera for the first time as part of the NPI process and noticed more failures than average. When looking at the data, the engineers were not sure whether the failures stemmed from their process of combining the lens and circuit board into an assembly. They wondered whether something was causing the alignment to be off when gluing and curing the pieces together or whether there were faulty slots in their burn-in testing equipment. By leveraging product life-cycle analytics, they were able to analyze each testing slot to determine whether all slots were failing at approximately the same rate or whether certain slots had more failures of a particular type than average. Ultimately, the analysis showed the issue was not with the assembly process but with faulty equipment. After eliminating the use of those slots, they were able to reach their entitlement yield in less than three months, compared with the 18 months it had been taking.
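The per-slot comparison in this example can be sketched in a few lines. This is an illustrative simplification and not the analysis the company actually ran: the slot names, the sample results, and the rule of flagging any slot whose failure rate exceeds twice the overall rate are all assumptions.

```python
from collections import Counter


def slot_failure_rates(results):
    """Return the failure rate per slot from (slot, passed) pairs."""
    totals, failures = Counter(), Counter()
    for slot, passed in results:
        totals[slot] += 1
        if not passed:
            failures[slot] += 1
    return {slot: failures[slot] / totals[slot] for slot in totals}


def flag_slots(results, factor=2.0):
    """Flag slots whose failure rate exceeds `factor` times the overall rate.

    The factor is a hypothetical threshold; a production analysis would
    more likely use a statistical test that accounts for sample size.
    """
    if not results:
        return []
    rates = slot_failure_rates(results)
    overall = sum(1 for _, passed in results if not passed) / len(results)
    return sorted(slot for slot, rate in rates.items() if rate > factor * overall)


# Hypothetical burn-in results: 10 units per slot.
results = (
    [("A", i != 0) for i in range(10)]    # 1 failure
    + [("B", i != 0) for i in range(10)]  # 1 failure
    + [("C", i >= 6) for i in range(10)]  # 6 failures
)

print(slot_failure_rates(results))
print(flag_slots(results))
```

If every slot failed at roughly the same rate, nothing would be flagged, which would point back toward the assembly process rather than the test equipment.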

We know you relentlessly pursue performance, quality, reliability, and operational efficiency to bring the best products to market first. That’s why NI’s breakthrough end-to-end product life-cycle analytics solutions connect your engineering and product data to ensure you have zero blind spots. Let’s accelerate the path to Vision Zero together.