Imminent Trade-Offs for Achieving Safe Autonomous Driving

EXPERT OPINION


Autonomous vehicles will challenge automotive engineers to balance three critical trade-offs: cost, technology, and strategy.

AUTHOR: Jeff Phillips, NI Head of Automotive Marketing

- Autonomous driving will strain the budget for the sensor redundancy needed to ensure overall safety.

- A software-defined test platform will be critical to keep pace with the evolution of processor architectures.

- The semiconductor and automotive industries are converging as requirements for autonomous driving are impacting microprocessor architectures.

According to the World Health Organization, every year more than 1.25 million lives are cut short because of traffic crashes, and these crashes cost governments approximately 3 percent of GDP. Although the impact of autonomous driving could extend into personal, economic, and political domains, the potential to save lives alone could make it the most revolutionary invention of our time.

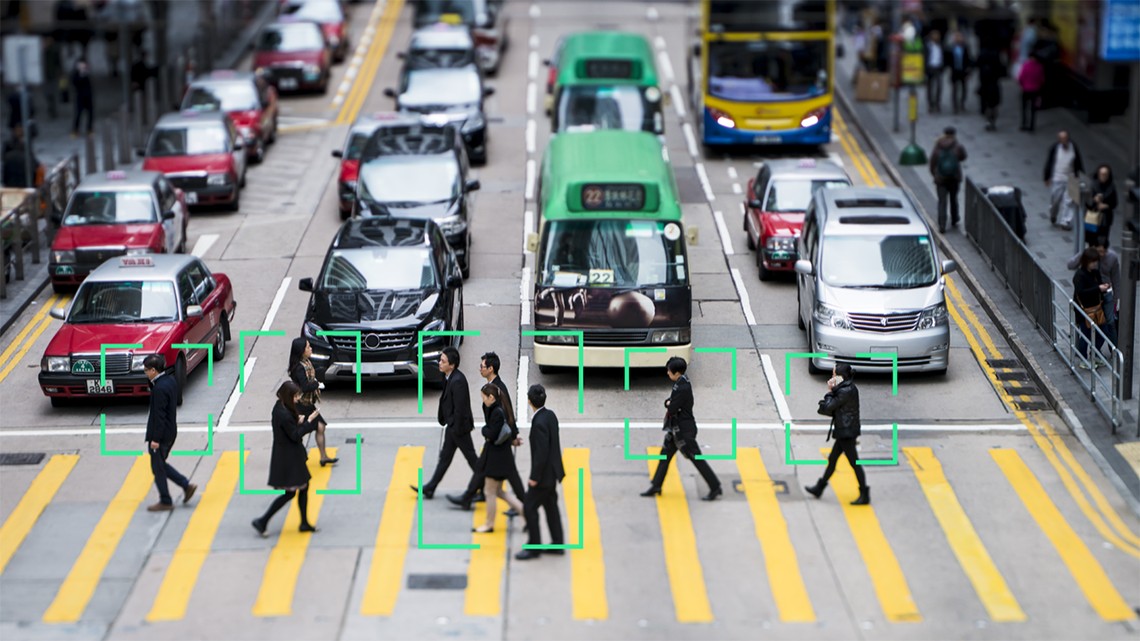

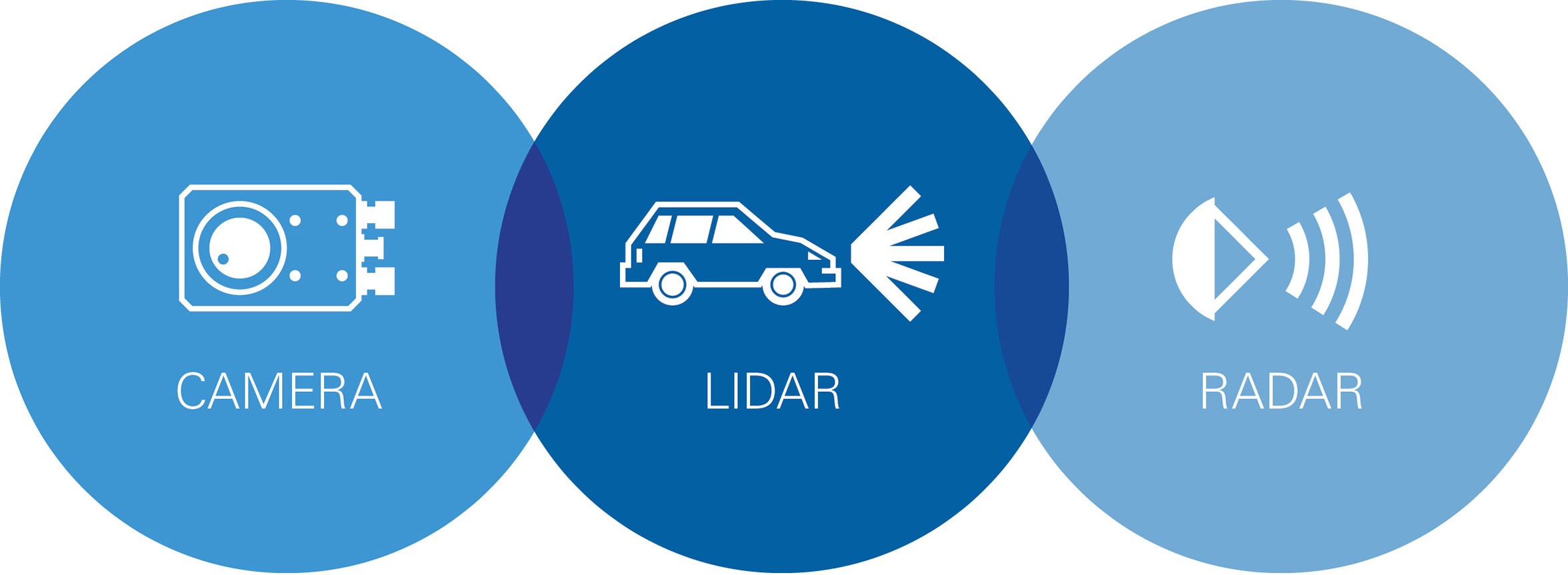

Advanced Driver Assistance Systems (ADAS) combine sensors, processors, and software to improve safety and, ultimately, to deliver self-driving capability. Most of these systems today rely on a single sensor, such as a radar or a camera, and they have already had a measurable impact. According to a 2016 study by the Insurance Institute for Highway Safety (IIHS), automatic braking systems reduced rear-end collisions by approximately 40 percent, and collision warning systems cut them by 23 percent. Still, the National Highway Traffic Safety Administration (NHTSA) reports that 94 percent of serious car crashes are caused by human error.

To move from driver assistance to Level 4 or 5 autonomy and take the driver out from behind the wheel, the auto industry faces far more complicated challenges. For instance, it needs sensor fusion, the combining of measurement data from many sensors to inform the vehicle's decisions, and sensor fusion in turn demands tight synchronization, high-power processing, and the continued evolution of the sensors themselves. For automotive manufacturers, this means finding the right balance across three critical trade-offs: cost, technology, and strategy.

Cost: Redundant Versus Complementary Sensors

Under the SAE J3016 definition of Level 3 autonomy, the driver does not need to pay active attention as long as the car operates within predefined conditions. The 2019 Audi A8 will be the world's first production car to offer Level 3 autonomy. It is equipped with six cameras, five radar sensors, one lidar sensor, and 12 ultrasonic sensors. Why so many? Simply put, each sensor type has unique strengths and weaknesses. For example, radar shows how fast an object is moving but not what the object is, whereas a camera can identify the object. Sensor fusion is needed here because both pieces of information are critical to anticipating the object's behavior, and redundancy is necessary to overcome each sensor's weaknesses.
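As a rough illustration of the radar-and-camera example, the Python sketch below pairs each radar return with the camera detection at the nearest bearing, so each fused object carries the camera's label together with the radar's range and speed. Everything here is hypothetical: the class names, the 2-degree association gate, and the greedy matching are simplifying assumptions, not a description of how the Audi A8 or any production system fuses its data.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

# Hypothetical data types for illustration only; not from any production stack.
@dataclass
class RadarDetection:
    bearing_deg: float        # azimuth relative to the vehicle's heading
    range_m: float            # distance to the object
    closing_speed_mps: float  # measured directly by radar; positive = approaching

@dataclass
class CameraDetection:
    bearing_deg: float        # azimuth of the bounding-box center
    label: str                # object class, e.g. "car" or "pedestrian"

@dataclass
class FusedObject:
    label: str                # what the object is (from the camera)
    range_m: float            # where it is (from the radar)
    closing_speed_mps: float  # how fast it approaches (from the radar)

def fuse(radar: List[RadarDetection], camera: List[CameraDetection],
         max_gap_deg: float = 2.0) -> List[FusedObject]:
    """Greedy nearest-bearing association of radar returns with camera detections.

    The max_gap_deg gate is an assumed value. Unmatched radar returns are simply
    dropped here; a real system would track them over time (e.g. with a Kalman
    filter) and solve a global assignment problem instead of matching greedily.
    """
    fused: List[FusedObject] = []
    used: Set[int] = set()  # camera detections already claimed by a radar return
    for r in radar:
        best: Optional[int] = None
        best_gap = max_gap_deg
        for i, c in enumerate(camera):
            gap = abs(r.bearing_deg - c.bearing_deg)
            if i not in used and gap <= best_gap:
                best, best_gap = i, gap
        if best is not None:
            used.add(best)
            fused.append(FusedObject(camera[best].label, r.range_m, r.closing_speed_mps))
    return fused

radar = [RadarDetection(10.0, 35.0, 4.2), RadarDetection(-20.0, 12.0, -0.5)]
camera = [CameraDetection(9.5, "car"), CameraDetection(40.0, "pedestrian")]
print(fuse(radar, camera))
# → [FusedObject(label='car', range_m=35.0, closing_speed_mps=4.2)]
```

In this toy case the second radar return has no camera detection within the gate, so neither sensor alone yields a fully described object there; covering such gaps is one reason vehicles carry overlapping sensors.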

Ultimately, the goal of processing sensor data is to create a fail-safe representation of the car's surroundings that can be fed into decision-making algorithms, at a cost low enough for the final product to be profitable. One of the most significant challenges in accomplishing this is choosing the right software. Consider three examples: tightly synchronizing measurements, maintaining data traceability, and testing the software against a virtually unlimited number of real-world scenarios. Each of these is uniquely challenging; autonomous driving will require all three, but at what cost?
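To make the synchronization challenge concrete, here is a minimal Python sketch that pairs each camera frame with the nearest radar sample in time and refuses any match outside a tolerance. It assumes both sensors are already timestamped against a common clock (in practice that usually requires hardware time synchronization, for example IEEE 1588 PTP); the 5 ms tolerance, the sample rates, and the function name are illustrative assumptions.

```python
import bisect
from typing import List, Tuple

def align(camera_ts: List[float], radar_ts: List[float],
          tolerance_s: float = 0.005) -> List[Tuple[int, int]]:
    """Pair each camera frame with the nearest-in-time radar sample.

    Both lists are sorted timestamps in seconds on a shared clock (assumed).
    Frames with no radar sample within tolerance_s are skipped rather than
    being fused with stale data.
    """
    pairs: List[Tuple[int, int]] = []
    for ci, t in enumerate(camera_ts):
        j = bisect.bisect_left(radar_ts, t)
        # The closest sample is either the first one at or after t (index j)
        # or the last one before t (index j - 1).
        candidates = [k for k in (j - 1, j) if 0 <= k < len(radar_ts)]
        if not candidates:
            continue  # no radar data at all
        nearest = min(candidates, key=lambda k: abs(radar_ts[k] - t))
        if abs(radar_ts[nearest] - t) <= tolerance_s:
            pairs.append((ci, nearest))
    return pairs

camera_ts = [0.000, 0.033, 0.066, 0.100]  # roughly 30 fps camera (assumed)
radar_ts = [0.001, 0.051, 0.101]          # roughly 20 Hz radar (assumed)
print(align(camera_ts, radar_ts))         # → [(0, 0), (3, 2)]
```

Logging the returned index pairs alongside the raw samples is one simple way to keep each fused result traceable back to the exact measurements it came from.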

Technology: Distributed Versus Centralized Architectures

ADAS processing is currently spread across multiple isolated control units; however, sensor fusion is making a single centralized processor increasingly popular. Consider the Audi A8. In the 2019 model, Audi combined the required sensors, function portfolio, electronic hardware, and software architecture into a single central system. This central driver assistance controller computes a complete model of the vehicle's surroundings and activates all assistance systems. It has more processing power than all the systems in the previous-generation Audi A8 combined.

The primary concern with a centralized architecture is the cost of high-power processing, a cost compounded by the need for a secondary fusion controller elsewhere in the car as a safety-critical backup. Preferences will likely swing between distributed and centralized designs over time as controllers and their processing capabilities evolve, so a software-defined tester design will be critical to keeping pace with that evolution.
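A safety-critical backup controller implies some mechanism for deciding whose output to trust. One common pattern is a heartbeat watchdog; the Python sketch below is a hypothetical, simplified version of it. The class name, the 100 ms timeout, and the "minimal_risk_maneuver" fallback are assumptions for illustration, not details of any specific vehicle.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

@dataclass
class FusionControllerMonitor:
    """Chooses which fusion controller's environment model to use.

    Hypothetical sketch: timestamps are seconds from a monotonic clock, and a
    controller counts as alive if its last heartbeat is at most timeout_s old.
    """
    timeout_s: float = 0.1
    last_beat: Dict[str, Optional[float]] = field(
        default_factory=lambda: {"primary": None, "backup": None})

    def heartbeat(self, controller: str, now: float) -> None:
        self.last_beat[controller] = now

    def _alive(self, controller: str, now: float) -> bool:
        t = self.last_beat[controller]
        return t is not None and now - t <= self.timeout_s

    def active(self, now: float) -> str:
        # Prefer the primary, fall back to the backup, and if neither is alive
        # request a minimal-risk maneuver (e.g. a controlled stop).
        if self._alive("primary", now):
            return "primary"
        if self._alive("backup", now):
            return "backup"
        return "minimal_risk_maneuver"

monitor = FusionControllerMonitor()
monitor.heartbeat("primary", 0.00)
monitor.heartbeat("backup", 0.00)
print(monitor.active(0.05))   # → primary
monitor.heartbeat("backup", 0.15)
print(monitor.active(0.20))   # → backup (primary silent for 200 ms)
print(monitor.active(1.00))   # → minimal_risk_maneuver
```

The backup path only helps if the backup controller can compute its own environment model, which is why the redundancy adds processing hardware, and cost, to a centralized design.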

[Helmut Matschi, member of the Executive Board, Interior Division, at Continental] said it all comes back to software engineering... With widespread use of high-performance computers in vehicles early next decade, development projects might direct as much as 80 percent of their budgets to software, he predicts.

Strategy: In-House Versus Off-the-Shelf Technology

To achieve Level 5 autonomy, the microprocessor in an autonomous vehicle needs 2,000 times the processing capability of the microprocessors in today's controllers; as a result, it is quickly becoming more expensive than the RF components in mmWave radar sensor systems. History has shown that an increasingly expensive capability in high demand draws the attention of leaders in adjacent markets, which intensifies competition for market incumbents.

As one data point, UBS estimates that the Chevrolet Bolt's electric powertrain has 6X to 10X more semiconductor content than an equivalent internal combustion engine car. Semiconductor content will only continue to increase, and adjacent markets will supply invaluable off-the-shelf technology improvements. For instance, NVIDIA has adapted its Tegra platform, initially developed for consumer electronics, to target ADAS applications in automotive systems. Alternatively, DENSO has started designing and fabricating its own artificial intelligence microprocessor to reduce cost and energy consumption, and its subsidiary NSITEXE Inc. plans to release a next-generation dataflow processor IP, called DFP, in 2022. The race is on.

Optimizing the Trade-Offs

Decisions on these trade-offs will have a tremendous impact on time to market and on differentiating capabilities throughout the supply chain. The ability to reconfigure testers quickly will be critical to minimizing validation and production test costs and times, so flexibility through software will be key. In an interview excerpt published March 4, 2018, on bloomberg.com, Dr. James Kuffner, CEO of the Toyota Research Institute-Advanced Development, said, “We’re not just doubling down but quadrupling down in terms of the budget. We have nearly $4 billion USD to really have Toyota become a new mobility company that is world-class in software.” This sentiment is not uncommon in the automotive industry. There is no clear answer on these trade-offs yet, but just as past industrial revolutions made new technologies affordable through productivity gains, increasing efficiency in software development will be integral to the autonomous driving revolution.
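The value of reconfiguring testers through software can be illustrated with a small, hypothetical Python harness: test scenarios are plain data, and the device under test is a swappable function, so the same suite can run against a different controller without rebuilding the tester. The time-to-collision braking rule, the thresholds, and all names here are illustrative assumptions; a real tester would drive sensor emulators and read vehicle-bus traffic rather than call a function.

```python
from dataclasses import dataclass
from functools import partial
from typing import Callable, Dict, List

@dataclass(frozen=True)
class Scenario:
    name: str
    obstacle_range_m: float
    closing_speed_mps: float  # positive = obstacle approaching
    expect_brake: bool

# Hypothetical DUT interface: (range, closing speed) -> did it command braking?
DeviceUnderTest = Callable[[float, float], bool]

def run_suite(dut: DeviceUnderTest, scenarios: List[Scenario]) -> Dict[str, bool]:
    """Run every scenario against one DUT; returns scenario name -> passed."""
    return {s.name: dut(s.obstacle_range_m, s.closing_speed_mps) == s.expect_brake
            for s in scenarios}

def ttc_brake(range_m: float, closing_speed_mps: float,
              ttc_threshold_s: float = 2.0) -> bool:
    """Toy controller: brake when time to collision falls below a threshold."""
    if closing_speed_mps <= 0:
        return False  # obstacle is holding distance or pulling away
    return range_m / closing_speed_mps < ttc_threshold_s

suite = [Scenario("stopped_car_ahead", 10.0, 10.0, True),
         Scenario("car_pulling_away", 30.0, -2.0, False)]

# Reconfiguring the "tester" is a software change: swap in a different DUT.
print(run_suite(ttc_brake, suite))
# → {'stopped_car_ahead': True, 'car_pulling_away': True}
late_braker = partial(ttc_brake, ttc_threshold_s=0.5)
print(run_suite(late_braker, suite))
# → {'stopped_car_ahead': False, 'car_pulling_away': True}
```

Keeping scenarios as data and the device interface narrow is the design choice that lets the suite follow the hardware as architectures shift between distributed and centralized designs.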

Redundant Versus Complementary Sensor Considerations