Enabling AI Research for 5G Networks with NI SDR

Overview

Wireless communications are everywhere today. They connect people, things, and systems. Next-generation networks must address growing data bandwidth needs and the increase in connected devices. They also must serve new applications such as autonomous driving and remote surgery, connect huge numbers of smart devices with maximum battery life, and enable reliable coverage for users in both dense urban and sparse rural areas.

Research on 5th generation networks, or 5G, has been active for the past several years, and NI has played a significant role in enabling that research. In 2016, Forbes Magazine recognized the importance of NI’s impact by publishing an article titled “5G Won’t Happen Without NI.”

Today, artificial intelligence (AI) adds new technologies to 5G research that can help address several challenges. This white paper examines the current opportunities to accelerate the future of 5G communications networks with the help of AI. It is neither an exhaustive list nor a full description of the applications and technologies, but it explores the research topics that NI believes will improve the way we communicate in the near future.

Contents

- Enabling 5G PHY Research through Prototyping

- AI in Communications Systems Physical Layers

- AI for Telecom Carriers

- Conclusion

Enabling 5G PHY Research through Prototyping

NI is a key partner to 5G physical layer (PHY) researchers, providing integrated software defined radio (SDR) products with a fully open software stack. Telecom vendors, network operators, and leading university research groups are using NI SDR products to test their ideas and prototypes with real-world signals in real-world scenarios.

NI’s open SDR solution combines multiple features to accelerate building real-time testbeds and real-world experimental setups. The commercial off-the-shelf (COTS) hardware and software components make it easy to build a complicated setup, so researchers can focus on the innovations and not the testbed implementation.

Massive MIMO record-breaking testbeds, mmWave channel sounder field experiments, new waveforms and coexistence studies, and many other projects have incorporated NI SDR products.

Connecting math and signal processing to real-world signals

One key feature of NI’s solution is the ability to apply the complicated mathematics of innovative signal processing to real-world signals in real time. This includes not only the most realistic models for noise, distortions, and reflections but also real-time operation that complements, adjusts for, and helps engineers modify current systems.

Real-time, real-world operation with a focus on wireless innovation featuring COTS hardware and open and flexible software is what makes the difference in time-critical 5G research.

Because the same hardware platform has been used for years in field trials and deployed in wireless network systems such as OpenBTS and Amarisoft LTE, wireless researchers can build on this proven expertise for their 5G research.

Connecting network research to real-world signals

The 3GPP has frozen the first definition of the 5G physical layer in its Release 15, and it has been implemented on SDR hardware. Numerous simple to very complex testbeds for higher layers are being set up to test and demonstrate innovative solutions in areas like network slicing and orchestration applied to real-world use cases.

AI is key to current 5G research

5G innovations are tightly intertwined with all the major IT trends that add performance improvements to data processing. These performance and flexibility gains rely heavily on software at all the layers of the communications stack. AI with neural networks and machine learning is one of the key topics under exploration in the network innovators community. Innovators believe AI incorporated in the physical layer, MAC layer, or higher software can help them find solutions for many subtasks in the 5G network design. This includes Massive MIMO channel estimation, mmWave beamforming and scheduling, traffic distribution across different media, power amplifier linearization and power efficiency, network slicing and orchestration, big data analysis from different nodes, and many other tasks ranging from the physical layer to the core network to the application layer.

The same AI ideas and tools can be used to solve different problems across a variety of applications and industries. This emphasizes the importance of thinking outside a single issue and beyond established boundaries.

NI’s cross-industry SDR technology is also well suited to work with AI to help solve these problems.

AI in Communications Systems Physical Layers

The accelerating complexity of wireless design has resulted in difficult trade-offs and large development expenses. Some of the complexities of RF system design are inherent in radio, such as hardware impairments and channel effects. Another source of complexity is the breadth of the degrees of freedom used by radios. Communications and RF parameters, such as antennas, channels, bands, beams, codes, and bandwidths, represent degrees of freedom that must be controlled by the radio in either static or dynamic modalities. Recent advances in semiconductor technology have made wider bandwidths of spectrum accessible with fewer parts and less power, but these require more computation if real-time processing is important. Furthermore, as wireless protocols grow more complex, spectrum environments become more contested and the baseband processing required becomes more specialized.

Taken together, the impairments, degrees of freedom, real-time requirements, and hostile environment represent an optimization problem that quickly becomes intractable. Fully optimizing RF systems with this level of complexity has never been practical. Instead, designers have relied on simplified closed-form models that don’t accurately capture real-world effects. They have fallen back on optimizing individual components because full end-to-end optimization is limited.

In the last few years, the advances in AI have been significant, especially in a class of machine-learning techniques known as deep learning (DL). Where human designers have toiled to hand-engineer solutions to difficult problems, DL provides a methodology that enables solutions directly trained on large sets of complex problem-specific data.

Digital predistortion with AI

Modern communication systems incorporate complex modulation schemes that lead to a high peak-to-average power ratio (PAPR). This changes the requirements for power amplifiers used at the receiver side and especially the transmitter side because the power amplifier nonlinearities are a much bigger issue when dealing with high PAPR. The modulated signals grow distorted (compressed) because of amplifier saturation, and the quality of the communications system degrades. Technologies such as digital predistortion (DPD) and envelope tracking (ET) are being widely developed to overcome the issue. The idea itself is very simple: distort the signal “in the opposite direction” at the amplifier’s input so it becomes close to ideal after compression. But this simple idea can be complicated by the dozens of DPD models and schemes that work for different amplifier designs, types of signals, and media.
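The predistortion idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the amplifier is a hypothetical memoryless model with cubic compression (`amplifier`, coefficient `k3`), the test signal is a sum of eight equal tones, and the predistorter simply inverts the known model by fixed-point iteration. Practical DPD schemes have to estimate the amplifier behavior from measurements and usually account for memory effects.

```python
import math

def papr_db(samples):
    """Peak-to-average power ratio of a real-valued sample sequence, in dB."""
    powers = [s * s for s in samples]
    return 10 * math.log10(max(powers) / (sum(powers) / len(powers)))

def amplifier(u, k3=0.15):
    """Hypothetical memoryless PA: unit gain with cubic compression."""
    return u - k3 * u ** 3

def predistort(x, k3=0.15, iterations=30):
    """Solve amplifier(u) = x for u by iterating u = x + k3 * u**3.

    The iteration converges for the input range used below (|x| <= 0.8).
    """
    u = x
    for _ in range(iterations):
        u = x + k3 * u ** 3
    return u

# Multi-tone test signal (assumed): eight equal-amplitude tones over one
# period, scaled so that the peak magnitude is 0.8.
N = 512
TONES = 8
raw = [sum(math.cos(2 * math.pi * k * t / N) for k in range(1, TONES + 1))
       for t in range(N)]
peak = max(abs(s) for s in raw)
signal = [0.8 * s / peak for s in raw]

# Worst-case deviation from the ideal (undistorted) output.
err_plain = max(abs(amplifier(x) - x) for x in signal)
err_dpd = max(abs(amplifier(predistort(x)) - x) for x in signal)

print(f"PAPR of test signal: {papr_db(signal):.2f} dB")
print(f"Worst-case error without predistortion: {err_plain:.4f}")
print(f"Worst-case error with predistortion:    {err_dpd:.2e}")
```

The predistorter expands the signal peaks before they reach the amplifier, so the compression brings them back to the intended values. The inversion here is exact only because the amplifier model is known in advance.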

AI algorithms help engineers choose the best way to apply the DPD when the model is not clearly defined or not available at all. One of the main advantages of artificial neural networks (ANNs) is their ability to extract, predict, and characterize nonlinearities from massive datasets (sometimes without knowing exactly how). Because ANNs can capture many kinds of relationships between a variety of time-varying inputs and outputs, such as complex nonlinear relationships or highly dynamic relationships, they are well suited to tackle nonlinearity problems at the PHY, including power amplifier nonlinearity tracking, predistortion, and impairment correction.

Applying AI to design end-to-end communications systems

The DL approach differs dramatically from traditional radio communications system optimization. It adds a new layer to the cognitive radio approach. Traditionally, the transmitter and receiver are divided into several processing blocks, and each block is responsible for a specific subtask, for example, source coding, channel coding, modulation, and equalization. Each component can be individually analyzed and optimized, which has led to today’s efficient and stable systems. DL enables end-to-end learning, that is, training a model that jointly optimizes both ends of an information flow and treats everything in between as a unified system.

For example, a model can jointly learn an encoder and a decoder for a radio transmitter and receiver to optimize over the end-to-end system. The “end-to-end system” might also be something less broad, like the synchronization and reception chain for a specific protocol. This is often the usage pattern when integrating DL into existing systems where there is less flexibility in the processing flow.

A key advantage of end-to-end learning is that instead of attempting to optimize a system in a piecemeal fashion by individually tuning each component and then stitching them together, DL treats the entire system as an end-to-end function and holistically learns optimal solutions over the combined system.

Researchers at Cornell University have proposed a new approach to communications system design by redeveloping an entire communications system based on neural network DL libraries to optimize unsynchronized off-the-shelf SDRs. This approach is efficient in ever-changing circumstances that don’t offer a solid channel model. The researchers use an approximate channel model to train the network and then fine-tune the model during real-world signal operation with SDR hardware.

Military RF with AI offers insight

Commercial communications isn’t the only area to combine RF signals and AI. 5G researchers also need visibility into similar research for areas like military communications, signals intelligence, and radar design to learn from it and build on it whenever possible. In electronic warfare, for example, spectrum sensing and signal classification were some of the first radio applications to benefit dramatically from DL. Whereas previous automatic modulation classification (AMC) and spectrum monitoring approaches required labor-intensive efforts to hand-engineer feature extraction and selection that often took teams of engineers months to design and deploy, a DL-based system can train for new signal types in hours. Furthermore, performing signal detection and classification using a trained deep neural network takes a few milliseconds. A company named DeepSig has commercialized DL-based RF sensing technology in its OmniSIG Sensor Software product. The automated feature learning provided by DL approaches enables the OmniSIG sensor to recognize new signal types after being trained on just a few seconds’ worth of signal capture.

Hardware architectures for AI in radio

Typically, two types of COTS cognitive radio (CR) systems enable AI radio systems for defense:

- Compact, deployed systems in the field using AI to determine actionable intelligence in real time that leverage the typical CR architecture of an FPGA and general-purpose processor (GPP), sometimes with the addition of a compact graphics processing unit (GPU) module

- Modular, scalable, more compute-intensive systems typically consisting of CRs coupled to high-end servers with powerful GPUs that conduct offline processing

For systems with low size, weight, and power (SWaP), coupling the hardware-processing efficiency and low-latency performance of the FPGA with the programmability of a GPP makes a lot of sense. Though the FPGA may be harder to program, it is the key enabler to achieving low SWaP in real-time systems.

Larger, compute-intensive systems need a hardware architecture that scales and heterogeneously leverages best-in-class processors. These architectures typically comprise FPGAs for baseband processing, GPPs for control, and GPUs for AI processing. GPUs offer a nice blend of programming ease and the ability to process massive amounts of data. Though the long data pipelines of GPUs lead to higher transfer times, this has historically not been an issue because most of these systems are not ultra-low-latency systems.

AI and the technologies it enables have made great leaps in recent years. AI is poised to radically change military RF systems, applications, and concepts of operations (CONOPSs). It is now possible to train a custom modem optimized for an operational environment and specialized hardware in a matter of hours, forward-deploy it to low-SWaP embedded systems, and then repeat the process. DL offers the ability to learn models directly from data, which provides a new methodology that, in some ways, commoditizes physical-layer and signal-processing design while optimizing at levels previously impossible.

Massive MIMO with AI

As base stations serve more users, new approaches like Massive MIMO and beamforming come into play. They offer gains in efficiency by spatially adjusting signal transmitting and receiving to serve each user individually. Beamforming is a signal processing technology that lets base stations send targeted beams of data to users, which reduces interference and more efficiently uses the RF spectrum. One of the challenges in building these systems is figuring out how to schedule and steer the beams with the undetermined distribution of user terminals and their movement. Nokia, for example, has a system with 128 antennas that all work together to form 32 beams. It wants to schedule up to four beams in a specified amount of time. The number of possible ways to schedule four of 32 beams mathematically adds up to more than 30,000. There’s simply not enough processing power on a base station to quickly find the best schedule for that many combinations. A well-trained neural network can solve the problem more efficiently by predicting the best schedules on demand.
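The size of this search space is easy to verify. The sketch below counts the ways to choose four of 32 beams and then runs a brute-force search over all of them. The per-beam gains and the adjacent-beam penalty in `schedule_score` are made-up illustrative values, not a model of any real base station. The point is only that exhaustive search must score every combination, which is the cost a trained predictor aims to avoid.

```python
import math
import random
from itertools import combinations

NUM_BEAMS = 32
BEAMS_PER_SLOT = 4

# Number of distinct ways to pick 4 beams out of 32.
num_schedules = math.comb(NUM_BEAMS, BEAMS_PER_SLOT)
print(f"Possible schedules: {num_schedules}")  # 35960

# Hypothetical per-beam utility, fixed by a seed so runs are repeatable.
random.seed(0)
beam_gain = [random.uniform(0.0, 1.0) for _ in range(NUM_BEAMS)]

def schedule_score(beams, adjacent_penalty=0.3):
    """Toy objective: total gain minus a penalty for each adjacent beam pair."""
    score = sum(beam_gain[b] for b in beams)
    # combinations() yields sorted tuples, so neighbors differ by exactly 1.
    adjacent_pairs = sum(1 for a, b in zip(beams, beams[1:]) if b - a == 1)
    return score - adjacent_penalty * adjacent_pairs

# Exhaustive search: every candidate schedule is scored once.
evaluated = 0
best_schedule, best_score = None, float("-inf")
for beams in combinations(range(NUM_BEAMS), BEAMS_PER_SLOT):
    evaluated += 1
    score = schedule_score(beams)
    if score > best_score:
        best_schedule, best_score = beams, score

print(f"Schedules evaluated: {evaluated}")
print(f"Best schedule under the toy objective: {best_schedule}")
```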

From a carrier perspective, AI technology can be used to identify patterns of change in user distribution and forecast that distribution by mining historical user data. In addition, by learning from the historical data, the correspondence between radio quality and optimal weights can be determined. Furthermore, Massive MIMO sites can automatically identify different scenarios, such as a football game or a concert at a stadium, and adjust the weights for each scenario to obtain the best user coverage.

Different research groups are looking for ways to apply neural networks and DL to help Massive MIMO systems solve the optimization problem. For example, researchers from Lviv Polytechnic National University and Technical University of Košice proposed a deep adversarial reinforcement learning algorithm to train neural networks with the help of two more networks and provide nearly optimal antenna diagrams for many scenarios without solving mathematically complex optimization problems.

Similarly, AI can help with other predictions for tasks like indoor positioning and feedback uplink and downlink channel configuration from many users.

Spectrum sharing with AI

5G uses mmWave frequencies to take advantage of wide bandwidths unavailable in sub-6 GHz ranges. Though mmWave spectrum may not be scarce, it is not very reliable due to its propagation characteristics. At mmWave frequencies, severe channel variations occur due to blockage by cars, trees, solid building material, and even human body parts. As a result, a hybrid of spectrum landscapes featuring low and high frequencies is necessary to maintain seamless network connectivity and enable 5G verticals. This hybrid spectrum includes microwave and mmWave bands and different types of licensing.

AI allows for the smart scheduling and cooperation of different spectrum portions because learning and inferencing can be implemented based on user behaviors and network conditions. Specifically, neural networks can help multi-RAT base stations manage real-time resources. They also enable self-planning, self-organizing, and self-healing where a group of autonomous AI agents are distributed among different base stations.

DARPA Spectrum Collaboration Challenge

mmWave is one way to find available spectrum, but it presents challenges that make it impractical for many use cases. Effective utilization of sub-6 GHz spectrum is just as important.

The task of managing the spectrum becomes more complex as more independent devices compete for the spectrum. In unlicensed range applications for which no single operator can manage the devices, the situation is even more complex. A frequency-hopping technique widely used in Bluetooth is one way to self-manage spectrum usage, but it works only up to a point. It depends on the availability of unused spectrum, and if too many radios are trying to send signals, unused spectrum may not be available.
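A simplified model shows why hopping alone stops working as the band fills up. The sketch assumes each radio picks one of `num_channels` channels independently and uniformly at random for every hop, and treats any two radios on the same channel as a collision. The default of 79 channels corresponds to classic Bluetooth. Real systems use adaptive hopping and other mechanisms, so the numbers are illustrative only.

```python
import random

def clear_hop_probability(num_radios, num_channels=79):
    """Probability that one radio's hop is not shared with any other radio.

    Assumes independent, uniformly random channel choices per hop.
    """
    return (1 - 1 / num_channels) ** (num_radios - 1)

def simulate_clear_hops(num_radios, num_channels=79, trials=20000, seed=1):
    """Monte Carlo estimate of the same probability, as a cross-check."""
    rng = random.Random(seed)
    clear = 0
    for _ in range(trials):
        mine = rng.randrange(num_channels)
        # The hop is clear only if every other radio lands elsewhere.
        if all(rng.randrange(num_channels) != mine
               for _ in range(num_radios - 1)):
            clear += 1
    return clear / trials

for radios in (2, 10, 50, 200):
    analytic = clear_hop_probability(radios)
    simulated = simulate_clear_hops(radios)
    print(f"{radios:4d} radios: analytic {analytic:.3f}, simulated {simulated:.3f}")
```

Under these assumptions, the share of collision-free hops falls steadily as radios are added, which matches the observation that hopping depends on unused spectrum being available.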

To tackle spectrum scarcity, the US Defense Advanced Research Projects Agency (DARPA) created the Spectrum Collaboration Challenge (SC2), a three-year open competition in which teams from around the world rethink the spectrum-management problem. Teams design new radios that use AI so they can learn how to share spectrum with their competitors and reach the ultimate goal of increasing overall data throughput. The teams work with dozens of radios in different scenarios trying to share a spectrum band simultaneously.

After two years of competition, for the first time, teams have designed autonomous radios that collectively share wireless spectrum to transmit far more data than possible by assigning exclusive frequencies to each radio. The main goal was for teams to develop AI-managed radio networks capable of completing more tasks collectively than if each radio used an exclusive frequency band.

Testing these radios in the real world was impractical because judges could not guarantee the same wireless conditions for each team that competed. Also, moving individual radios around to set up each scenario and each match was far too complicated and time consuming.

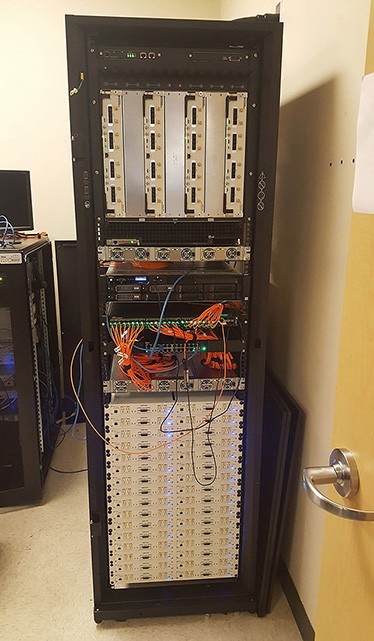

So, DARPA built Colosseum, the world’s largest RF emulation testbed. Housed in Laurel, Maryland, at the Johns Hopkins University Applied Physics Laboratory, Colosseum occupies 21 server racks, consumes 65 kW, and requires roughly the same amount of cooling as 10 large homes. It can emulate more than 65,000 unique interactions, such as text messages or video streams, among 128 radios at once. The 64 FPGAs handle the emulation by working together to perform more than 150 trillion floating-point operations per second (150 teraflops).

The RF part of Colosseum consists of NI USRP devices working as a giant multichannel SDR. NI’s SDR solution, with its native synchronization abilities and high-bandwidth data streaming and processing, is a vital part of the system enabling real-time operation with real-world signals. In short, the NI SDR solution used in Colosseum and SC2 helps connect AI and wireless communications researchers to solve the spectrum-sharing problem effectively.

For each match, radios are plugged in to “broadcast” RF signals straight into Colosseum. This testbed has enough computing power to calculate how those signals will behave according to a detailed mathematical model of a given environment. For example, Colosseum features emulated walls off which signals “bounce.” Signals are partly “absorbed” in the emulated rainstorms and ponds.

During the competition, the teams demonstrated that their AI-managed radios could share spectrum to collectively deliver more data than if the radios had used exclusive frequencies.

AI for Telecom Carriers

Complex digital signal processing algorithms are needed in many 5G applications. These algorithms can benefit from being constantly updated as more data sets about behavior are available, which is possible by adding AI into 5G systems.

Companies such as ZTE believe that AI can help telecom carriers optimize their investment, reduce costs, and improve overall efficiency. Some of the areas where AI can be used to improve 5G networks are precision 5G network planning, capacity expansion forecasting, coverage auto-optimizing, smart MIMO, dynamic cloud network resource scheduling, and 5G smart slicing. In smart network planning and construction, machine learning and AI algorithms can be used to analyze multidimensional data, especially cross-domain data. For example, O-domain data, B-domain data, geographical information, engineering parameters, historical KPIs, and historical complaints in a region, if analyzed with AI algorithms, can help businesses reasonably forecast their growth, peak traffic, and resource utilization in a region.

Network slicing with AI

Network slicing is key because it serves a variety of 5G use cases. It can ensure a good quality of experience (QoE) for industrial users in a virtual network if a panoramic data map of the slices is constructed. Information about slice users, subscriptions, quality of service (QoS), performance, events, and logs can be collected in real time for multidimensional analysis and then organized into a data cube. Based on the data cube, the AI brain can be used to analyze, forecast, and guarantee healthy slice operation. The experiences of different industrial users can be evaluated, analyzed, and optimized to build user portraits and keep slice operation healthy, safe, and efficient.
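As a rough illustration of the data cube, the sketch below groups slice measurements by three dimensions (slice, metric, and hour) and then averages along any subset of them. The slice names, metric names, and values are hypothetical, and the dictionary-based layout is only one possible way to organize such data, not a description of any vendor's implementation.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical measurements: (slice_id, metric, hour, value).
records = [
    ("factory", "latency_ms", 9, 4.0),
    ("factory", "latency_ms", 9, 6.0),
    ("factory", "latency_ms", 10, 8.0),
    ("factory", "throughput_mbps", 9, 120.0),
    ("video", "latency_ms", 9, 30.0),
    ("video", "latency_ms", 10, 50.0),
]

def build_cube(rows):
    """Group raw values into cells keyed by (slice_id, metric, hour)."""
    cube = defaultdict(list)
    for slice_id, metric, hour, value in rows:
        cube[(slice_id, metric, hour)].append(value)
    return cube

def roll_up(cube, slice_id=None, metric=None, hour=None):
    """Average every cell that matches the given dimensions.

    A dimension left as None is aggregated over all of its values.
    Returns None when no cell matches.
    """
    values = [
        v
        for (s, m, h), cell in cube.items()
        if (slice_id is None or s == slice_id)
        and (metric is None or m == metric)
        and (hour is None or h == hour)
        for v in cell
    ]
    return mean(values) if values else None

cube = build_cube(records)
print(roll_up(cube, slice_id="factory", metric="latency_ms"))  # one slice, all hours
print(roll_up(cube, metric="latency_ms", hour=9))              # all slices, one hour
```

A forecasting or anomaly-detection model would consume views like these rather than the raw event stream.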

In addition, the data cube and AI brain can contribute throughout the full life cycle of slices, forming a closed loop. AI can also be used to generate the slicing strategy, resolve slice faults intelligently, and optimize performance automatically to help researchers efficiently schedule slice resources and enhance configurations.

Network simulation to enable AI trials

Many AI tasks sit high in the communications stack and must be addressed with extensive computer simulations. Field trials are often unrealistic because few deployed networks operate much like 5G. Even major equipment vendors and carrier operators can afford only a few of these trials in their pilot zones. NI SDR technology helps make the trials a reality, regardless of whether AI is incorporated already.

Samsung, Nokia, Intel, AT&T, and Verizon were all early adopters of NI SDR technology for research and prototyping, and these companies are well on their way toward a significant 5G future. Several other vendor-oriented solutions from W2BI, Viavi, and Amarisoft also contain NI SDRs.

The same software-defined approach helps researchers simulate 5G device operation as the standard evolves. They can combine real-world signals from realistic use cases to produce a complex simulation of the required network infrastructure. NI SDR technology connects IT experts in AI and researchers with extensive expertise in network architecture design and planning to real-world signals and helps them conduct real-world experiments and field trials.

Conclusion

5G is here, and we are seeing initial launches in different countries. It is far from being finalized, and some 5G technologies and use cases are still in their early phases. AI can still help 5G researchers solve many problems in the physical layer with its software-defined processing, in the network architecture with its huge number of variables and parameters to optimize, and in other elements of what we call 5G networks. AI is well positioned to empower researchers to meet their PHY challenges, while NI SDR technology helps them accelerate and simplify their research.