Prototyping a Real-Time Neural Receiver with USRP and OpenAirInterface

Overview

Embedding AI in RF/wireless systems is a promising option for improving the efficiency of mobile networks in terms of power, spectrum, and performance. One of the biggest challenges in using AI technology in wireless communications is ensuring reliable and robust operation under practical deployment conditions. In this white paper, we describe a system-level benchmarking platform, based on USRP software-defined radios, that researchers and engineers can use to evaluate and optimize new AI-enhanced wireless devices. We show an example of how a neural receiver model is benchmarked against a traditional receiver in a real-time system integrated into the OpenAirInterface (OAI) end-to-end 5G/NR protocol stack.

Use of AI/ML in Wireless Communications

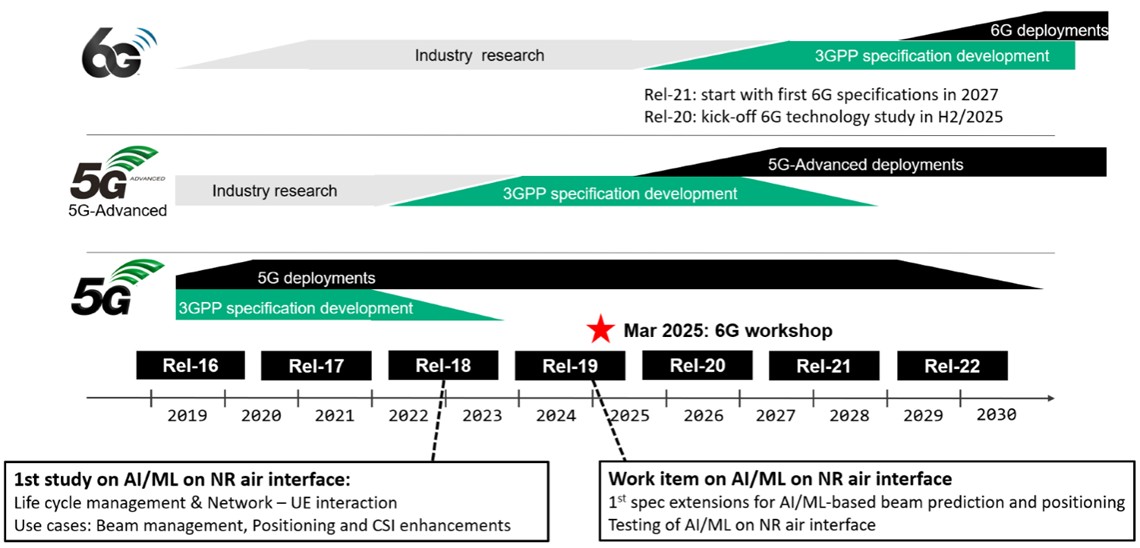

Figure 1. The Road from 6G Research to 6G Standard

In the race to add value to today’s wireless communications systems, artificial intelligence (AI)/machine learning (ML) is one of the most promising technology candidates for the different stakeholders of the wireless ecosystem. AI/ML-based algorithms can add value to wireless key performance indicators (KPIs) as well as to non-wireless KPIs.

For example, AI/ML can help mobile network operators (MNOs) improve the efficiency of their networks. AI/ML-based algorithms are already applied at the network level, for instance to improve load balancing between cells and to reduce power consumption.

The use of AI/ML technology could also help at lower layers of wireless communication systems, such as the RF layer, physical (PHY) layer, or MAC layer. The tight timing constraints of these lower layers mean that it is more difficult to develop and deploy such AI/ML-based algorithms.

In today’s AI/ML research activities on RF/PHY/MAC layers of wireless communications systems, popular topics include the following areas:

- ML-based digital predistortion (DPD) with either lower power consumption or higher network coverage

- AI/ML-based channel estimation and symbol detection (neural receiver) that reaches the required physical-layer performance at lower SNR values, leading to lower power consumption and less neighboring-cell interference

- ML-based beam management with more reliable prediction in both the time domain and the spatial domain, mitigating handset rotation effects while communicating over a mmWave link

Consortia like the 3GPP and the O-RAN Alliance are actively defining the standards for how AI/ML will apply to cellular communications. During the Release-18 timeframe (2022 to 2023), the 3GPP conducted a study on how AI/ML could be adopted and implemented in the 5G NR air interface standards, and examined which extensions would be needed on the signaling stack [1]. This study was done for an initial set of three use cases related to AI/ML:

- Channel state information (CSI) enhancements regarding CSI prediction and CSI compression

- Beam prediction improvements in the time and the spatial domain

- Positioning accuracy improvements

In these three use cases, it is considered that AI/ML-based algorithms are running either on the user-equipment (UE) side or on the network side, or the algorithms operate as so-called dual-sided models (running partly on the UE side and partly on the network side).

The outcomes of this 3GPP Release-18 study regarding AI/ML framework investigations, the potential performance benefits of AI/ML-based algorithms over traditional algorithms, the potential specification impact, and the potential interoperability and testability aspects can all be found in the technical report that concluded this research phase.

During 2024 and 2025, the 3GPP is working towards Release-19, and the first preliminary extensions will be specified for physical and protocol layers towards a framework that enables the usage of AI/ML techniques on the NR air interface. These first specification steps help to enable AI/ML-based positioning accuracy improvements and AI/ML-based beam prediction and will be based on recommendations from the technical report established during the Release-18 study.

This research effort and the preliminary specifications are expected to continue over the next couple of 3GPP releases to expand AI/ML-based solutions towards more use cases. This will eventually lead to cellular communication systems that can be called “AI/ML native.”

In 2021, the O-RAN Alliance captured requirements and an AI/ML workflow description in a technical report, mainly from the viewpoint of the MNOs. This report covers AI/ML lifecycle management, including model design, model runtime access to data, and model deployment solutions, for different learning strategies such as supervised learning, reinforcement learning, or federated learning. Furthermore, the report details AI/ML models used in O-RAN use cases that are aligned with the O-RAN use-case task group WG1. The O-RAN Alliance identified use cases such as network energy saving or massive MIMO as benefitting from the potential use of AI/ML techniques.

Last but not least, new tools like NVIDIA's Omniverse can emulate wireless scenarios that are closer to reality than more traditional stochastic approaches, because these tools leverage ray-tracing techniques to emulate map-based scenarios. These new ways to emulate wireless scenarios are becoming commoditized, which increases the applicability of AI/ML techniques in wireless communications. Real-world scenarios can be emulated, and their performance can be benchmarked using either traditional or AI/ML-based algorithms.

Neural Receiver Example

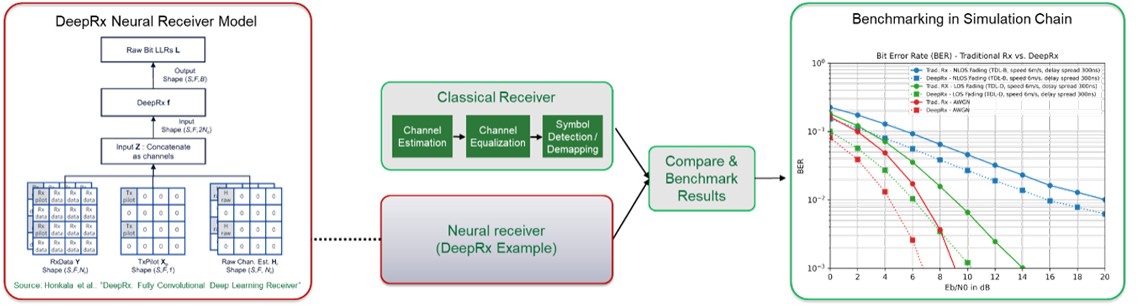

Figure 2. DeepRx Neural Receiver Example

To demonstrate how NI tools can support the AI/ML model workflow, we selected a publicly available model of a neural receiver for OFDM-based wireless communications systems, called DeepRx. The DeepRx neural receiver replaces the channel estimation, interpolation, equalization, and symbol detection blocks of a traditional OFDM receiver. It leverages learnings from image processing, interpreting the time-frequency grid of an OFDM system as a two-dimensional pixel array. For this study, we implemented the described DeepRx model using the TensorFlow framework, which we also used for model training and validation. We integrated the DeepRx TensorFlow model into the available NVIDIA Sionna link-level simulator to validate the performance in simulation. We also used the Sionna simulator to create synthetic training data that we saved in the SigMF data format.

After we trained the DeepRx model, we validated its performance in the Sionna link-level simulation against the traditional receiver. The results are shown on the right-hand side of Figure 2. The BER performance gain of the DeepRx neural receiver is clearly visible for the different channel scenarios selected here. The goal of this study was to validate these simulation results on a real system prototype, which is described in the following sections.
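To make the "resource grid as an image" idea concrete, the following Python sketch stacks a received OFDM grid and a grid of known pilots into an array indexed by subcarrier, OFDM symbol, and channel, much like an image with several color planes. The four-channel layout, the toy grid size, and the pilot pattern are illustrative assumptions and not the exact DeepRx input definition.

```python
# Illustrative only: arrange an OFDM resource grid as an image-like array.
# The 4-channel layout and the pilot pattern are assumptions made for this
# sketch; they are not taken from the DeepRx publication.

def grid_to_image(rx_grid, pilot_grid):
    """Return a nested list indexed as [subcarrier][ofdm_symbol][channel].

    rx_grid and pilot_grid are lists of rows (one row per subcarrier), each
    row holding one complex value per OFDM symbol. pilot_grid contains the
    known pilot value at pilot positions and 0 everywhere else.
    """
    image = []
    for rx_row, pilot_row in zip(rx_grid, pilot_grid):
        image.append([
            [rx.real, rx.imag, p.real, p.imag]
            for rx, p in zip(rx_row, pilot_row)
        ])
    return image


# Toy grid: 12 subcarriers (one PRB) by 14 OFDM symbols (one slot).
N_SC, N_SYM = 12, 14
rx_grid = [[complex(0.1 * k, -0.1 * l) for l in range(N_SYM)]
           for k in range(N_SC)]

# Hypothetical pilot pattern: every second subcarrier of OFDM symbol 2.
pilot_grid = [[(1 + 0j) if (l == 2 and k % 2 == 0) else 0j
               for l in range(N_SYM)]
              for k in range(N_SC)]

image = grid_to_image(rx_grid, pilot_grid)
shape = (len(image), len(image[0]), len(image[0][0]))
print(shape)  # (12, 14, 4)
```

A convolutional network can then slide two-dimensional filters over the subcarrier and symbol axes of such an array, in the same way it would over the height and width of a picture.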

OAI-Based Neural Receiver Benchmarking Platform

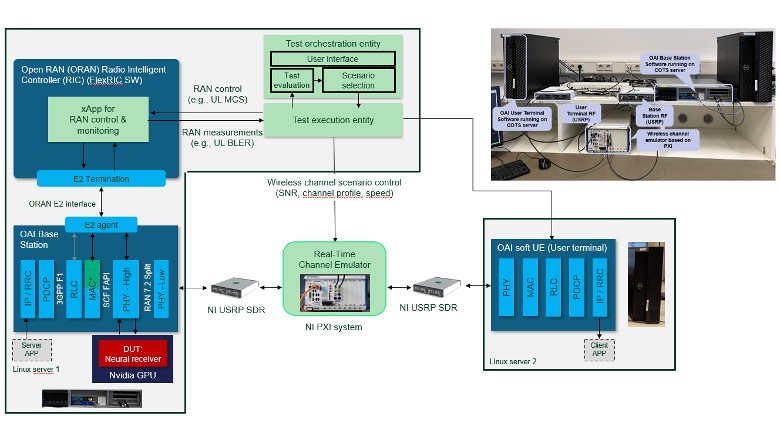

Figure 3. Neural Receiver Real-Time Benchmarking Setup

To validate the DeepRx neural receiver in a realistic prototyping environment, we leveraged the O-RAN-based open-source OAI real-time 5G protocol stack, together with the corresponding FlexRIC implementation of an O-RAN near-real-time radio intelligent controller (RIC). As a baseline, we used the OAI system configuration as described in the Research 6G Technologies using OpenAirInterface (OAI) Software solution brochure.

The OAI real-time protocol stack runs on commercial-off-the-shelf CPUs and connects to the USRP radio through an Ethernet interface. The USRP is used as the RF front end (RFFE). The key advantages of using OAI for such a system-level benchmarking platform are:

- It is open-source and cost-effective.

- It is modifiable and customizable.

- It operates in real time.

- It is 5G/NR compliant.

- It provides the core network, RAN, and UE components.

- It uses an O-RAN-based architecture.

The goal is to recreate the performance benchmarks between the neural and the traditional receiver and to compare them with simulation. In this system prototype, we wanted to compare the DeepRx neural receiver against a traditional uplink (UL) receiver. Specifically, we wanted to look at the uplink block error rate (UL BLER) for an increasing signal-to-noise ratio (SNR). Usually, in a 3GPP-compliant system, the MAC scheduler selects the best uplink transport block configuration, with a corresponding modulation and coding scheme (MCS), depending on the channel conditions. To fix the UL MCS and look at the UL BLER, we used the available PHY test mode in OAI, which bypasses the conventional MAC scheduler and allows specific parameters such as the UL MCS to be selected.

In this system prototype, we can control some of the PHY parameters from a Python-based test entity. This test entity interfaces to a specific xApp running on the FlexRIC controller that has a connection to the OAI gNB PHY test MAC through the O-RAN E2 interface. Through the same path, the test entity can monitor the UL BLER measured at the OAI UL receiver. The test entity also configures the channel parameters. We use a channel emulator based on an NI PXI system and an NI VST device to adjust the SNR and run fading channel models, for both mobile and non-mobile scenarios. This testbed covers the following aspects of system validation:

- Test a range of selected modulation and coding schemes

- Test a range of channel parameters, such as SNR and fading channels

- Switch between the neural and the traditional uplink receiver

- Monitor the BLER achieved by the selected uplink receiver
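The test flow listed above can be sketched as a small sweep loop. The Python below is a minimal illustration, not the actual test entity: the function `run_benchmark` and the `measure_bler` callback are hypothetical names, and the callback stands in for whatever configures the receiver, MCS, and channel emulator and then reads back the UL BLER. The dry-run stub returns a placeholder value and performs no measurement.

```python
# Minimal sketch of a benchmark sweep. All names are hypothetical; the real
# test entity talks to an xApp and a channel emulator, which is not shown.
from typing import Callable, Dict, Iterable, List, Tuple

Curve = List[Tuple[float, float]]  # list of (snr_db, bler) pairs


def run_benchmark(
    measure_bler: Callable[[str, int, float], float],
    receivers: Iterable[str],
    mcs_values: Iterable[int],
    snr_values_db: Iterable[float],
) -> Dict[Tuple[str, int], Curve]:
    """Return one BLER-versus-SNR curve per (receiver, MCS) combination."""
    receivers = list(receivers)
    mcs_values = list(mcs_values)
    snr_values_db = list(snr_values_db)
    results: Dict[Tuple[str, int], Curve] = {}
    for receiver in receivers:
        for mcs in mcs_values:
            results[(receiver, mcs)] = [
                (snr, measure_bler(receiver, mcs, snr)) for snr in snr_values_db
            ]
    return results


# Dry-run stub: records what would be configured, measures nothing.
calls = []


def dry_run_measure(receiver: str, mcs: int, snr_db: float) -> float:
    calls.append((receiver, mcs, snr_db))
    return 0.0  # placeholder value, not a measurement


results = run_benchmark(
    dry_run_measure,
    receivers=["traditional", "neural"],
    mcs_values=[11, 15, 20],
    snr_values_db=[5.0, 5.5, 6.0],
)
print(len(results), len(calls))  # 6 18
```

Passing the measurement step in as a callback keeps the sweep logic independent of the hardware, so the same loop can be exercised without a radio attached.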

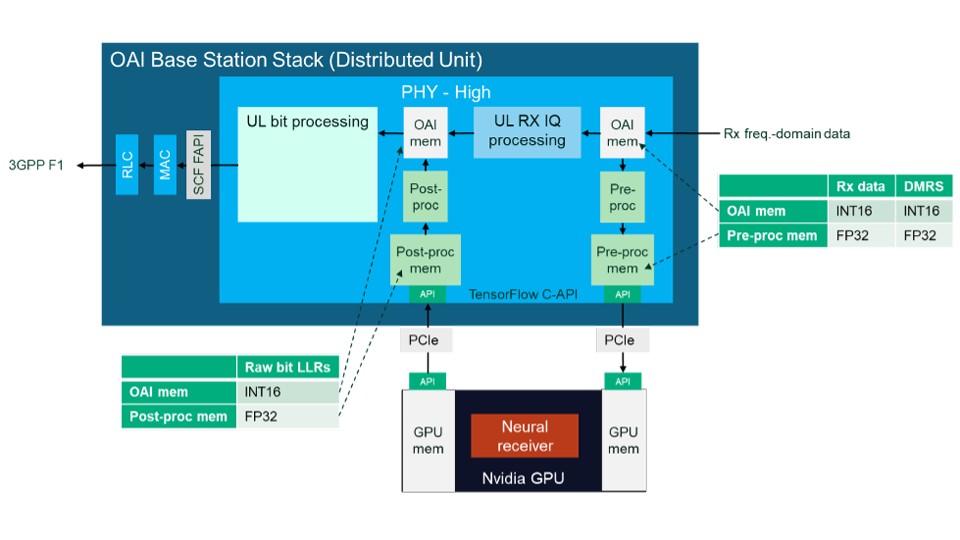

Figure 4. Neural Receiver Integration in OAI UL Receiver

To compare the traditional UL receiver implemented in the OAI protocol stack against the neural receiver, we connected an NVIDIA GPU to the CPU-based compute system where OAI is running. We tested the system with an NVIDIA A100 GPU as well as an RTX 4090 GPU, and both worked. We integrated the neural receiver into the real-time OAI gNB receiver so that we can easily switch between the traditional and the neural receiver.

One specific feature of this system prototype is that the neural receiver model is implemented in TensorFlow running on an NVIDIA GPU, the same framework that is used for model training and link-level simulation. We call the neural receiver model from the OAI code directly through the available TensorFlow RT C-API, which simplifies the integration of ML models into OAI significantly. The key advantage of this architecture is that we do not need to reimplement the neural receiver model in another environment, which enables a seamless workflow from training to simulation to real-time deployment. Only a minor conversion was required, from the TensorFlow simulation model to the TensorFlow RT model. This procedure allowed faster iterative improvement of the ML model.

Initial Benchmarking Results

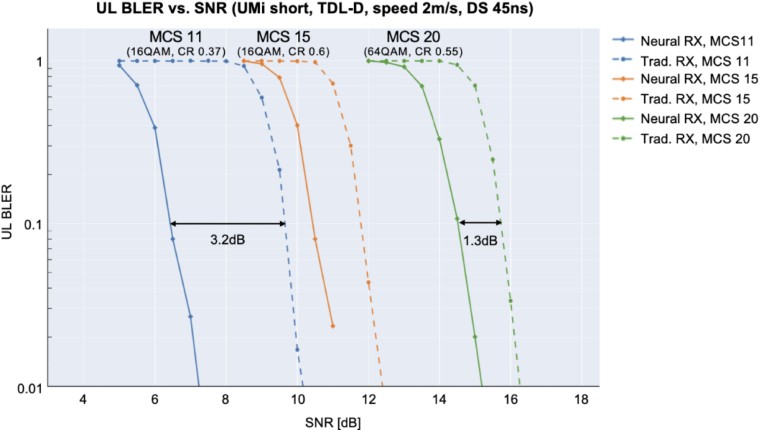

Figure 5. Initial Neural Receiver Benchmarking Results

With the described end-to-end system-level benchmarking platform, a realistic comparison between the neural receiver and a traditional receiver is possible. Initial results are shown in Figure 5 where we used the following system configuration:

- Single 5G NR link between one gNB and one UE

- Uplink/downlink TDD

- 40 MHz channel bandwidth with a subcarrier spacing (SCS) of 30 kHz

- Allocated uplink bandwidth of six PRBs (2.16 MHz)
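The allocated bandwidth in the last item follows directly from the NR resource grid, in which one PRB spans 12 subcarriers. As a quick check, the short Python sketch below reproduces the 2.16 MHz figure; the function name is chosen here for illustration only.

```python
# Sanity check of the allocated uplink bandwidth quoted in the list above.
SUBCARRIERS_PER_PRB = 12  # one NR physical resource block spans 12 subcarriers


def allocated_bandwidth_hz(num_prbs: int, scs_hz: int) -> int:
    """Occupied bandwidth of a contiguous PRB allocation, in Hz."""
    return num_prbs * SUBCARRIERS_PER_PRB * scs_hz


bw_hz = allocated_bandwidth_hz(num_prbs=6, scs_hz=30_000)
print(bw_hz / 1e6)  # 2.16 (MHz)
```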

After the common UE attach procedure, the UE will send transport blocks for the selected modulation and coding scheme. For this test, we used MCS-11 (16-QAM, Code Rate 0.37), MCS-15 (16-QAM, Code Rate 0.6), and MCS-20 (64-QAM, Code Rate 0.55). We show the uplink block error rate that we calculate within the OAI gNB receiver based on successful transport block transmission (CRC check). Each block error rate point is the average of 300 transport block transmissions. We configured the channel emulator to change the SNR between 5 dB and 18 dB in steps of 0.5 dB. We used an urban micro fading channel profile with a low velocity of 2 m/s and a delay spread of 45 ns.
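As a sketch of how one BLER point can be derived from per-transport-block CRC outcomes, consider the Python below. The function name and the toy input are assumptions made for illustration; the toy list is not measured data.

```python
# Sketch: one BLER point from CRC outcomes (True = CRC passed).
BLOCKS_PER_POINT = 300  # transport blocks averaged per point, as in the text


def block_error_rate(crc_passed) -> float:
    """Fraction of transport blocks whose CRC check failed."""
    outcomes = list(crc_passed)
    if not outcomes:
        raise ValueError("at least one transport block is required")
    failures = sum(1 for ok in outcomes if not ok)
    return failures / len(outcomes)


# Toy input for illustration only: 15 failed blocks out of 300.
toy_outcomes = [False] * 15 + [True] * (BLOCKS_PER_POINT - 15)
print(block_error_rate(toy_outcomes))  # 0.05

# Smallest non-zero BLER that 300 blocks can resolve.
resolution = 1 / BLOCKS_PER_POINT
print(round(resolution, 4))  # 0.0033
```

With 300 transport blocks per point, the smallest non-zero BLER that can be observed is 1/300, or roughly 0.33%, so resolving lower error rates would require more blocks per point.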

All test parameters can be pre-configured in a test scenario description file so that the test entity can run through all parameters and generate a graph as in Figure 5. One observation is that the gain of the neural receiver over the traditional receiver vanishes for higher MCS values. This merits deeper investigation to check whether the neural receiver model shows any unexpected behavior compared to the simulation.
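The format of the test scenario description file is not given here, so the following Python sketch assumes a hypothetical JSON layout purely for illustration. It uses the MCS values, SNR range, and block count from the configuration above, assumes that both SNR endpoints are included, and expands the description into individual test points.

```python
# Hypothetical scenario description; the JSON schema is an assumption made
# for this sketch and is not the format used by the actual test entity.
import itertools
import json

SCENARIO_JSON = """
{
  "receivers": ["traditional", "neural"],
  "mcs": [11, 15, 20],
  "snr_db": {"start": 5.0, "stop": 18.0, "step": 0.5},
  "transport_blocks_per_point": 300
}
"""


def expand_scenario(text: str) -> list:
    """Expand a scenario description into a flat list of test points."""
    cfg = json.loads(text)
    snr = cfg["snr_db"]
    # Integer step count avoids accumulating floating-point error.
    n_steps = round((snr["stop"] - snr["start"]) / snr["step"])
    snr_points = [snr["start"] + i * snr["step"] for i in range(n_steps + 1)]
    return [
        {
            "receiver": receiver,
            "mcs": mcs,
            "snr_db": snr_db,
            "blocks": cfg["transport_blocks_per_point"],
        }
        for receiver, mcs, snr_db in itertools.product(
            cfg["receivers"], cfg["mcs"], snr_points
        )
    ]


points = expand_scenario(SCENARIO_JSON)
print(len(points))  # 162 = 2 receivers x 3 MCS values x 27 SNR points
```

Under these assumptions, a full run covers 162 test points with 300 transport blocks each.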

One specific trade-off made for this initial real-time benchmarking system was to use a small uplink transmission bandwidth. This was done to ensure the real-time performance of the neural receiver model running on the GPU. Although we reduced the complexity of the neural receiver model by a factor of about 15 (from 700 k to 47 k parameters), it was not possible to run large channel bandwidths because of the tight latency constraints of a 3GPP system, which are on the order of 500 μs (the slot duration for a 30 kHz SCS).

The low-complexity neural receiver model was more difficult to train for a broader set of wireless transmission scenarios. This demonstrates that the described AI/ML prototyping and benchmarking platform can address the trade-off between ML model complexity and performance. It also shows the increased importance of comprehensive testing to ensure that such low-complexity models work robustly and reliably and do not show any unexpected or unwanted behavior.
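The numbers in this paragraph can be checked with a few lines of Python. In NR, the subcarrier spacing is 15 kHz times 2^μ and a slot lasts 1 ms divided by 2^μ, which gives 500 μs at 30 kHz. The helper `fits_in_slot` and its latency argument are hypothetical and only illustrate the kind of check involved; in practice the budget available to the receiver is likely smaller than a full slot, because other processing shares it.

```python
# Sketch of the latency budget and model-size arithmetic quoted above.
NUMEROLOGY = {15: 0, 30: 1, 60: 2, 120: 3}  # SCS in kHz -> numerology index mu


def slot_duration_us(scs_khz: int) -> float:
    """NR slot duration in microseconds: 1 ms divided by 2**mu."""
    return 1000.0 / (2 ** NUMEROLOGY[scs_khz])


def fits_in_slot(inference_latency_us: float, scs_khz: int) -> bool:
    """Hypothetical check: does one inference fit inside one slot?"""
    return inference_latency_us < slot_duration_us(scs_khz)


print(slot_duration_us(30))  # 500.0

# Model size reduction quoted in the text: 700 k down to 47 k parameters.
reduction_factor = 700_000 / 47_000
print(round(reduction_factor, 1))  # 14.9
```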

Summary and Conclusion

In this white paper, we described how NI USRP hardware can be used for real-time AI/ML model validation in a realistic end-to-end system. Although we used the neural receiver as an example, the general workflow and methodology, from design to test to real-world deployment, can also be applied to other AI/ML applications in wireless communications. The implementation of the AI/ML reference architecture will help researchers and engineers with these tasks and challenges:

- Work from a trained AI/ML model in simulation to an initial system-level prototype

- Validate and benchmark the AI/ML model in a real-world, system-level test environment

- Explore how to develop low-complexity yet robust ML models for real-time operation

- Investigate the trade-off between real-time capability, complexity, and performance

- Generate synchronized data sets from real-time systems

- Train and test for a broad set of scenarios and configurations

- Automatically execute pre-selected test sequences through the test entity

- Emulate wireless scenarios using statistical channel models including mobility

- Validate over the air (OTA) in end-to-end testbed environments


These steps will help develop more robust and reliable AI/ML models for realistic deployment conditions and help us understand the areas where AI can lead to real improvements over current networks. Ultimately, this will lead to more trust and utility in AI-enhanced wireless communications systems by validating key use cases and by uncovering possible unexpected and unwanted behavior.

References

[1] 3GPP Revised study item description RP-221348: Study on Artificial Intelligence (AI)/Machine Learning (ML) for NR air interface, June 2022, available online: https://www.3gpp.org/ftp/tsg_ran/TSG_RAN/TSGR_96/Docs/RP-221348.zip

[2] 3GPP Technical Report 38.843: Study on Artificial Intelligence (AI)/Machine Learning (ML) for NR air interface, V18.0.0, Dec 2023, available online: https://www.3gpp.org/ftp/Specs/archive/38_series/38.843/38843-i00.zip

[3] O-RAN Alliance technical report O-RAN.WG2.AIML: AI/ML workflow description and requirements, v01.03, July 2021, available online: https://specifications.o-ran.org/specifications

[4] NI Data Recording API, https://www.ni.com/en/solutions/electronics/5g-6g-wireless-research-prototyping/empower-ai-ml-research-6g-networks-rf-data-recording.html

[5] Signal Metadata Format (SigMF), https://github.com/sigmf/SigMF

[6] Honkala et al., DeepRx: Fully Convolutional Deep Learning Receiver, IEEE Transactions on Wireless Communications, Vol. 20, No. 6, June 2021

[7] NVIDIA Sionna: An Open-Source Library for 6G Physical-Layer Research, https://developer.NVIDIA.com/sionna

[8] OpenAirInterface 5G RAN Project, https://openairinterface.org/oai-5g-ran-project