How Self-Driving Cars Work to Drive Transportation Forward

Autonomous vehicle technology is being tested now, but obstacles remain. Learn how self-driving cars work and about NI’s role in testing and validation.

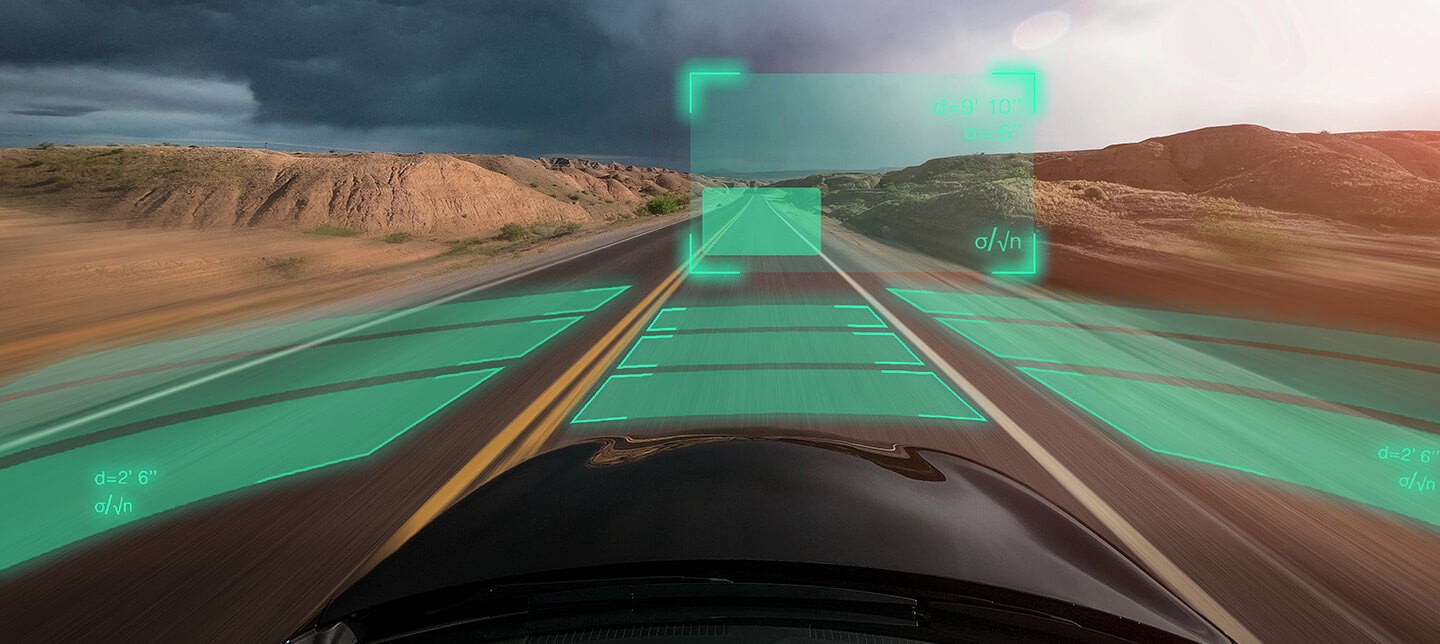

Self-driving cars use sensors and cameras to collect data on the world around them, then send this information to onboard computers that use powerful machine learning algorithms to process it and instruct automotive systems to respond accordingly.

The appeal of autonomous vehicles is easy to understand: a car or truck that can safely drive from point A to point B without human intervention frees people up to read a book, take a nap, or just enjoy the scenery.

The reality of self-driving cars is more complicated, as automakers are inclined to move cautiously to ensure vehicles are safe and meet regulatory requirements. While no fully autonomous vehicles are on the market today, many companies are testing them at tracks and in the real world.

What is Autonomous Driving?

Autonomous driving refers to vehicles navigating from one location to another without human input. A fully self-driving car or truck operates without a driver, relying on a combination of advanced sensors, sophisticated computers, and connectivity with other road users and the surrounding environment, including infrastructure. Artificial intelligence (AI) plays a pivotal role in helping these vehicles make informed decisions when they encounter both routine and unexpected road conditions and obstacles.

Safety is the non-negotiable foundation of autonomous driving innovation. Automakers must use rigorous testing to ensure that self-driving vehicles meet safety standards and regulations, which is a prerequisite for the widespread consumer trust and adoption that driverless vehicles need. NI is doing its part by providing the hardware and software solutions needed to validate the systems that will drive autonomous vehicles forward.

Self-Driving Car Technology

Autonomous vehicles rely on technologies including automotive LiDAR, radar, cameras, powerful computers, artificial intelligence software, and V2X connectivity to operate safely. Here’s a short explanation of how these components work together to make autonomous driving possible.

Automotive LiDAR

Short for Light Detection and Ranging, LiDAR uses lasers to detect objects and measure the distance to them, typically by timing how long a laser pulse takes to reflect back to the sensor. An onboard computer combines these measurements into a 3D map of the scene, called a point cloud, enabling the car to “see” other vehicles, people, animals, and obstacles to drive safely.
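For a time-of-flight LiDAR, the range arithmetic is simple: the pulse travels to the object and back, so the distance is the speed of light times half the round-trip time. The minimal Python sketch below assumes an idealized sensor that reports a round-trip time plus the beam’s azimuth and elevation angles; it converts each return into an (x, y, z) point, the building block of a point cloud. The function names, axis convention, and sample returns are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, m/s


def tof_range_m(round_trip_s: float) -> float:
    """Range to the target: the pulse covers the distance twice (out and back)."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Convert a spherical measurement into Cartesian sensor coordinates.

    Assumed convention: x forward, y left, z up; azimuth measured from x toward y,
    elevation measured upward from the x-y plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


# Hypothetical returns: (round-trip time in seconds, azimuth in degrees, elevation in degrees)
returns = [(200e-9, 0.0, 0.0), (400e-9, 30.0, 2.0), (66.7e-9, -45.0, -5.0)]
point_cloud = [to_point(tof_range_m(t), az, el) for t, az, el in returns]

for x, y, z in point_cloud:
    print(f"x={x:6.2f} m  y={y:6.2f} m  z={z:6.2f} m")
```

A 200-nanosecond round trip corresponds to roughly 30 m. A real sensor repeats this for many beams per scan, which is what fills out the point cloud.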

Radar

Modern vehicles can use radar for many features, including blind-spot detection and automatic parking assist. A radar sensor transmits radio waves and receives their reflections to detect objects and measure their distance and relative speed. If an obstacle is nearby, the vehicle can stop or take evasive action to avoid a collision.
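Radar gets distance the same way LiDAR does (radio waves also travel at the speed of light), and it gets relative speed from the Doppler shift of the echo. For a two-way reflection, the shift is f_d = 2·v·f_c / c, so v = f_d·c / (2·f_c). The sketch below assumes a carrier in the 76 to 81 GHz band used by many automotive radars (77 GHz here) and a hypothetical echo; many production radars use frequency-modulated chirps (FMCW) rather than timing a pulse directly, so treat this only as an illustration of the underlying relationships.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves travel at the speed of light


def radar_range_m(round_trip_s: float) -> float:
    """Distance to the reflector: the wave travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def closing_speed_m_s(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Relative radial speed from the two-way Doppler shift f_d = 2 * v * f_c / c.

    A positive result means the object is approaching (assumed sign convention).
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


# Hypothetical echo: returns after 0.5 microseconds with a +5 kHz Doppler shift
print(f"range: {radar_range_m(0.5e-6):.1f} m")
print(f"closing speed: {closing_speed_m_s(5e3):.2f} m/s")
```

Here the object is about 75 m away and closing at roughly 9.7 m/s (about 35 km/h), which is the kind of information the vehicle needs before deciding to stop or steer around it.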

Cameras

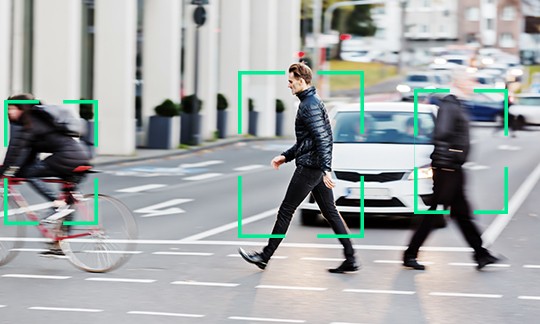

Visible-light cameras see the world in much the same way humans do, so autonomous vehicles often use them to identify traffic signs, lane lines, and other markings that would be difficult or impossible for LiDAR or radar to capture. Cameras are crucial for classifying objects into categories such as cars, trucks, pedestrians, and bicyclists.
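Neural-network classifiers commonly finish with one raw score (a logit) per category; a softmax turns those scores into probabilities, and the most probable category becomes the label. The sketch below assumes a hypothetical camera network has already produced the logits for one detected object; the category list mirrors the examples above and the scores are made up.

```python
import math

CLASSES = ["car", "truck", "pedestrian", "bicyclist"]


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: list[float]) -> tuple[str, float]:
    """Return the most probable category and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs[best]


# Hypothetical logits for one object in a camera frame
label, confidence = classify([0.3, 0.1, 2.4, 1.1])
print(label, round(confidence, 2))
```

Keeping the probability alongside the label lets later stages treat a low-confidence detection more cautiously than a confident one.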

Electronic Control Units (ECUs)

ECUs are small computers distributed throughout a vehicle, each supporting one or more systems. They process and respond to data from sensors connected to components like the engine, transmission, and brakes. Advanced driver assistance system (ADAS) and autonomous driving features rely on the computing power of ECUs for safe and effective operation.

Onboard High-Performance Computers (HPCs)

Automakers have found that relying on an ever-expanding number of ECUs can cause problems such as latency and a simple lack of physical space to house them. Autonomous driving requires high-performance computers that can collect, process, and respond to sensor data quickly. An HPC can process information from multiple ECUs and take the necessary actions, reducing the burden on individual control units. HPCs in self-driving vehicles must be able to make decisions at least as fast as humans can.
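Why decision speed matters is easiest to see as distance: at constant speed, every second of delay is distance covered before any response begins. The sketch below compares an automated pipeline against a human driver at 100 km/h. The per-stage latencies and the 1.5 s human reaction time are assumptions chosen for illustration, not measured figures for any vehicle or driver.

```python
def distance_during_latency_m(speed_kmh: float, latency_s: float) -> float:
    """Distance covered at constant speed before any response begins."""
    return speed_kmh / 3.6 * latency_s  # convert km/h to m/s, then multiply by time


# Hypothetical per-stage latencies for an automated pipeline, in seconds
pipeline_s = {"sensor capture": 0.03, "perception": 0.05, "planning and control": 0.02}
system_latency_s = sum(pipeline_s.values())

human_reaction_s = 1.5  # assumed figure; real human reaction times vary widely

for who, latency in [("system", system_latency_s), ("human", human_reaction_s)]:
    print(f"{who}: {latency:.2f} s -> {distance_during_latency_m(100, latency):.1f} m at 100 km/h")
```

Under these assumptions the system starts responding after about 2.8 m, the human after about 41.7 m. Adding stages or handoffs between ECUs adds to the system total, which is one reason consolidating processing on an HPC is attractive.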

Artificial Intelligence

AI and machine learning algorithms are essential for interpreting sensor data so that self-driving cars operate safely. Tasks that are simple for humans, such as distinguishing a scrap of rubber in the road from a small animal, require programming and continual training for machines. Unexpected situations, such as a ball bouncing across your path (and anticipating who might chase after it) or a traffic accident blocking the way, must be handled carefully, because a mistake by the computer could lead to injury or death.

V2X Connectivity

V2X, or Vehicle-to-Everything, enables automobiles to exchange real-time data with other vehicles, infrastructure, pedestrians, and the environment around them. This constant flow of information enhances situational awareness, allowing cars to anticipate and react to potential hazards beyond their line of sight. Whether it is helping a car adjust its speed to catch green lights or alerting nearby cars to sudden braking, V2X serves as a digital nerve center for safer, more efficient, and more interconnected transportation systems.
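One V2X use case is adjusting speed so the car reaches a traffic light while it is green. If the signal broadcasts when it will turn green and red (for example in a signal phase and timing message), the car can pick a constant speed that lands its arrival inside that window. The sketch below is a simplified, hypothetical version: it assumes constant speed, ignores acceleration and other traffic, and the function name, parameters, and 2 m/s minimum speed are illustrative.

```python
from typing import Optional


def advisory_speed_m_s(distance_m: float, green_start_s: float, green_end_s: float,
                       speed_limit_m_s: float, min_speed_m_s: float = 2.0) -> Optional[float]:
    """Highest constant speed, up to the limit, that reaches the stop line during green.

    green_start_s and green_end_s are seconds from now (green_start_s <= 0 means green now).
    Returns None when no reasonable constant speed works, so the car should plan to stop.
    """
    if green_end_s <= 0:
        return None  # the green window has already ended
    # Arriving no earlier than green_start_s requires v <= distance / green_start_s.
    if green_start_s > 0:
        fastest = min(speed_limit_m_s, distance_m / green_start_s)
    else:
        fastest = speed_limit_m_s
    # Arriving no later than green_end_s requires v >= distance / green_end_s.
    slowest = distance_m / green_end_s
    if fastest < max(slowest, min_speed_m_s):
        return None
    return fastest


limit = 50 / 3.6  # 50 km/h expressed in m/s
v = advisory_speed_m_s(distance_m=200, green_start_s=20, green_end_s=50, speed_limit_m_s=limit)
print(None if v is None else f"advise {v * 3.6:.0f} km/h")
```

With the light 200 m ahead and green from 20 s to 50 s, the sketch advises 36 km/h, so the car arrives as the light turns green instead of stopping and restarting.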

Are There Any Self-Driving Cars Today?

There are no fully self-driving cars in commercial production as of 2024. According to Engadget, Mercedes announced it had received certification for an SAE Level 3 system (one that may require occasional human intervention while driving), making it the first automaker to achieve this feat. These features are not yet widely available. However, many modern vehicles are equipped with Advanced Driver Assistance Systems (ADAS) that offer some level of automation. Common ADAS features include forward collision monitoring, automatic braking, blind-spot monitoring, adaptive cruise control, and lane departure warning systems. With automatic braking, for example, the vehicle applies the brakes itself when it determines that a collision is likely.
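A common way to express “a collision is likely” is time to collision (TTC): the remaining gap divided by the closing speed. The sketch below shows the core of a TTC-triggered braking decision. The 1.5 s threshold and the constant-speed assumption are illustrative, not values from any production system, which are considerably more involved and often warn the driver before braking.

```python
import math


def time_to_collision_s(gap_m: float, closing_speed_m_s: float) -> float:
    """Seconds until contact if both vehicles hold their current speeds."""
    if closing_speed_m_s <= 0:
        return math.inf  # gap is steady or growing: no collision predicted
    return gap_m / closing_speed_m_s


def should_brake(gap_m: float, closing_speed_m_s: float, threshold_s: float = 1.5) -> bool:
    """Request automatic braking when TTC drops below a threshold (value is illustrative)."""
    return time_to_collision_s(gap_m, closing_speed_m_s) < threshold_s


print(should_brake(gap_m=20.0, closing_speed_m_s=10.0))  # TTC 2.0 s -> False
print(should_brake(gap_m=12.0, closing_speed_m_s=10.0))  # TTC 1.2 s -> True
```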

Vehicle Automation Levels

SAE International, formerly the Society of Automotive Engineers, has created a classification system that sorts autonomous driving capabilities into six levels, SAE Level 0™ to SAE Level 5™.

Levels 0 to 2 cover ADAS features, where a human is still driving the vehicle. Levels 3 to 5 describe what are commonly thought of as self-driving abilities. Level 3 automation may require the driver to take over when the vehicle requests it, while levels 4 and 5 do not rely on a human to take over: a Level 4 vehicle drives itself within a limited set of operating conditions, and a Level 5 vehicle can drive itself under all conditions.
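Because the levels are ordered, they map naturally onto an ordered enumeration in software, for example when tagging test logs or feature configurations with the automation level they apply to. The sketch below encodes the six levels defined in SAE J3016 with shortened, hypothetical identifiers, plus two helper functions that capture the distinctions described above.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """SAE J3016 driving automation levels (identifiers shortened for this sketch)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_is_driving(level: SaeLevel) -> bool:
    """Levels 0 to 2 are ADAS: a human is still driving."""
    return level <= SaeLevel.PARTIAL_AUTOMATION


def may_request_takeover(level: SaeLevel) -> bool:
    """Only Level 3 relies on the human to take over when the system requests it."""
    return level == SaeLevel.CONDITIONAL_AUTOMATION


for level in SaeLevel:
    who = "human driving" if human_is_driving(level) else "system driving"
    note = " (may request takeover)" if may_request_takeover(level) else ""
    print(f"Level {level.value} {level.name}: {who}{note}")
```

Using `IntEnum` keeps comparisons such as `level <= SaeLevel.PARTIAL_AUTOMATION` readable while still allowing lookup by number, for example `SaeLevel(3)`.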

Why Don’t We Have Self-Driving Cars?

Here are some of the challenges standing in the way of self-driving car production for the mass market:

- Unlimited scenarios and limitations of AI—We’d likely be further along with self-driving cars if life were more like a test track. Driving down an open road is a relatively simple task, but even a short trip often presents unexpected challenges: an emergency vehicle approaching in the oncoming lane, a green light at a flooded intersection, or a funeral procession running a red light. A vehicle can encounter a practically unlimited number of scenarios, and it must handle each one safely while still getting from point A to point B. Humans are generally good at evaluating edge cases and, to a certain extent, at dealing with unknown unknowns, but machines aren’t there yet. The onboard computer must be able to understand and safely handle many more scenarios before full autonomy is possible.

- High costs—Self-driving vehicles require new technology, and that adds to the cost of the car or truck. Sensors, computers, and software must reach a certain price point before automakers consider them practical to include in their models. On the other hand, these costs are encouraging new and evolving business models for shared mobility, such as robotaxis and ride-sharing services, which can help amortize the cost across many trips.

- Traffic laws—Vehicles driving without human input will need to observe traffic laws, from respecting traffic signs to safely changing lanes and properly yielding to stopped school buses unloading children. What’s more, there are subtle variations in traffic laws from state to state (not to mention internationally). Ceding control to the vehicle will require this data to be readily available to the car or truck so it can follow the laws, even as it drives between places with different rules and regulations.

- Data security concerns—Hacking an email account or social media profile can be very damaging; it doesn’t take much imagination to understand the risks of a compromised smart vehicle. Whether a bad actor is looking to steal a car, cause accidents, or access personal data, autonomous systems must be hardened against threats with robust automotive cybersecurity software.

- Consumer skepticism—A January 2023 AAA survey found that 68% of respondents were afraid of self-driving vehicles, up 13 percentage points from the previous year. This suggests automakers face a challenge in making the technology attractive to a large audience.
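The traffic-law challenge above is partly a data problem: the vehicle needs the rules for wherever it currently is, and it needs a safe answer when it has none. The sketch below shows one way to structure that lookup. The regions, rule fields, and values are hypothetical, and the design choice of falling back to conservative defaults for an unmapped region is an assumption, not a description of any production system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LocalRules:
    right_turn_on_red: bool
    default_speed_limit_kmh: int  # limit to assume where no sign is posted


# Hypothetical regions and values, for illustration only.
RULES_BY_REGION = {
    "region_a": LocalRules(right_turn_on_red=True, default_speed_limit_kmh=50),
    "region_b": LocalRules(right_turn_on_red=False, default_speed_limit_kmh=40),
}

# Fail-safe choice when the vehicle enters a region with no loaded rules:
# assume conservative values rather than guessing permissive ones.
CONSERVATIVE_DEFAULT = LocalRules(right_turn_on_red=False, default_speed_limit_kmh=30)


def rules_for(region: str) -> LocalRules:
    """Look up the rules for a region, falling back to conservative defaults."""
    return RULES_BY_REGION.get(region, CONSERVATIVE_DEFAULT)


for region in ["region_a", "region_b", "unmapped_region"]:
    rules = rules_for(region)
    print(region, "right on red:", rules.right_turn_on_red,
          "default limit:", rules.default_speed_limit_kmh, "km/h")
```

As the car crosses from one region to another, the planner re-queries the table, so the same maneuver can be allowed on one side of a border and refused on the other.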

NI’s Role in Autonomous Driving Testing & Validation

When it comes to handing full control from human drivers to a car or truck, failure truly isn’t an option. Autonomous driving test and validation, including a focus on scenario simulation, is necessary to bring self-driving vehicles to market. NI’s hardware-in-the-loop (HIL) testing is used for evaluating LiDAR, radar, cameras, and associated vehicle sensors and electronics. Work is already well underway with companies like Jaguar Land Rover, which has partnered with NI to address myriad challenges.
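Scenario-based testing runs the same control logic against many situations and checks each one for a safe outcome. The Python sketch below is a purely software simulation, not hardware-in-the-loop testing and not NI tooling: a hypothetical TTC-based braking controller is run against a few made-up scenarios using a simple constant-deceleration model. In HIL testing, parts of a loop like this are replaced by real ECUs and sensor electronics, fed with simulated stimuli.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    initial_gap_m: float
    ego_speed_m_s: float
    lead_speed_m_s: float  # the vehicle ahead holds a constant speed


def run_scenario(s: Scenario, brake_decel_m_s2: float = 8.0, ttc_threshold_s: float = 2.0,
                 dt: float = 0.01, max_time_s: float = 20.0) -> bool:
    """Return True if the ego vehicle stops closing the gap without a collision."""
    gap, ego = s.initial_gap_m, s.ego_speed_m_s
    t = 0.0
    while t < max_time_s:
        if gap <= 0:
            return False  # collision
        closing = ego - s.lead_speed_m_s
        if closing <= 0:
            return True  # no longer closing in: safe outcome
        if gap / closing < ttc_threshold_s:
            # Hypothetical controller under test: brake at a fixed deceleration.
            ego = max(0.0, ego - brake_decel_m_s2 * dt)
        gap -= (ego - s.lead_speed_m_s) * dt  # advance the gap by one time step
        t += dt
    return gap > 0


scenarios = [
    Scenario("stopped car ahead, ample gap", 50.0, 20.0, 0.0),
    Scenario("slower car ahead", 30.0, 20.0, 10.0),
    Scenario("stopped car revealed late", 15.0, 25.0, 0.0),
]
for s in scenarios:
    print(f"{s.name}: {'PASS' if run_scenario(s) else 'FAIL'}")
```

The last scenario fails because stopping from 25 m/s at 8 m/s² takes about 39 m, far more than the 15 m available. Surfacing cases like this in simulation, before any road testing, is the purpose of broad scenario coverage.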

Since autonomous driving sits at the intersection of automotive design and technology, success often depends on collaboration among many companies. Self-driving and ADAS capabilities require more semiconductors than traditional cars and trucks do. This has led microchip suppliers, OEMs, and Tier 1 partners to rethink semiconductor supply chains and test frameworks to ensure their efforts are scalable and effective.

As the industry advances into the era of electrification and more capable ADAS and autonomous driving (AD) features, semiconductors play an increasingly prominent role in reshaping the automotive landscape. Meeting evolving business, regulatory, safety, and customer expectations will require ongoing innovation and cooperation among multiple stakeholders. This collaboration will ultimately hasten the day when people can take their eyes off the road and enjoy a safe drive across town, or from one end of the country to the other, in an autonomous vehicle.