How a Modern Lab Approach Optimizes Post-Silicon Validation

Overview

With increasing device complexity and time-to-market pressures, semiconductor companies are looking for better tools and practices to maximize the efficiency of their post-silicon validation processes. This white paper describes some of the modern lab approaches that they are adopting.

Contents

- Post-Silicon Lab Validation in the NPD Process

- Innovations in Lab Validation to Ensure Quality While Reducing Time to Market

- First Silicon Bring-up, Learning, and Debugging

- Automation Using Standardized and Scalable Infrastructure

- Design and Implementation of Abstraction Layers

- Hardware

- Specifications Tracking

- Data Management and Reporting

- Strengthening Your Validation Community

- Collaboration with Other Disciplines

- Collaboration across Product Lines

- Data Mining and Leveraging Machine Learning (ML) and Artificial Intelligence (AI)

- Modernize Your Lab with Help from NI + Soliton

- Taking the Next Step

Post-Silicon Lab Validation in the NPD Process

During post-silicon validation, engineers complete the following:

- Characterize (fully quantify) the true parametric performance of the design

- Ensure the design fully functions per specifications and works perfectly in the customer’s system/application (if known)

- Measure the design margin to determine the potential reuse of the design or its IP for future opportunities

- Push the design to its breaking point to truly understand its robustness

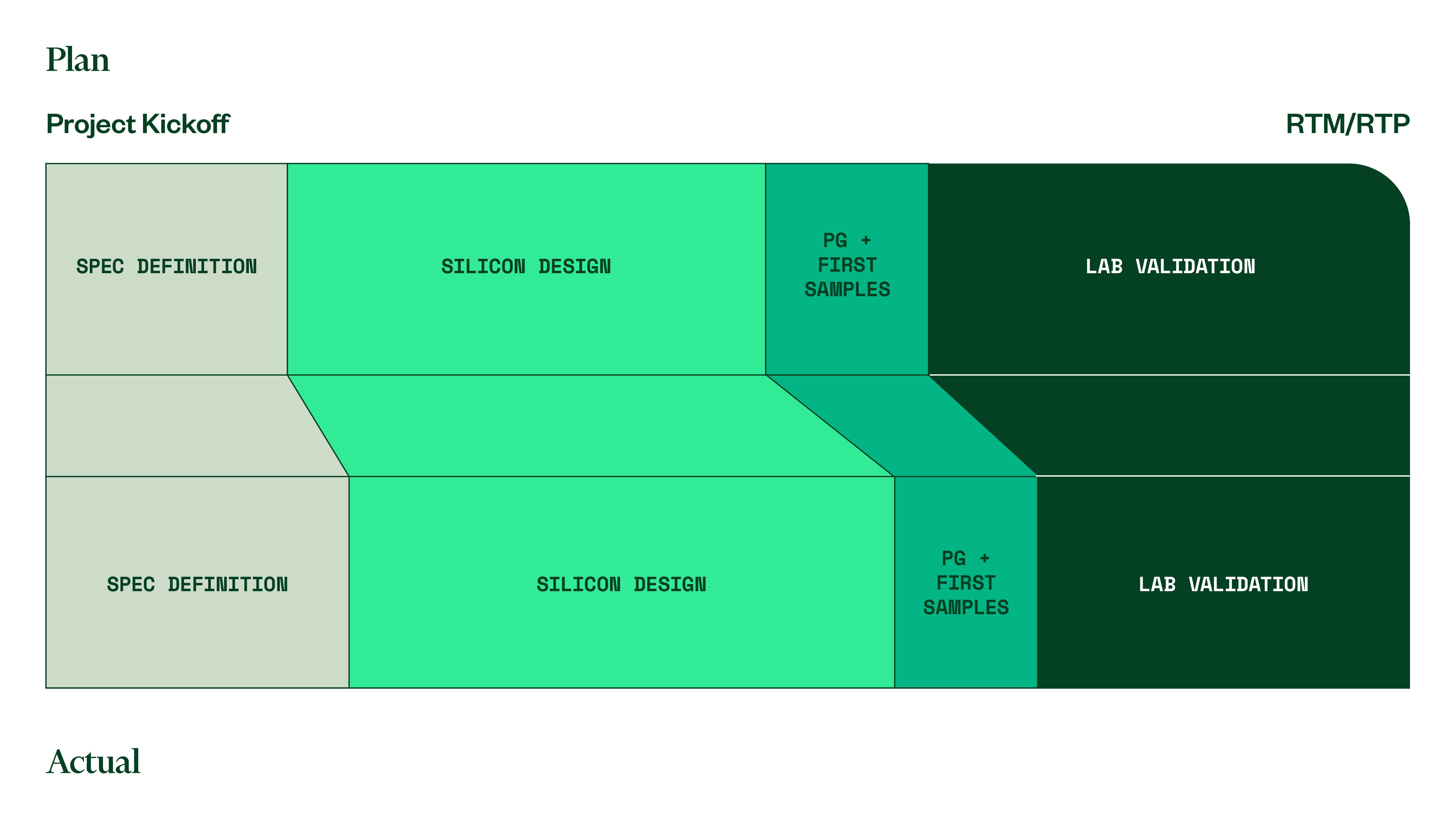

Post-silicon lab validation accounts for a sizable portion (sometimes 50% to 60%) of the total engineering effort involved in new product development (NPD). It’s considered the last chance to find design bugs or weaknesses before the product reaches the customer. But the time allocated for validation is often compressed because of delays in upstream processes like design or fabrication.

Figure 1. As the Last Step Before Release to Manufacturing/Production (RTM/RTP), Lab Validation Schedules Are Compressed

Innovations in Lab Validation to Ensure Quality While Reducing Time to Market

Significant time and effort are wasted when engineers across different teams re-create software that they could have reused had it been standardized. Standardization and innovation help engineers not only overcome the continuous schedule challenges in post-silicon lab validation but also increase test coverage and ensure the quality of the final product. Given the right software infrastructure for lab validation, engineers can focus on critical validation tasks instead of developing and debugging software. Effective standardization solutions can automate more than 80% of repetitive tasks to save valuable time for the engineers in the lab. Moreover, standardization solutions built on open platforms are future-proof because they provide multivendor hardware support and, where possible, multilanguage software support. Additionally, standardization solutions offer the flexibility engineers need to perform the variety of measurements to validate the final product’s design.

The following standardization processes and innovations help engineers focus on their core validation tasks and maximize their output.

First Silicon Bring-up, Learning, and Debugging

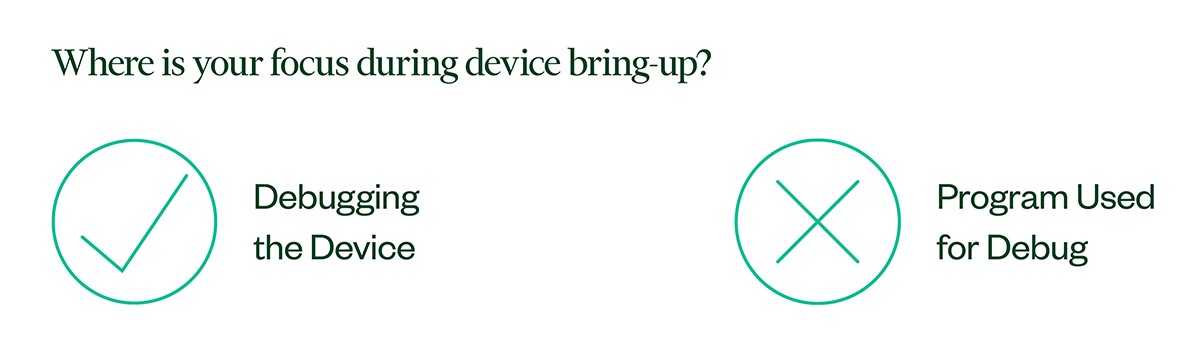

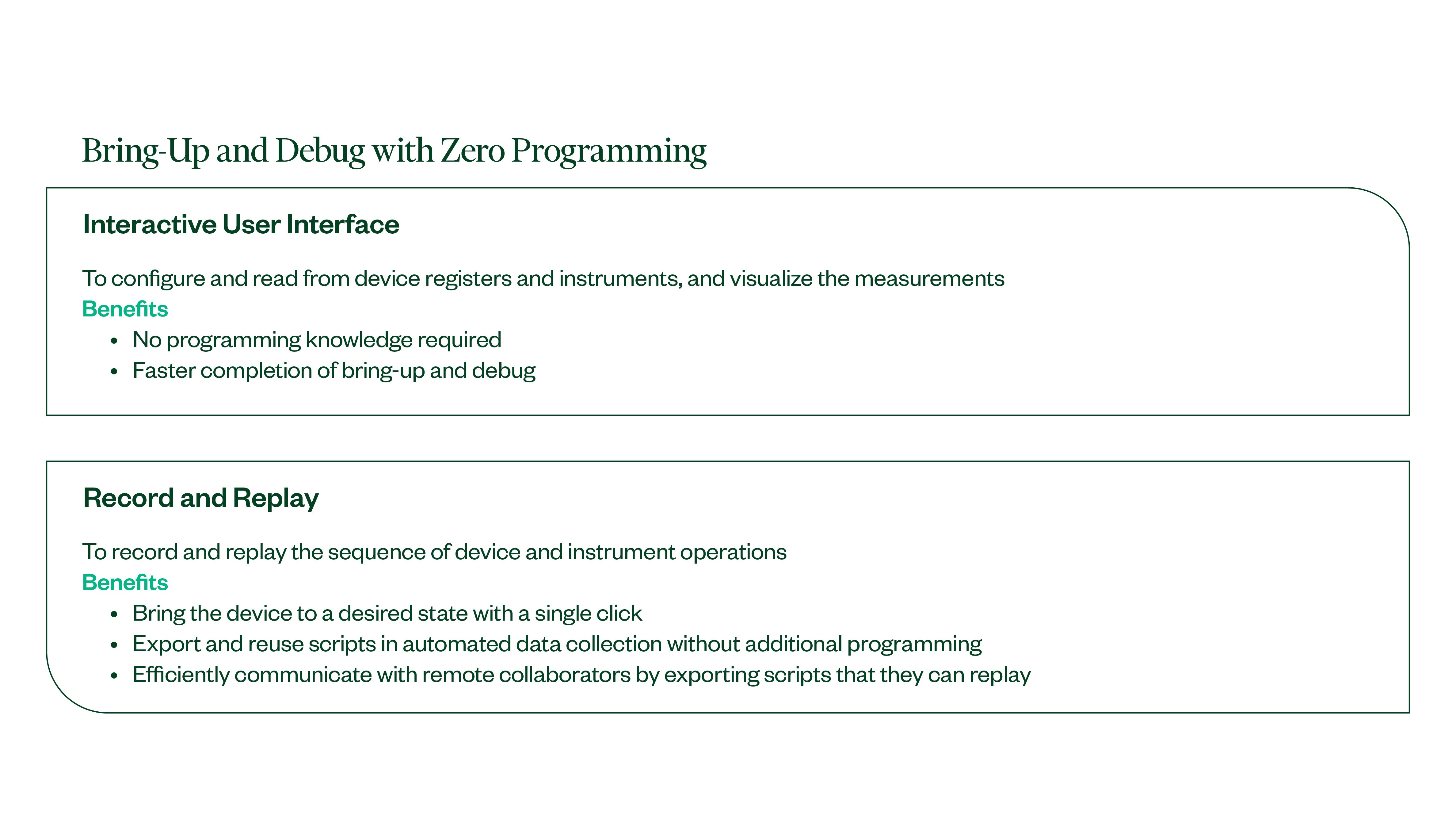

Bring-up involves powering up a new chip, establishing communication, and verifying at a high level if all the major elements of the chip are functioning. It mostly focuses on the control of multiple instruments and sources as well as much of the register communication. To verify if the device is working as expected, engineers need to visualize and analyze the measured data. With an effective bring-up tool, engineers can perform all these activities without having to write any code. Furthermore, when engineers encounter unexpected data results, they can run a variety of experiments to debug the device. This tool should enable engineers to perform all these experiments efficiently without sacrificing versatility.

Figure 2. With Compressed Timelines, Where Is Your Focus During Device Bring-Up?

Figure 3. Features that Enable Bring-Up and Debugging with Zero Programming

Automation Using Standardized and Scalable Infrastructure

After bring-up and debugging, engineers execute the automation code to collect data across the full range of inputs and environmental conditions. This process is called process-voltage-temperature (PVT) characterization. The PVT automation code involves sequencing many individual actions (steps) in the correct order. A single measurement might include steps such as the following:

- Bringing the environmental condition to the required state

- Configuring the sources

- Setting the device into the specified mode by writing to its registers

- Turning on the sources

- Measuring the output of the device by reading the data from the instruments

Engineers must repeat each measurement by sweeping all the relevant control/input parameters included in the product specifications. This entire set of measurements has to be repeated for multiple devices to characterize the process variations. Engineers then must inspect the measurement data collected from all these sweeps for anomalies, identify their root causes, and correct them. The final set of good data is analyzed statistically to derive the product’s specifications, which are included in the data sheet.
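The measurement steps and sweeps described above can be sketched in Python as follows. This is a minimal illustration, not a real driver stack: `FakeBench`, its method names, the register address and value, and the made-up device model are all assumptions introduced here so that the loop runs without hardware.

```python
class FakeBench:
    """Hypothetical stand-in for the chamber, source, register, and meter drivers."""

    def __init__(self):
        self.temp_c = 25.0
        self.volts = 0.0

    def set_temperature(self, temp_c):
        self.temp_c = temp_c

    def configure_source(self, volts):
        self.volts = volts

    def write_register(self, address, value):
        pass  # a real driver would communicate with the device here

    def output_on(self):
        pass

    def output_off(self):
        pass

    def measure(self):
        # Made-up device model so the sketch runs without hardware.
        return 0.5 * self.volts - 0.001 * (self.temp_c - 25.0)


def run_pvt_sweep(bench, devices, temperatures, voltages):
    """Repeat one measurement for every device, temperature, and voltage."""
    rows = []
    for device in devices:                          # process variation
        for temp_c in temperatures:
            bench.set_temperature(temp_c)           # environmental condition
            for volts in voltages:
                bench.configure_source(volts)       # configure the sources
                bench.write_register(0x01, 0x80)    # device mode (hypothetical register)
                bench.output_on()                   # turn on the sources
                value = bench.measure()             # read the instruments
                bench.output_off()
                rows.append({"device": device, "temp_c": temp_c,
                             "volts": volts, "value": value})
    return rows


rows = run_pvt_sweep(FakeBench(), ["dut1", "dut2"],
                     [-40.0, 25.0, 125.0], [1.7, 1.8, 1.9])
print(len(rows))  # 2 devices x 3 temperatures x 3 voltages = 18 rows
```

Temperature is placed in an outer loop here on the assumption that it is the slowest condition to change. Each row keeps its sweep conditions next to the measured value, which is what later anomaly inspection and statistical analysis would need.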

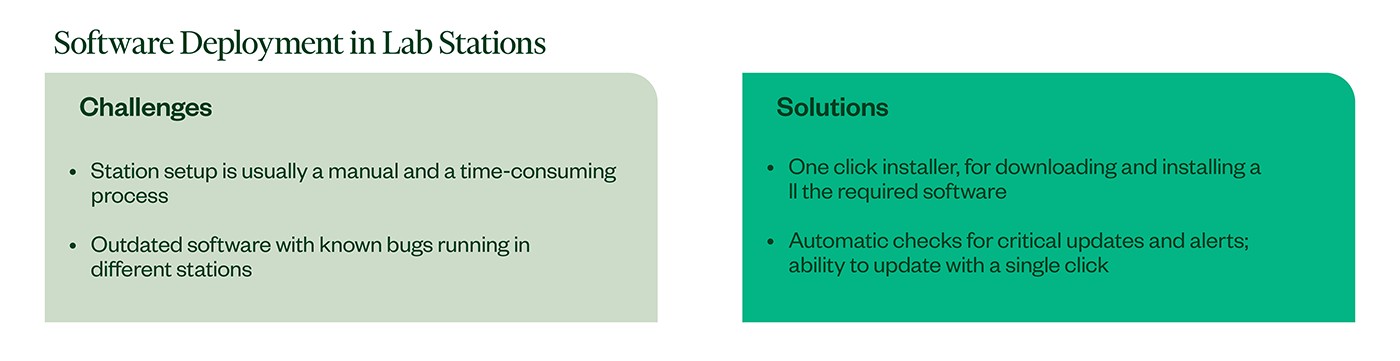

This elaborate process must be automated as much as possible to reduce time and effort. By standardizing on an open and scalable automated infrastructure, engineers in the lab can focus on high-value validation tasks and minimize the time they spend on software development and debugging.

If the instrument references are shared between the debugging tools and the automation environment, then the automation engineer can move between the two environments in a seamless and efficient manner during automation code development. Data collection can last for many hours or even days. If the data does not look right at any time, engineers must be able to pause or stop the process, examine and fix the problem using the debugging tool, and then resume the process without missing a beat.
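One way to picture the pause-and-resume behavior is a sweep runner that remembers which points are already complete. The sketch below is an assumption-laden illustration: `run_with_resume`, the `should_pause` callback, and the simulated fault are hypothetical names introduced here, and a real system would persist its progress rather than hold it in memory.

```python
def run_with_resume(points, measure, completed=None,
                    should_pause=lambda point, value: False):
    """Measure every point not yet completed; stop early if a value looks wrong.

    Returns (completed, paused_at). paused_at is None when the sweep finished.
    """
    completed = dict(completed or {})
    for point in points:
        if point in completed:
            continue                      # already collected in an earlier run
        value = measure(point)
        if should_pause(point, value):
            return completed, point       # suspicious value is not recorded
        completed[point] = value
    return completed, None


points = [(t, v) for t in (-40, 25, 125) for v in (1.7, 1.8, 1.9)]
setup = {"faulty": True}                  # simulated bench problem


def measure(point):
    temp_c, volts = point
    if setup["faulty"] and temp_c == 25:
        return -1.0                       # simulated bad reading
    return 0.5 * volts


looks_wrong = lambda point, value: value < 0

# First run pauses at the first suspicious point.
partial, paused_at = run_with_resume(points, measure, should_pause=looks_wrong)

# The engineer debugs and fixes the setup, then resumes from the saved state.
setup["faulty"] = False
final, still_paused = run_with_resume(points, measure, completed=partial,
                                      should_pause=looks_wrong)
```

Because completed points are skipped on the second call, the hours of data collected before the pause are not repeated.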

Figure 4. Challenges and Solutions for Software Deployment in Lab Stations

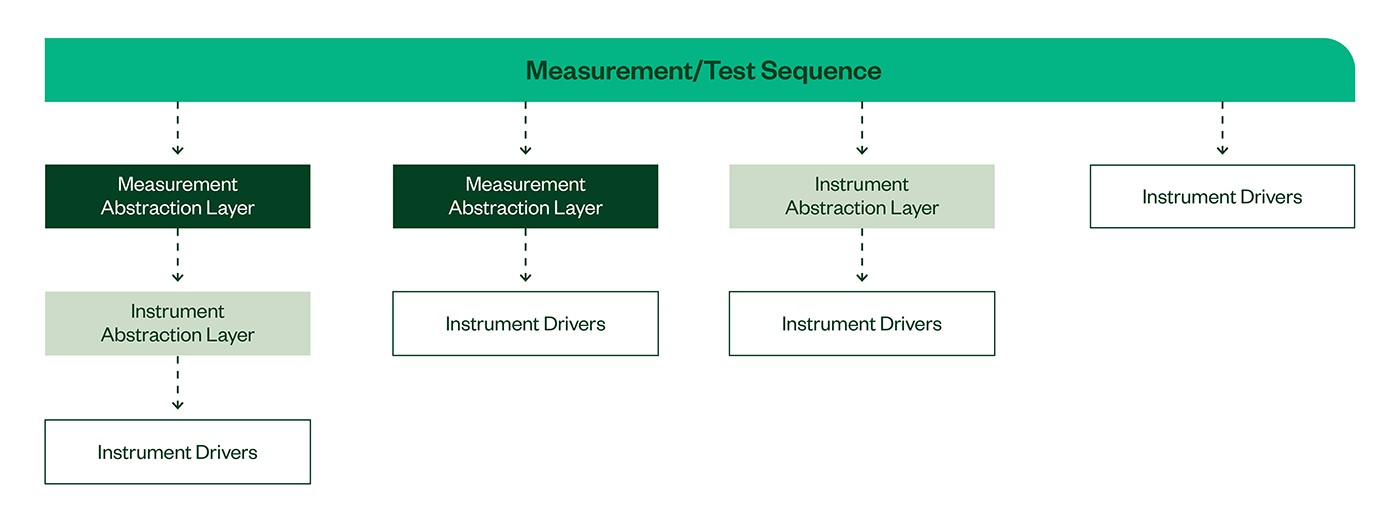

Design and Implementation of Abstraction Layers

Choosing the design of the abstraction layers in the validation software is one of the most important steps of the process. It determines the effort and time involved in accommodating the typical modifications that must be completed during validation. When abstraction layers are properly implemented, the most common modifications can be made by changing configurations rather than rewriting the code. Abstraction layers also increase the amount of software that can be reused across product lines. Typical abstraction layers include instrument, device communication, measurement, and test parameter.

When designing the abstraction layers, engineers must consider not just the cost of initial development but also the cost of maintaining the assets and the ease of debugging while keeping access open to the lower layers. They must develop an effective instrument standardization strategy along with people and product strategies.

Rather than overanalyzing the strategy and design decisions, engineers can start with the most obvious choices and expand the scope step by step.
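As a minimal sketch of an instrument abstraction layer, the Python below lets a measurement run against either of two power supply drivers, selected by configuration. Everything named here (`PowerSupply`, `VendorASupply`, `VendorBSupply`, the `supply_driver` key, and the simulated 100-ohm load) is hypothetical and stands in for real vendor drivers.

```python
from typing import Protocol


class PowerSupply(Protocol):
    """Instrument layer: what every supply driver must provide."""

    def set_voltage(self, volts: float) -> None: ...
    def read_current(self) -> float: ...


class VendorASupply:
    """Hypothetical driver that works in volts."""

    def __init__(self):
        self.volts = 0.0

    def set_voltage(self, volts):
        self.volts = volts

    def read_current(self):
        return self.volts / 100.0          # simulated 100-ohm load


class VendorBSupply:
    """Hypothetical driver that works in millivolts internally."""

    def __init__(self):
        self.millivolts = 0

    def set_voltage(self, volts):
        self.millivolts = round(volts * 1000)

    def read_current(self):
        return self.millivolts / 1000 / 100.0


DRIVERS = {"vendor_a": VendorASupply, "vendor_b": VendorBSupply}


def build_supply(config):
    """Pick the driver named in the configuration."""
    return DRIVERS[config["supply_driver"]]()


def measure_supply_current(supply: PowerSupply, volts: float) -> float:
    """Measurement layer: depends only on the PowerSupply interface."""
    supply.set_voltage(volts)
    return supply.read_current()


current_a = measure_supply_current(build_supply({"supply_driver": "vendor_a"}), 1.8)
current_b = measure_supply_current(build_supply({"supply_driver": "vendor_b"}), 1.8)
```

Swapping the instrument is a one-line configuration change, while `measure_supply_current` stays untouched. The concrete driver classes remain directly usable, which keeps the lower layer open for debugging.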

Figure 5. Abstraction Layers in a Test Sequence

Hardware

Hardware design and instrument connectivity are critical parts of the validation cycle. Engineers must spend considerable time to achieve the most reliable data for specification compliance.

Engineers use hardware boards to map device under test (DUT) pins to instruments, to host custom measurement or signal conditioning circuits specific to the DUT, to multiplex instruments for channel reuse and multisite capability, and so on. A lack of standardization leads each team to re-create hardware boards for each product, which is inefficient. A standardized hardware infrastructure can save a lot of hardware development and debugging time and costs.

The hardware board can be standardized to validate a family of devices with one base motherboard and a plug-in daughtercard for each device. This approach promotes reuse of the same motherboard across a family of products. Only a daughtercard needs to be designed for each device, which reduces hardware board design and debugging time.

Family board standardization encompasses the following:

- A standard set of connectors for all the instruments in the setup

- A standard measurement or signal conditioning circuit at the motherboard level

- Sufficient hooks and test points for system-level debugging

- Switches for multiplexing the instruments to a daughtercard with the flexibility to accommodate product variants

- Hooks to connect instruments close to the DUT on the daughtercard

- A socket in the DUT hardware board for easy replacement of the DUT as required

Advantages of a standard hardware infrastructure include the following:

- Standard instrument connections, which reduce the hardware design time and cost substantially

- Effective reuse of software measurement modules across the product family

- Quick turnaround time for DUT-specific daughtercards

- Option to connect instruments close to the DUT for sensitive measurements without redesigning or maintaining dead inventory for each device validation
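On the software side, the motherboard and daughtercard split can be mirrored by two small lookup tables. The sketch below is illustrative only: the connector names, instrument names, device names, and pin names are all invented for this example.

```python
# Fixed per product family: motherboard connector -> instrument channel.
MOTHERBOARD = {"J1": "smu_0", "J2": "smu_1", "J3": "scope_ch1", "J4": "dmm"}

# One entry per device: DUT pin -> motherboard connector used by its daughtercard.
DAUGHTERCARDS = {
    "device_a": {"VDD": "J1", "VOUT": "J3"},
    "device_b": {"VIN": "J1", "VREF": "J2", "OUT": "J4"},
}


def resolve_pin_map(device):
    """Return DUT pin -> instrument channel for one device's daughtercard."""
    card = DAUGHTERCARDS[device]
    unknown = sorted(set(card.values()) - set(MOTHERBOARD))
    if unknown:
        raise ValueError(f"daughtercard uses unknown connectors: {unknown}")
    return {pin: MOTHERBOARD[connector] for pin, connector in card.items()}


pin_map_b = resolve_pin_map("device_b")
```

Because measurement code addresses DUT pins rather than instruments, a new device in the family needs only a new daughtercard entry, which matches the reuse of software measurement modules listed above.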

Specifications Tracking

In the NPD process, many teams work in parallel based on the latest version of the product specifications that is shared. But during the many months of development, the specifications are typically updated multiple times. A tool that ensures all the teams’ work is based on the latest set of specifications is invaluable and saves a lot of wasted effort and last-minute rework.
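The core check such a tool performs can be sketched in a few lines of Python. The revision numbers, change summaries, and team names below are invented for illustration, and `stale_work` is a hypothetical helper, not part of any existing product.

```python
def stale_work(spec_history, team_revisions):
    """Return {team: revisions_behind} for teams not on the latest specification."""
    latest = max(spec_history)            # highest revision number
    return {team: latest - revision
            for team, revision in team_revisions.items()
            if revision < latest}


# Hypothetical data: revision -> change summary, and team -> revision in use.
spec_history = {1: "initial release", 2: "output tolerance tightened", 3: "current limit lowered"}
team_revisions = {"design": 3, "validation": 2, "production_test": 1}

behind = stale_work(spec_history, team_revisions)
```

In this example, `behind` reports that validation is one revision behind and production test is two, so both can be alerted before more work is built on outdated limits.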

Data Management and Reporting

Validation engineers report that they spend 40% or more of their time on data analysis and report generation. This work involves the following:

- Analyzing the data and checking it for compliance with the specifications

- Correlating it with the data generated in the other NPD stages

- Generating the necessary compliance reports and the data sheet

Given the amount of information generated across all the testing parameters, engineers can easily drown in the data they collect. Increased automation in data management and report generation can lead to substantial gains in productivity. This is possible by standardizing how specifications are written and how measurement data and its associated metadata are captured, stored, and analyzed. The benefits can range from simple—searching for and finding test data more readily—to more advanced—real-time specification compliance dashboards that are automatically updated as data is collected in the lab, and real-time alerts that are generated when the data collected looks anomalous or doesn’t agree with the simulation data. Features like automatic data sheet generation can also be added as part of these standardization efforts.
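A minimal sketch of what standardized specifications and measurement records make possible is shown below. The `Spec` fields, the record keys, and all numeric limits and values are assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spec:
    name: str
    unit: str
    minimum: float
    maximum: float


def compliance_report(specs, records):
    """Count measurements per specification and collect the out-of-limit ones."""
    by_name = {spec.name: spec for spec in specs}
    report = {}
    for record in records:
        spec = by_name[record["parameter"]]
        entry = report.setdefault(spec.name, {"count": 0, "failures": []})
        entry["count"] += 1
        if not (spec.minimum <= record["value"] <= spec.maximum):
            entry["failures"].append(record)   # metadata travels with the value
    return report


specs = [Spec("VOUT", "V", 1.75, 1.85), Spec("IQ", "A", 0.0, 0.015)]
records = [
    {"parameter": "VOUT", "value": 1.79, "device": "dut1", "temp_c": 25},
    {"parameter": "VOUT", "value": 1.88, "device": "dut2", "temp_c": 125},
    {"parameter": "IQ", "value": 0.011, "device": "dut1", "temp_c": 25},
]

report = compliance_report(specs, records)
```

Because each failing record carries its device and temperature, a dashboard or alert built on this report can point straight to the condition that violated the limit.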

Strengthening Your Validation Community

Best Practices Sharing and Faster Support

As more users adopt a validation framework and its tools, the value of user forums grows exponentially (network effect). The online forums and in-person meetings of a user community deliver huge value through faster support, best practices sharing, brainstorming on tool improvement, and discussion of the latest user contributions to the code repository.

Training and Certification

When a set of standard tools and processes is widely used by many engineers, the organization can afford to invest in well-designed training materials that improve the onboarding process and reduce the training burden for the rest of the team. And when engineers move from one team to another, standardization ensures they can contribute immediately in their new positions. Certification also motivates engineers to learn the material well, which results in high-quality code that follows established best practices.

Using well-designed starting templates generally results in better code.

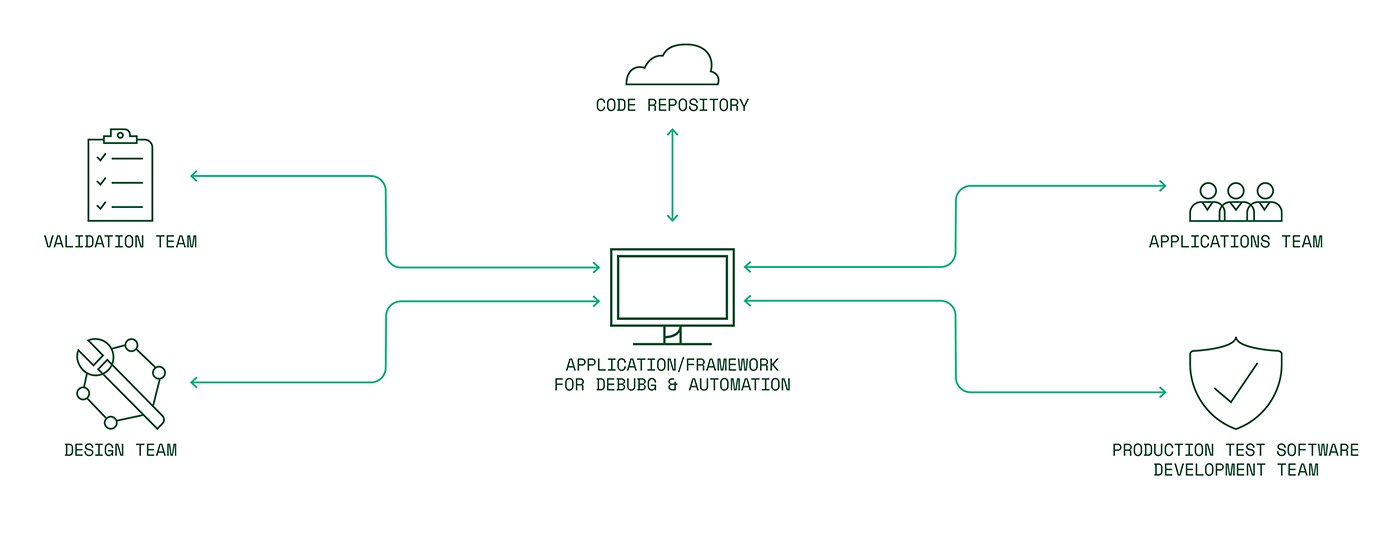

Collaboration with Other Disciplines

Test Methodology and Code Sharing

Many tests overlap among the simulation, validation, and production processes. But because the hardware and software tools used during these stages are different, the tests have traditionally been coded independently in separate environments. This prevents code sharing or reuse among the disciplines. By standardizing the tools throughout these stages, code reuse can be practiced by designers (involved in bring-up), validation engineers, reliability and standards compliance test engineers, applications engineers, and production test development engineers. This saves the time spent on documenting, communicating, and coding the same procedure in all the different stages.

Figure 6. Code Reuse Opportunities Created by the Overlap of Test Teams

Benefits from Characterization to Production

Many of the tests used in production are a subset of the tests used during characterization. Code portability between these two test environments saves significant engineering effort. Seamlessly transferring code from characterization to production can save as much as 80% of test program development time. This involves some fundamental changes to the way drivers are called and used in characterization. However, this complexity can be hidden behind the scenes, so validation engineers can proceed with their work as usual.

Correlation of Data

Data is generated in the NPD process in design simulation, design validation, and test program development. The data generated across these stages is correlated to ensure that the device is behaving as expected. Unfortunately, this process is typically hampered by data being stored in a variety of formats. Many manual steps are necessary to map corresponding data to each other and apply a format that can be compared and correlated. A tool that can map the data at each stage to the corresponding specifications and enable correlation across stages with minimal manual steps improves efficiency in the NPD process.
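A small Python sketch of that mapping step is shown below. The parameter names, units, values, and the 2% agreement tolerance are invented for illustration. In practice the mapping tables would come from the specification source rather than being hard-coded.

```python
# Hypothetical mappings from each stage's naming to a shared specification ID.
SIM_TO_SPEC = {"VOUT_nom": "VOUT", "IQ_typ": "IQ"}
LAB_TO_SPEC = {"vout": ("VOUT", 1e-3), "iq": ("IQ", 1e-6)}   # scale to V and A


def normalize_sim(sim_values):
    """Simulation data: dict keyed by simulator names, already in V and A."""
    return {SIM_TO_SPEC[name]: value for name, value in sim_values.items()}


def normalize_lab(lab_rows):
    """Lab data: list of rows in mV and uA, converted to V and A."""
    normalized = {}
    for row in lab_rows:
        spec_id, scale = LAB_TO_SPEC[row["param"]]
        normalized[spec_id] = row["value"] * scale
    return normalized


def correlate(sim, lab, tolerance=0.02):
    """Compare the two stages per specification ID using relative difference."""
    report = {}
    for spec_id in sorted(sim.keys() & lab.keys()):
        relative_diff = (lab[spec_id] - sim[spec_id]) / sim[spec_id]
        report[spec_id] = {"relative_diff": relative_diff,
                           "agrees": abs(relative_diff) <= tolerance}
    return report


sim = normalize_sim({"VOUT_nom": 1.800, "IQ_typ": 0.0120})
lab = normalize_lab([{"param": "vout", "value": 1795.0},
                     {"param": "iq", "value": 12500.0}])
correlation = correlate(sim, lab)
```

Once both stages are expressed against the same specification IDs and units, the comparison itself is a short loop. With these made-up numbers, `VOUT` agrees within tolerance and `IQ` is flagged for review.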

Collaboration across Product Lines

When engineers managing different product lines in an organization adopt a standard, open, and scalable lab validation infrastructure to build their code on, they no longer have to start from scratch. An effective solution created in one corner of the organization can quickly be adopted by other teams. The central code repository and the validation community’s knowledge sharing site offer great value that continues to grow over time for an organization.

Data Mining and Leveraging Machine Learning (ML) and Artificial Intelligence (AI)

When a standard method for capturing and storing data has been defined, the data can be mined for insights using data mining techniques. This data can also be used to train ML models for applications that may not be evident today. One key strategy of effective test organizations is to ensure their data assets are ready for upcoming powerful data mining and ML applications. In just a few years, an AI-based assistant could prompt a validation engineer with a message like “the anomaly in the waveforms that you just captured looks like what was seen in project XYZ, and the following was the root cause identified in that case.”
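The kind of lookup behind such a prompt can be approximated, very loosely, by a nearest-neighbor search over stored anomaly records. The sketch below is far simpler than a trained ML model, and every detail in it is an invented placeholder: the feature pairs (assumed here to be overshoot fraction and ringing cycles), the project names, and the root causes.

```python
import math

# Hypothetical archive of past anomalies, stored in one standard format.
PAST_CASES = [
    {"project": "project_a", "features": (0.12, 3.0),
     "root_cause": "placeholder cause A"},
    {"project": "project_b", "features": (0.45, 1.0),
     "root_cause": "placeholder cause B"},
]


def most_similar_case(features, cases):
    """Return the stored case whose feature vector is closest (Euclidean distance)."""
    return min(cases, key=lambda case: math.dist(features, case["features"]))


match = most_similar_case((0.40, 1.2), PAST_CASES)
print(f"Looks like {match['project']}: {match['root_cause']}")
```

The lookup only works because every project stored its anomalies with the same feature definitions, which is the data readiness the paragraph above argues for. A real system would also need to scale the features so that one does not dominate the distance.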

Modernize Your Lab with Help from NI + Soliton

NI and Soliton have decades of experience working together to help semiconductor customers improve efficiency and accelerate time to market. With more than 100 LabVIEW and TestStand engineers and more than 20 NI technical awards, Soliton provides highly differentiated engineering services that complement NI products and solutions. As an NI Semiconductor Specialty Partner for the Modern Lab, STS, and SystemLink™, Soliton specializes in developing purpose-built platform solutions for post-silicon validation that can substantially improve engineering productivity through powerful tools and standardization.

Taking the Next Step

- Learn how to modernize your lab with a validation framework architecture

- Learn more about Soliton’s services for the Modern Lab

- Contact Soliton

- Learn more about validation lab innovations

©2021 NATIONAL INSTRUMENTS. ALL RIGHTS RESERVED. NATIONAL INSTRUMENTS, NI, NI.COM, LABVIEW, AND NI TESTSTAND ARE TRADEMARKS OF NATIONAL INSTRUMENTS CORPORATION. OTHER PRODUCT AND COMPANY NAMES LISTED ARE TRADEMARKS OR TRADE NAMES OF THEIR RESPECTIVE COMPANIES.