ASAMize Your Measurement Data

When talking about measurement data, the main focus is usually on data stored in files. Such files are produced by a variety of measuring systems, which store the information in different formats. These formats range from simple comma-separated values (CSV) files to binary formats tailored to the needs of specific measurement hardware, and from structured XML documents to binary channel dumps, or combinations and variations of these. Each file format serves a specific purpose, such as easier data exchange with spreadsheet tools (CSV) or high-speed data streaming (TDMS). In addition, the lack of suitable standards for certain use cases creates the need for customized file formats.

The multiplicity of file formats becomes a challenge once data analysis, report generation, visualization, or similar tools must process the data files and store them in different formats to enable cross-product data interchange. With X file formats and Y tools, the complexity of the problem is on the order of X × Y: every additional file format requires Y new readers, and every additional tool requires X, so the effort grows with the product of the two rather than with their sum. The variety of formats, the velocity at which the data is acquired and stored, and the resulting data volume have been identified by National Instruments as the Big Analog Data™ problem.

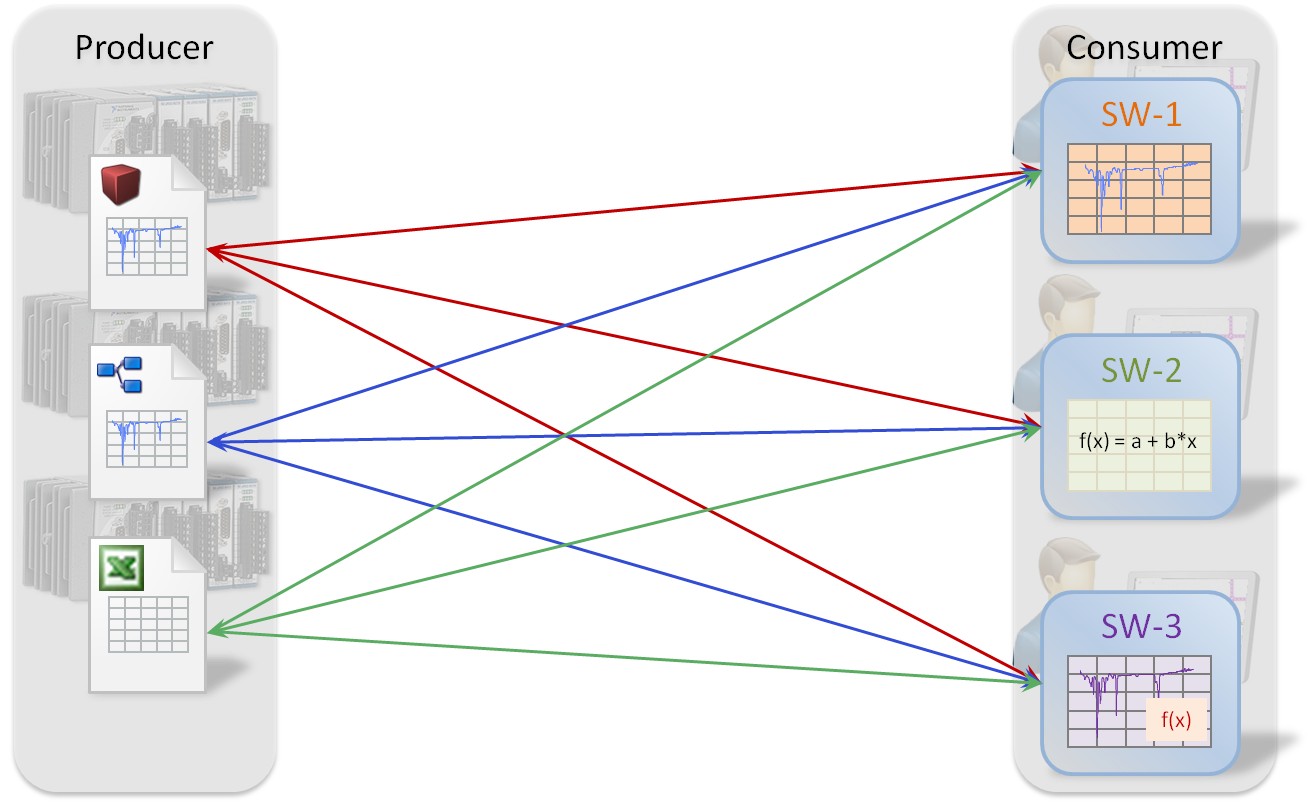

Figure 1: X file formats and Y reader tools increase the complexity of analyzing and reporting on data.

The key to solving this problem is to reduce the degrees of freedom while keeping the tool chain intact. The variety of file formats is unlikely to disappear: there will always be a data producer with a specific need that no standard file format can fulfill. So instead of forcing all data producers to write to the same file format, the desirable solution is to introduce one intermediate data format (or data model) that can accommodate the content of the X different file formats. In other words, a format to which all other data formats can be mapped or converted.

Using a standard application programming interface (API) for reading the intermediate data reduces the complexity of the system to the order of X + 1: one mapping for each of the X file formats, plus the single common API, which many data consumers will already have implemented.
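The difference between the two approaches is easiest to see by counting the pieces of software that have to be written. The following minimal sketch assumes a simple cost model in which each format-to-tool reader, each format-to-model mapping, and each tool-side API reader counts as one unit of effort; the function names are hypothetical and only serve the illustration.

```python
# Hypothetical cost model: each reader, mapping, or API implementation = 1 unit.

def direct_readers(x_formats: int, y_tools: int) -> int:
    """Point-to-point: every tool needs its own reader for every file format."""
    return x_formats * y_tools


def via_intermediate_model(x_formats: int, y_tools: int,
                           tools_have_api: bool = True) -> int:
    """One mapping per format into the intermediate model, plus the reading side.

    If the tools already implement the standard API, the reading side is the
    single shared API (X + 1); otherwise each tool needs one API reader (X + Y).
    """
    reading_side = 1 if tools_have_api else y_tools
    return x_formats + reading_side


for x, y in [(5, 4), (10, 8), (20, 16)]:
    print(f"X={x:2d} Y={y:2d}  direct={direct_readers(x, y):3d}  "
          f"X+Y={via_intermediate_model(x, y, tools_have_api=False):2d}  "
          f"X+1={via_intermediate_model(x, y):2d}")
```

Doubling both the number of formats and the number of tools quadruples the point-to-point count (20, 80, 320) but only doubles the count with an intermediate model (9, 18, 36 without existing API support; 6, 11, 21 when the tools already speak the API).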

With a common technology for mapping measurement data files to an intermediate data model, a Big Analog Data™ solution is born that can help increase the visibility and value of the data.

National Instruments has introduced such a solution: the DataPlugin technology maps measurement files to a suitable data model, TDM. Indexing those files with the SystemLink TDM DataFinder Module allows them to be retrieved through parametric and full-text search, and the indexed data is made accessible through a standard interface: ASAM ODS.

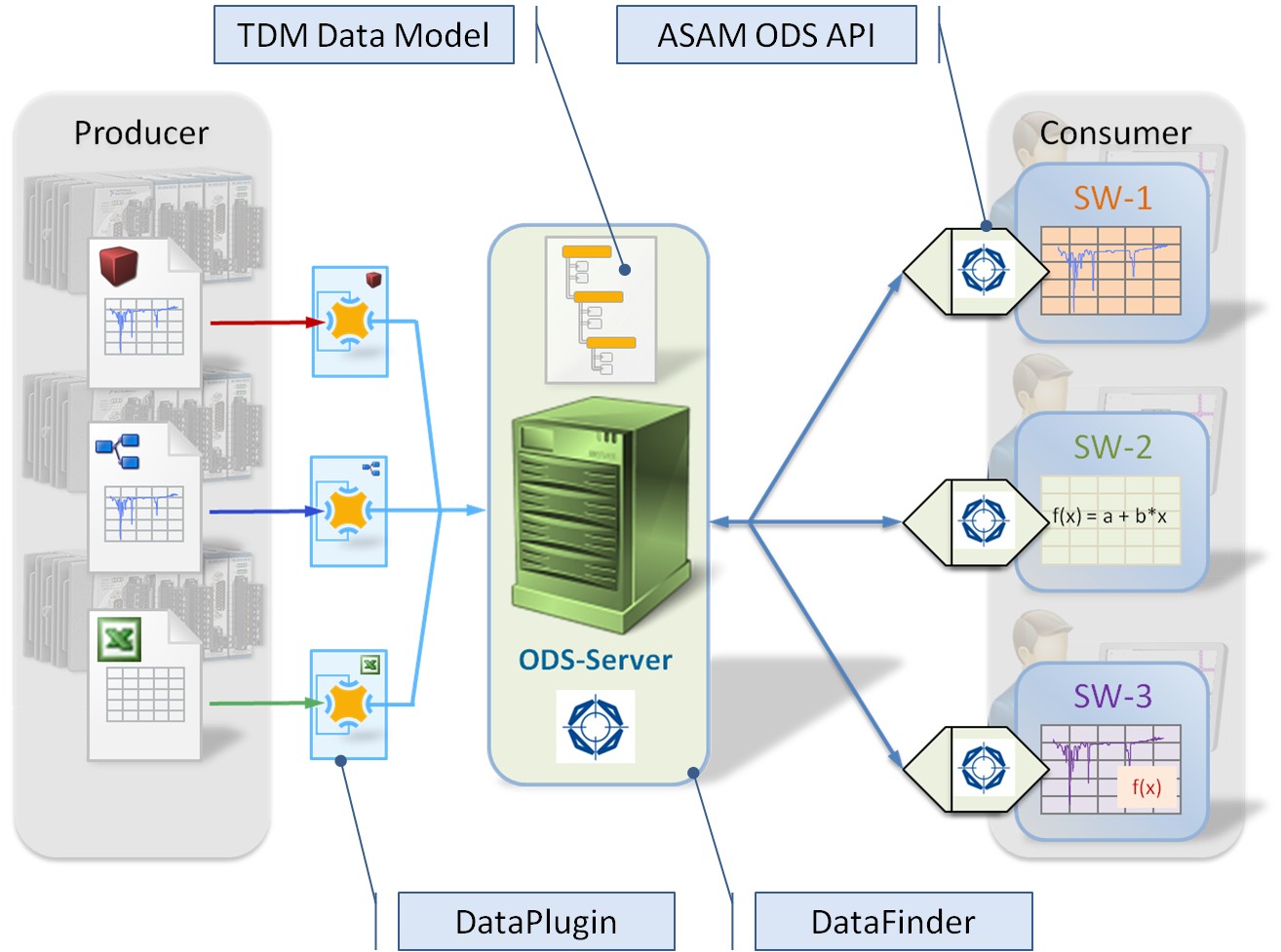

Figure 2: With a technology called DataPlugins, any file format can be mapped to the TDM model, so that a single tool can be used to access and analyze various data sources.

Let’s explore the individual components of this solution in more detail:

TDM Data Model

The TDM data model is a simple yet flexible data model for measurement data. It is derived from the ASAM ODS base model but focuses on minimum complexity.

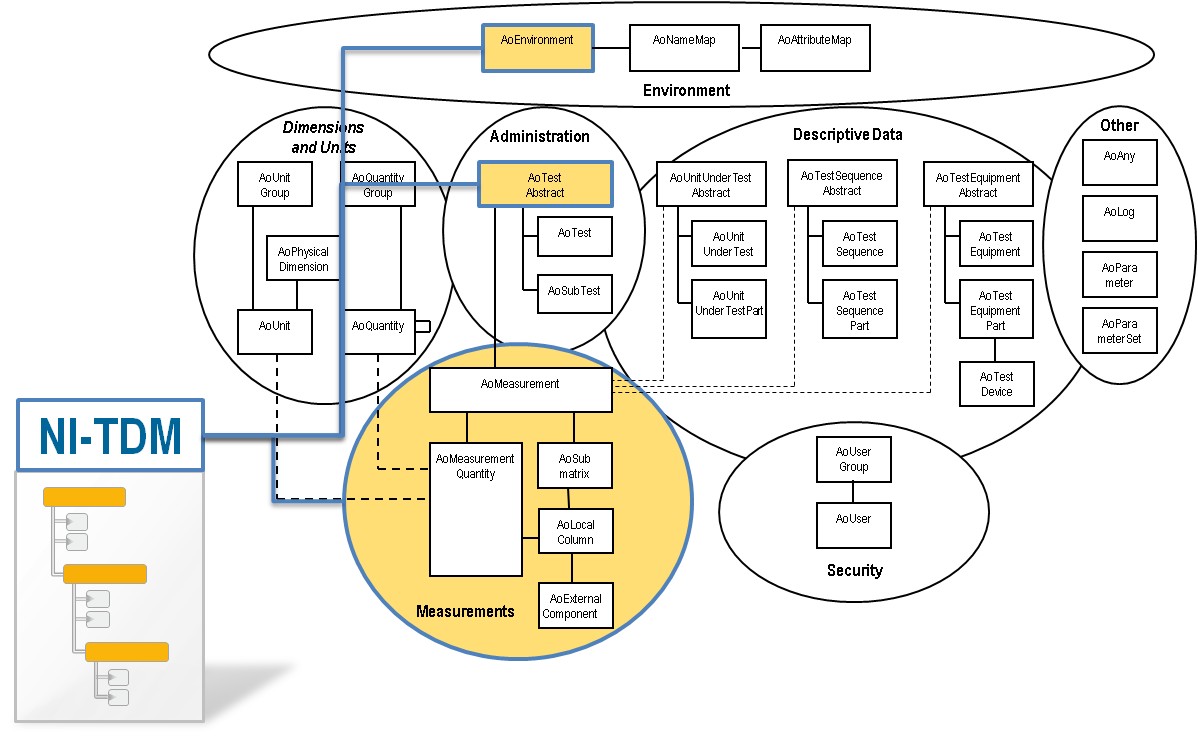

Figure 3: A depiction of the complete ASAM ODS base model and the three levels that the TDM model focuses on to minimize complexity.

The three-level hierarchy of the TDM model lets you organize your channels into groups and organize those groups under a single root. On each of the three levels you can store an unlimited number of custom-defined properties. You can learn more about the benefits of the TDM model and its internal structure.
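To make the three levels concrete, here is a minimal in-memory sketch of the root/group/channel hierarchy, with a free-form property dictionary on each level. It is an illustration of the structure only, not an NI API: the class names, the `find_channel` helper, and all property names and values in the example are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Channel:
    """Lowest level: one series of values plus its own custom properties."""
    name: str
    values: List[float] = field(default_factory=list)
    properties: Dict[str, Any] = field(default_factory=dict)


@dataclass
class ChannelGroup:
    """Middle level: a named collection of channels with custom properties."""
    name: str
    channels: List[Channel] = field(default_factory=list)
    properties: Dict[str, Any] = field(default_factory=dict)


@dataclass
class Root:
    """Top level: a single root holding all channel groups."""
    name: str
    groups: List[ChannelGroup] = field(default_factory=list)
    properties: Dict[str, Any] = field(default_factory=dict)

    def find_channel(self, group_name: str, channel_name: str) -> Optional[Channel]:
        """Walk root -> group -> channel and return the first match, or None."""
        for group in self.groups:
            if group.name == group_name:
                for channel in group.channels:
                    if channel.name == channel_name:
                        return channel
        return None


# Hypothetical example: one test run with two channels.
root = Root(
    name="Engine test",
    properties={"operator": "J. Doe", "test_bench": "B3"},
    groups=[
        ChannelGroup(
            name="Run 1",
            properties={"ambient_temperature_C": 21.5},
            channels=[
                Channel("Time", [0.0, 0.1, 0.2], {"unit": "s"}),
                Channel("Speed", [800.0, 950.0, 1100.0], {"unit": "rpm"}),
            ],
        )
    ],
)

print(root.find_channel("Run 1", "Speed").properties["unit"])  # rpm
```

Because every level carries its own property dictionary, metadata can be attached exactly where it belongs: the operator to the whole file, the ambient temperature to one run, and the unit to one channel.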

DataPlugins

A DataPlugin is the programmatic description of how to map any custom file format to the TDM data model. Most DataPlugins are written in VBScript because of the existing APIs for accessing text, binary, or spreadsheet files, but APIs are also available for C++ and LabVIEW. NI DIAdem also offers wizards for creating your own DataPlugins. Before writing your own DataPlugin, search ni.com/dataplugins to find out whether a DataPlugin for your file format already exists and is available as a free download.
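The core job of a DataPlugin, deciding which parts of a custom file become root, group, and channel data, can be illustrated outside of the real DataPlugin APIs. The sketch below is written in Python rather than VBScript and does not use any NI interface; it assumes a hypothetical text format in which leading `#key=value` lines hold file-level metadata, followed by a CSV header of the form `name [unit]` and numeric rows. The function name `read_custom_file` and the fixed group name `"Data"` are likewise hypothetical.

```python
import csv
import io
import re


def read_custom_file(text: str) -> dict:
    """Map a hypothetical '#key=value' + CSV format onto root/group/channel dicts."""
    root = {"properties": {}, "groups": []}
    lines = text.splitlines()

    # Leading '#key=value' lines carry file-level metadata -> root properties.
    body_start = 0
    for i, line in enumerate(lines):
        if not line.startswith("#"):
            break
        key, _, value = line[1:].partition("=")
        root["properties"][key.strip()] = value.strip()
        body_start = i + 1

    reader = csv.reader(io.StringIO("\n".join(lines[body_start:])))
    header = next(reader)

    # Each 'name [unit]' column becomes a channel with a 'unit' property.
    channels = []
    for column in header:
        match = re.fullmatch(r"\s*(.*?)\s*\[(.*)\]\s*", column)
        name, unit = (match.group(1), match.group(2)) if match else (column.strip(), "")
        channels.append({"name": name, "properties": {"unit": unit}, "values": []})

    # Numeric rows are distributed column by column into the channels.
    for row in reader:
        if not row:
            continue
        for channel, cell in zip(channels, row):
            channel["values"].append(float(cell))

    # This simple format has no notion of groups, so all channels go into one.
    root["groups"].append({"name": "Data", "properties": {}, "channels": channels})
    return root


sample = "#operator=J. Doe\n#test_id=42\ntime [s],speed [rpm]\n0.0,800\n0.1,950\n"
tdm = read_custom_file(sample)
print(tdm["groups"][0]["channels"][1])
```

Once a file has been mapped this way, any consumer that understands the root/group/channel structure can read it without knowing anything about the original format, which is exactly the decoupling the intermediate model is meant to provide.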

ASAM ODS

ASAM stands for “Association for the Standardization of Automation and Measuring Systems” and ODS for “Open Data Services”.

ASAM ODS is an international industry standard, originating in the automotive sector, for storing measurement data. National Instruments, one of the co-founders of ASAM and an active member of the ASAM ODS working group, has brought the ASAM ODS standard to the measurement industry beyond the automotive world. ASAM ODS defines how the data is stored, for instance in an Oracle or mixed-mode server, and specifies a CORBA-based API for data access. Its major benefit is the specification of a so-called base data model, which adds semantic (meta) information to the data.

The TDM data model is derived from the ASAM ODS base model. In the context of ASAM ODS, the TDM+ data model additionally offers a custom-defined hierarchy of arbitrary depth, derived from the existing (meta) data, and an extensible unit catalog, while leaving the TDM data model (and the measurement files) unchanged.
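The idea of a hierarchy "derived from the existing (meta) data" can be pictured as grouping files by an ordered list of property names, without touching the files themselves. The sketch below is only an analogy for that concept, not how TDM+ is implemented in DataFinder; the function `build_hierarchy`, the `"<undefined>"` placeholder, and the property names `project` and `vehicle` are all hypothetical.

```python
from typing import Any, Dict, List


def build_hierarchy(files: List[Dict[str, Any]], levels: List[str]) -> Dict[Any, Any]:
    """Nest file names by the values of the given properties, in order.

    The input file records are only read, never modified, so the same files
    can be arranged into any number of different hierarchies.
    """
    if not levels:
        raise ValueError("at least one hierarchy level is required")
    tree: Dict[Any, Any] = {}
    for f in files:
        node = tree
        # Intermediate levels become nested dictionaries.
        for key in levels[:-1]:
            node = node.setdefault(f["properties"].get(key, "<undefined>"), {})
        # The last level holds the list of matching file names.
        leaf = f["properties"].get(levels[-1], "<undefined>")
        node.setdefault(leaf, []).append(f["name"])
    return tree


# Hypothetical file-level metadata, e.g. root properties of indexed files.
files = [
    {"name": "run1.tdm", "properties": {"project": "P1", "vehicle": "V-A"}},
    {"name": "run2.tdm", "properties": {"project": "P1", "vehicle": "V-B"}},
    {"name": "run3.tdm", "properties": {"project": "P2", "vehicle": "V-A"}},
]

print(build_hierarchy(files, ["project", "vehicle"]))
print(build_hierarchy(files, ["vehicle", "project"]))
```

Swapping the order of the levels yields a different view (by project, then vehicle, or by vehicle, then project) over the same unchanged files, and adding a third property name would add a third level of depth.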

Both the CORBA API and the TDM+ data model are part of the DataFinder Server Edition.

DataFinder

NI SystemLink™ TDM DataFinder Module is centralized data management software for handling the large amounts of data generated during testing and simulation. It works out of the box by indexing test files either on a server or across a network, with no need for IT support or database knowledge. The data index builds and scales automatically as your test files change.
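Conceptually, an index of this kind stores the properties of each file so that queries can run against the index instead of opening every file. The following minimal sketch shows the two query styles mentioned earlier, parametric search (property, operator, value) and full-text search over property values. It is a toy model for illustration, not the DataFinder query API; `build_index`, `parametric_search`, `full_text_search`, and the example property names are hypothetical.

```python
import operator
from typing import Any, Dict, List, Tuple

# Comparison operators allowed in a parametric condition (hypothetical syntax).
OPS = {"=": operator.eq, "!=": operator.ne, "<": operator.lt,
       "<=": operator.le, ">": operator.gt, ">=": operator.ge}


def build_index(files: Dict[str, Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Copy each file's properties into one searchable index entry."""
    return [{"file": name, "properties": dict(props)} for name, props in files.items()]


def parametric_search(index: List[Dict[str, Any]],
                      conditions: List[Tuple[str, str, Any]]) -> List[str]:
    """Return files whose properties satisfy all (name, op, value) conditions."""
    hits = []
    for entry in index:
        props = entry["properties"]
        if all(name in props and OPS[op](props[name], value)
               for name, op, value in conditions):
            hits.append(entry["file"])
    return hits


def full_text_search(index: List[Dict[str, Any]], term: str) -> List[str]:
    """Return files where any property value contains the term (case-insensitive)."""
    term = term.lower()
    return [entry["file"] for entry in index
            if any(term in str(v).lower() for v in entry["properties"].values())]


# Hypothetical indexed files and their properties.
index = build_index({
    "run1.tdm": {"operator": "J. Doe", "max_speed": 3200, "comment": "cold start"},
    "run2.tdm": {"operator": "A. Roe", "max_speed": 4100, "comment": "hot soak"},
})

print(parametric_search(index, [("max_speed", ">", 3500)]))  # ['run2.tdm']
print(full_text_search(index, "cold"))                       # ['run1.tdm']
```

In this toy version the index has to be rebuilt by hand when files change; keeping it current automatically as files are added or modified is what the text above describes DataFinder doing for you.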

DataFinder provides access to the indexed data either through the original files, using DIAdem or the LabVIEW DataFinder Toolkit, or through an ASAM ODS CORBA API.

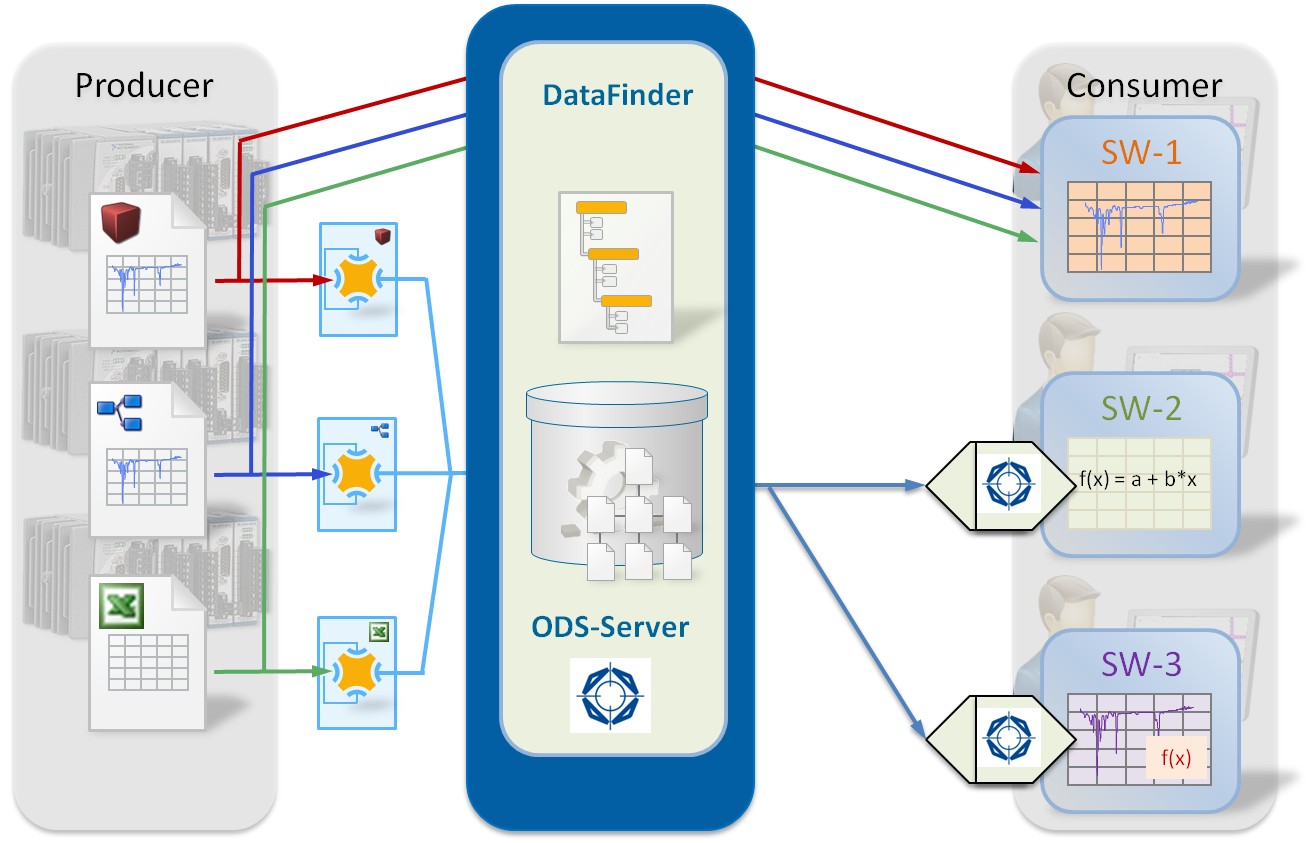

Figure 4: Providing both a file-based interface and an ASAM ODS interface, DataFinder easily integrates into existing processes and adds value and visibility to the data.

Additional Resources

- Learn more about the ASAM ODS Standard

- Learn more about setting up an ASAM ODS Server with NI DataFinder