3D Imaging with NI LabVIEW

Overview

Contents

- Introduction to 3D Imaging

- Stereo Vision Functions in Vision Development Module

- How Stereo Vision Works

- Stereo Vision Applications

- Summary and Next Steps

Introduction to 3D Imaging

There are several ways to calculate depth information using 2D camera sensors or other optical sensing technologies. Below are brief descriptions of the most common approaches:

| 3D Imaging Technology | Description |
| --- | --- |
| Stereo Vision | Two cameras are mounted with different perspectives of an object, and calibration techniques are used to align pixel information between the cameras and extract depth information. This is most similar to how our brains work to visually measure distance. |
| Laser Triangulation | A laser line is projected onto an object, and a height profile is generated by acquiring an image with a camera and measuring the displacement of the laser line across a single slice of the object. The laser and camera scan through multiple slices of the object surface to eventually generate a 3D image. |
| Projected Light | A known light pattern is projected onto an object, and depth information is calculated from the way the pattern is distorted around the object. |
| Time of Flight Sensors | A light source is synchronized with an image sensor to calculate distance based on the time between the emitted pulse of light and the light reflected back onto the sensor. |
| Lidar | Lasers are used to survey an area by measuring reflections of light and generating a 3D profile to map out surface characteristics and detect objects. |
| Optical Coherence Tomography (OCT) | A high-resolution imaging technique that uses near-infrared light to calculate depth information by measuring reflections of light through the cross section of an object. This is most commonly used in medical imaging applications because of its non-invasive ability to penetrate multiple layers of biological tissue. |
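
As a small illustration of the time-of-flight principle described in the table, the sketch below converts a measured round-trip time into a distance. It assumes the light travels to the object and back along the same path, so the one-way distance is half the round-trip path; the function name and the sample timing value are illustrative and do not come from any particular sensor.

```python
# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance(round_trip_seconds):
    """Distance to a surface given the time between the emitted pulse
    and the detected reflection (hypothetical helper for illustration)."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice: out to the object and back.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


# Illustrative value: a reflection detected 20 nanoseconds after the pulse.
distance_m = tof_distance(20e-9)
print(f"distance: {distance_m:.3f} m")
```

A 20 ns round trip corresponds to roughly 3 m, which shows why time-of-flight sensors need very fine timing resolution to resolve small depth differences.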

Stereo Vision Functions in Vision Development Module

Starting with LabVIEW 2012, the Vision Development Module includes binocular stereo vision algorithms that calculate depth information from a pair of cameras. By using calibration information between the two cameras, these algorithms can generate depth images, providing richer data to identify objects, detect defects, and guide robotic arms on how to move and respond.

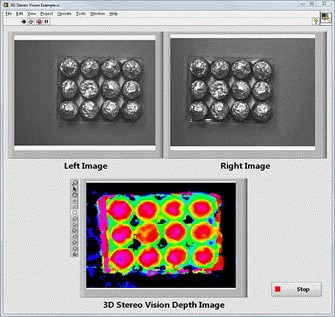

A binocular stereo vision system uses exactly two cameras. Ideally, the two cameras are separated by a short distance and are mounted almost parallel to one another. In the example shown in Figure 1, a box of spherical chocolates is used to demonstrate the benefits of 3D imaging for automated inspection. After the two cameras are calibrated to determine their 3D spatial relationship, such as separation and tilt, two different images are acquired to locate potential defects in the chocolates. Using the 3D Stereo Vision algorithms in the Vision Development Module, the two images can be combined to calculate depth information and visualize a depth image.

Figure 1: Example of depth image generated from left and right images using Stereo Vision

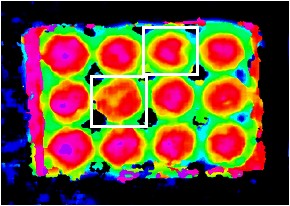

While it’s less apparent in the 2-dimensional images, the 3D depth image shows that two of the chocolates are not spherical enough to pass the high-quality standards. The image in Figure 2 shows a white box around the defects that have been identified.

Figure 2: 3D Depth Image with White Boxes Around the Defective Chocolates

One important consideration when using stereo vision is that the computation of the disparity is based on locating a feature on a line of the left image and on the same line of the right image. To locate and differentiate features, the images need to have sufficient texture. To obtain better results, you may need to add texture by illuminating the scene with structured lighting.
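
The line-by-line search described above can be sketched with a simple block-matching cost. This is a minimal illustration, not the algorithm used by the Vision Development Module: it assumes rectified images (so a feature appears on the same row in both images), uses a sum of absolute differences (SAD) as the matching cost, and all function names, window sizes, and pixel values are hypothetical.

```python
def sad_costs(left_row, right_row, x_left, half_window=2, max_disparity=16):
    """Return the SAD cost for each candidate disparity d = 0, 1, 2, ...

    A window centred on x_left in the left row is compared with windows
    shifted d pixels to the left in the right row.
    """
    lo, hi = x_left - half_window, x_left + half_window + 1
    if lo < 0 or hi > len(left_row):
        raise ValueError("window falls outside the row")
    patch = left_row[lo:hi]
    costs = []
    for d in range(max_disparity + 1):
        if lo - d < 0:
            break  # candidate window would leave the image
        candidate = right_row[lo - d:hi - d]
        costs.append(sum(abs(p - q) for p, q in zip(patch, candidate)))
    return costs


def best_disparity(costs):
    """Index of the lowest cost (the first one wins if there are ties)."""
    return min(range(len(costs)), key=costs.__getitem__)


# Textured row: the same pattern appears 3 pixels further left in the right image.
left = [10, 10, 10, 10, 10, 10, 40, 90, 30, 70, 10, 10, 10, 10]
right = left[3:] + [10, 10, 10]
textured_costs = sad_costs(left, right, x_left=7)

# Textureless row: every candidate window looks identical.
flat = [10] * 14
flat_costs = sad_costs(flat, flat, x_left=7)

print(best_disparity(textured_costs), textured_costs)
print(flat_costs)
```

On the textured row the cost has a single clear minimum at the true shift, while on the flat row every candidate has the same cost, so the match is ambiguous. This is the reason sufficient texture, or added structured lighting, matters.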

Finally, binocular stereo vision can be used to calculate the 3D coordinates (X, Y, Z) of points on the surface of an object being inspected. A set of such points is often referred to as a point cloud or cloud of points. Point clouds are very useful for visualizing the 3D shape of objects and can also be used by other 3D analysis software. The AQSense 3D Shape Analysis Library (SAL3D), for example, is available in the LabVIEW Tools Network and uses a cloud of points for further image processing and visualization.
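
As a rough sketch of how a point cloud could be derived from a disparity map, the example below applies the simplified relationships given in the "How Stereo Vision Works" section (depth = f * b/disparity and uL = f * X/Z). Several things here are assumptions made for illustration: the focal length is expressed in pixels, pixel coordinates are measured from a principal point (cx, cy), the Y coordinate is treated the same way as X, and a disparity of zero or less marks a pixel with no valid match. The function name is hypothetical and is not part of the Vision Development Module.

```python
def disparity_to_point_cloud(disparity_map, f, b, cx, cy):
    """Convert a disparity map (list of rows) to a list of (X, Y, Z) points.

    f: focal length in pixels (assumed), b: baseline in metres,
    cx, cy: principal point in pixels (assumed).
    """
    points = []
    for row, line in enumerate(disparity_map):
        for col, d in enumerate(line):
            if d <= 0:
                continue  # no valid match at this pixel
            Z = f * b / d            # depth from disparity
            X = (col - cx) * Z / f   # invert uL = f * X / Z
            Y = (row - cy) * Z / f   # assumed analogous relation for Y
            points.append((X, Y, Z))
    return points


# Tiny illustrative disparity map (values in pixels); 0 means "no match".
disparity_map = [
    [0, 40],
    [20, 40],
]
cloud = disparity_to_point_cloud(disparity_map, f=800.0, b=0.10, cx=0.0, cy=0.0)
for point in cloud:
    print(point)
```

Larger disparities map to points closer to the cameras, so the pixel with a disparity of 20 ends up twice as far away as the pixels with a disparity of 40.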

How Stereo Vision Works

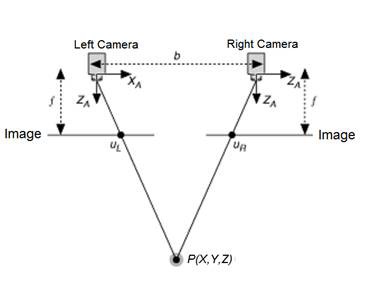

To better illustrate how binocular stereo vision works, Figure 3 shows the diagram of a simplified stereo vision setup, where both cameras are mounted perfectly parallel to each other, and have the exact same focal length.

Figure 3: Simplified Stereo Vision System

The variables in Figure 3 are:

- b is the baseline, or distance between the two cameras

- f is the focal length of a camera

- XA is the X-axis of a camera

- ZA is the optical axis of a camera

- P is a real-world point defined by the coordinates X, Y, and Z

- uL is the projection of the real-world point P in an image acquired by the left camera

- uR is the projection of the real-world point P in an image acquired by the right camera

Since the two cameras are separated by distance “b”, both cameras view the same real-world point P in a different location on the 2-dimensional images acquired. The X-coordinates of points uL and uR are given by:

uL = f * X/Z

and

uR = f * (X-b)/Z

The distance between those two projected points is known as the “disparity”. We can use the disparity value to calculate depth information, which is the distance between the real-world point “P” and the stereo vision system.

disparity = uL - uR = f * b/Z

depth = f * b/disparity
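
The relationships above can be checked numerically with a short sketch. The focal length, baseline, and point coordinates are illustrative values chosen for this example (with f expressed in pixels and distances in metres), and the function names are hypothetical.

```python
def projections(X, Z, f, b):
    """Return (uL, uR) for a point at lateral position X and depth Z,
    using uL = f * X / Z and uR = f * (X - b) / Z."""
    uL = f * X / Z
    uR = f * (X - b) / Z
    return uL, uR


def depth_from_disparity(disparity, f, b):
    """Invert disparity = f * b / Z to recover the depth Z."""
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return f * b / disparity


# Illustrative setup: 800-pixel focal length, 10 cm baseline,
# point located 25 cm to the side and 2 m in front of the left camera.
f, b = 800.0, 0.10
uL, uR = projections(X=0.25, Z=2.0, f=f, b=b)
disparity = uL - uR
depth = depth_from_disparity(disparity, f, b)
print(f"uL={uL:.1f} px, uR={uR:.1f} px, disparity={disparity:.1f} px, depth={depth:.2f} m")
```

The recovered depth matches the Z used to generate the projections. Because depth is inversely proportional to disparity, halving the disparity doubles the estimated depth, so distant points are more sensitive to small matching errors.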

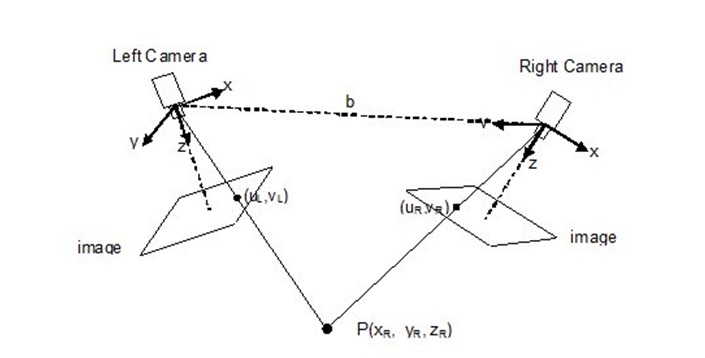

In reality, an actual stereo vision setup is more complex and looks more like the typical system shown in Figure 4, but all of the same fundamental principles still apply.

Figure 4: Typical Stereo Vision System

The ideal assumptions made for the simplified stereo vision system cannot be made for real-world stereo vision applications. Even the best cameras and lenses introduce some level of distortion to the acquired image, so a typical stereo vision system also requires calibration to compensate. The calibration process involves acquiring images of a calibration grid at different angles to calculate the image distortion as well as the exact spatial relationship between the two cameras. Figure 5 shows the calibration grid included with the Vision Development Module.

Figure 5: A calibration grid is included as a PDF file with the Vision Development Module

The Vision Development Module includes functions and LabVIEW examples that walk you through the stereo vision calibration process to generate several calibration matrices that are used in further computations to calculate disparity and depth information. You can then visualize 3D images as shown earlier in Figure 1, as well as perform different types of analysis for defect detection, object tracking, and motion control.

Stereo Vision Applications

Stereo vision systems are best suited for applications in which the camera settings and locations are fixed and won’t experience large disturbances. Common applications include navigation, industrial robotics, automated inspection, and surveillance.

| Application | Description |
| --- | --- |
| Navigation | Autonomous vehicles use depth information to measure the size and distance of obstacles for accurate path planning and obstacle avoidance. Stereo vision systems can provide a rich set of 3D information for navigation applications, and can perform well even in changing light conditions. |
| Industrial Robotics | A stereo vision system is useful in robotic industrial automation of tasks such as bin picking or crate handling. A bin-picking application requires a robot arm to pick a specific object from a container that holds several different kinds of parts. A stereo vision system can provide an inexpensive way to obtain 3D information and determine which parts are free to be grasped. It can also provide precise locations for individual products in a crate and enable applications in which a robot arm removes objects from a pallet and moves them to another pallet or process. |
| Automated Inspection | 3D information is also very useful for ensuring high quality in automated inspection applications. You can use stereo vision to detect defects that are very difficult to identify with only 2-dimensional images. Ensuring the presence of pills in a blister pack, inspecting the shape of bottles, and looking for bent pins on a connector are all examples of automated inspection where depth information has a high impact on ensuring quality. |
| Surveillance | Stereo vision systems are also good for tracking applications because they are robust in the presence of lighting variations and shadows. A stereo vision system can accurately provide 3D information for tracked objects, which can be used to detect abnormal events, such as trespassing individuals or abandoned baggage. Stereo vision systems can also be used to enhance the accuracy of identification systems like facial recognition or other biometrics. |

Summary and Next Steps

The stereo vision features in the LabVIEW Vision Development Module bring new 3D vision capabilities to engineers in a variety of industries and application areas. Through the openness of LabVIEW, engineers can also use third-party hardware and software 3D vision tools for additional advanced capabilities, including the SICK 3D Ranger Camera for laser triangulation imaging and the AQSense 3D Shape Analysis Library for 3D image processing. The LabVIEW Vision Development Module makes 3D vision accessible to engineers in one graphical development environment.

Next Steps:

- Download and evaluate Vision Development Module

- Learn more about Vision Development Module

- Acquire 3D and MultiScan Data from SICK 3D Cameras

- Access certified, third-party add-ons with the LabVIEW Tools Network
