Own Test Architecture to Meet Ever-Growing Test Coverage

Overview

Automotive consumers worldwide increasingly base their purchase decisions on a vehicle’s user interface, or human-machine interface (HMI). An in-vehicle infotainment (IVI) or car multimedia system, together with a digital cockpit, heavily influences how drivers and passengers feel about a vehicle. HMI-related automotive electronics account for 70 percent of all in-car code, and a steady stream of new features, such as augmented reality head-up displays and over-the-air programming, makes it harder to validate systems and production infrastructure quickly. Successful automotive OEMs and Tier 1 suppliers have transformed their test organizations into strategic assets to differentiate their products and compete in the market.

Contents

- Optimize Test Organization to Remain Competitive

- Why Universal and Superset Testers Are Not the Answer

- People, Process, and Technology

- How Industry Convergence Affects Test Architecture

- Conclusion

- Additional Resources

Optimize Test Organization to Remain Competitive

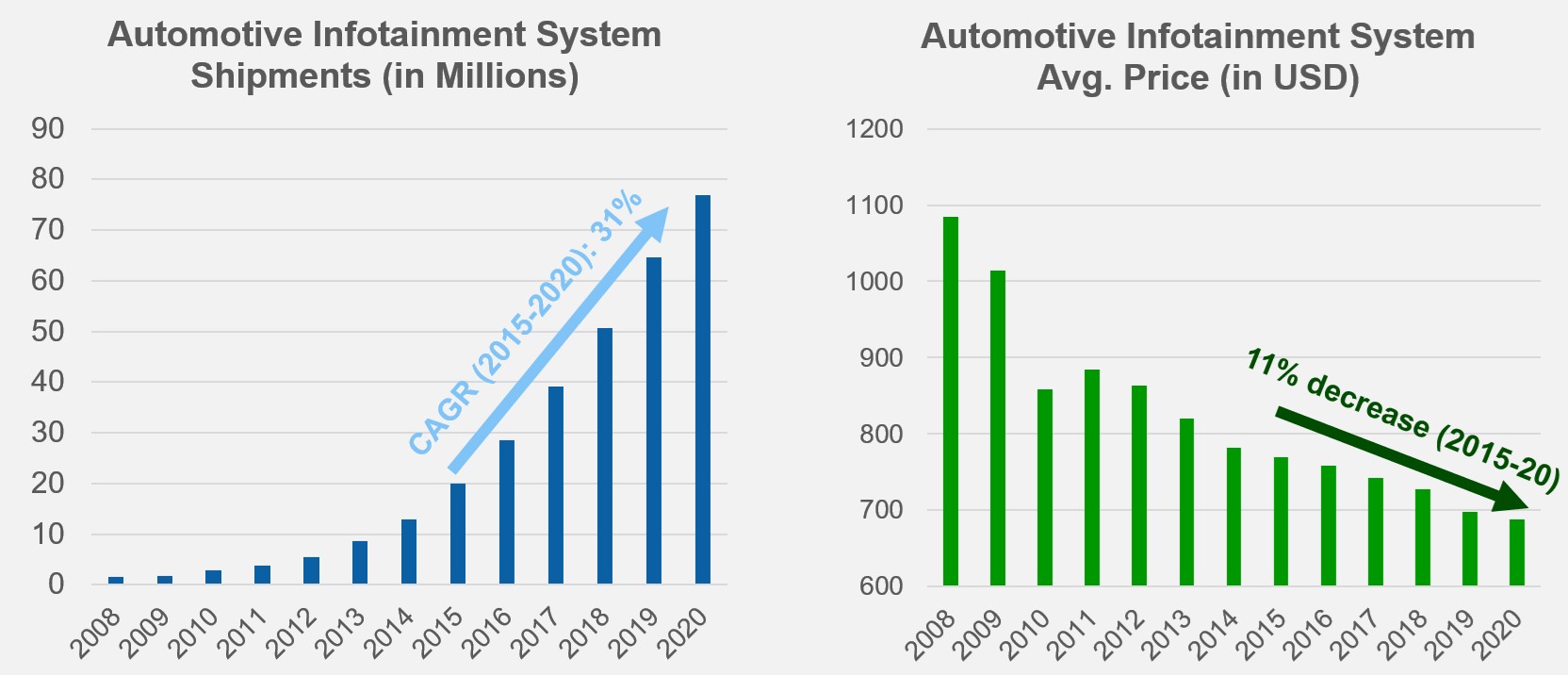

The IVI and car multimedia market is growing: Infotainment systems are popular across all vehicle types. Noncore players such as electronics and software companies are entering this market to capitalize on that popularity, which drives down the average selling price (ASP) of infotainment systems. At the same time, an HMI system must integrate with other vehicle subsystems and maintain its quality to stay competitive, so test organizations must become more operationally efficient without sacrificing quality.

Figure 1: Lowering test cost is crucial to face fierce competition and decreased ASP [1].

Why Universal and Superset Testers Are Not the Answer

Universal or superset testers are alluring, but they rarely succeed. Without clearly defined pain points, you might focus only on technology and treat standardization as a technical project for a single client, disappointing leadership and internal stakeholders. Unless multiple groups, internal customers, and end users adopt the standard, you cannot achieve the expected results and return on investment (ROI). Universal or superset testers can also be too rigid and overdesigned: Because they do not scale beyond the products they were built to cover, they fail to span product life cycles.

An automated test architecture, by contrast, provides a guiding principle and strategy that align test organizations so they can reuse test assets and components and allocate resources dynamically. Because it spans the product life cycle, it reduces quality costs and positively impacts company financials by getting better products to market faster. Historically, product testing has played a support function during development and manufacturing and has been treated as simply a necessary cost center; owning the test architecture is the very first step to elevate the test engineering function from a cost center to a strategic asset.

Standardization has its own life cycle, so it is crucial to treat a test architecture or test standard as a product and align its roadmap with internal stakeholders. This is how test engineering organizations become strategic assets: by creating standard test platforms, developing valuable test-based intellectual property, delivering a more productive workforce while lowering operational costs, and aligning with business objectives by continually contributing to better product margins, quality, and time to market.

Figure 2: About 70 percent of standardization efforts fail, most commonly in the yellow-arrow scenario, in which teams try to standardize but stagnate and keep operating the same way [2].

People, Process, and Technology

To standardize on a test architecture, examine your people, process, and technology (or technical) elements:

- People—To build proficient, effective teams, establish a center of excellence (CoE). Adopting a standard is the most challenging and crucial step, and a CoE works as a core team that owns and promotes the internal standard or test architecture across multiple groups and the product development cycle. The CoE should seek input and buy-in from internal end users such as test engineers and utilize test standard expertise to manage external engineering resources and speed tester development.

- Process—Treating the internal test standard or architecture as a product is key to leading standardization efforts and to improving productivity through faster test code development and higher test asset utilization. Standardization is not a one-time technical exercise; its life cycle continues as the standard is realigned with evolving end-user requirements and new technology. Because test strategy and standardization have such a large impact, it is critical to develop a roadmap first. A roadmap uses business metrics to drive change and highlight early successes, which helps secure senior management sponsorship and leads to adoption and optimization. Learn how Mazda planned an electrical and electronic automated test equipment roadmap.

- Technology (Technical)—Introduce an inclusive financial model to compare and quantify results; it leads to successful buy-in and lets you measure ROI throughout standardization efforts. For example, information technology (IT) originally performed support functions such as standard computing, data storage, and routine task automation. Through total cost of ownership (TCO) analysis, IT has become a strategic asset that drives smarter investment decisions and, in leading organizations, streamlines critical line-of-business processes and helps executives make real-time core business decisions. Likewise, applying TCO to test standardization efforts uncovers hidden costs that you can then optimize as the internal test standard is adopted.
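As a starting point for such a financial model, the following Python sketch sums up-front and recurring costs for two hypothetical tester strategies over the same period, with an optional discount rate. The cost categories, class name, and figures are illustrative assumptions, not data from this paper; a real TCO model would use the cost items your organization actually tracks.

```python
from dataclasses import dataclass


@dataclass
class TesterCostProfile:
    """Hypothetical cost inputs for one tester strategy (placeholder values)."""
    name: str
    capital: float               # up-front hardware and software purchase
    development: float           # engineering effort to build the tester
    annual_operating: float      # maintenance, calibration, support, floor space
    annual_redevelopment: float  # yearly rework as products and requirements change


def total_cost_of_ownership(profile: TesterCostProfile, years: int,
                            discount_rate: float = 0.0) -> float:
    """Up-front costs plus recurring costs over `years`, optionally discounted."""
    upfront = profile.capital + profile.development
    recurring = 0.0
    for year in range(1, years + 1):
        yearly = profile.annual_operating + profile.annual_redevelopment
        recurring += yearly / (1.0 + discount_rate) ** year
    return upfront + recurring


if __name__ == "__main__":
    # Illustrative numbers only; replace them with your organization's data.
    custom = TesterCostProfile("per-product custom tester", 100_000, 150_000, 20_000, 60_000)
    standard = TesterCostProfile("standard test architecture", 150_000, 200_000, 20_000, 10_000)
    for p in (custom, standard):
        print(f"{p.name}: {total_cost_of_ownership(p, years=5):,.0f}")
```

With these placeholder inputs, the standardized approach costs more up front but less over five years because it needs less yearly rework; the model is only as good as the hidden costs you remember to include.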

Figure 3: Best-in-class organizations improve integration by focusing on people, process, and technology changes [3].

How Industry Convergence Affects Test Architecture

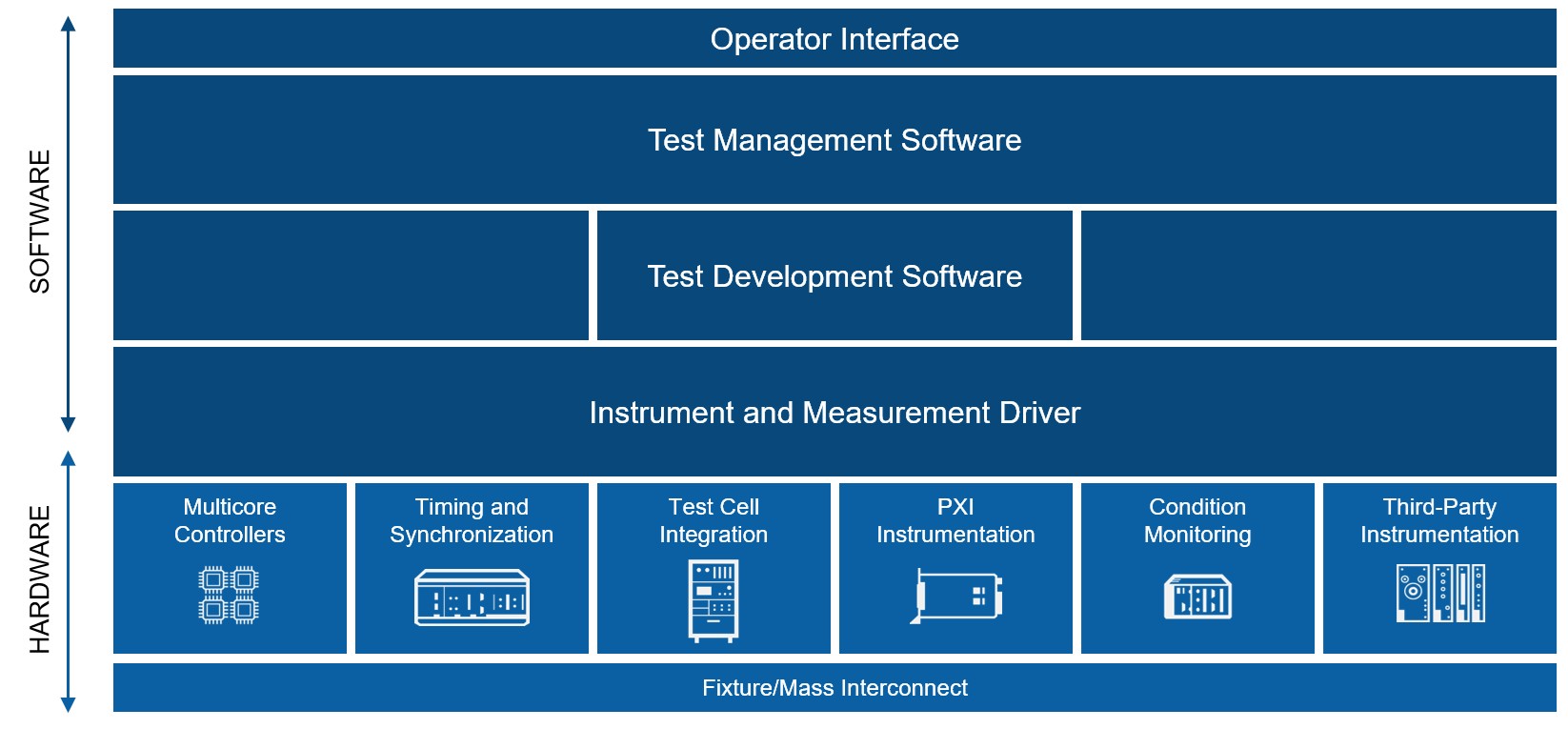

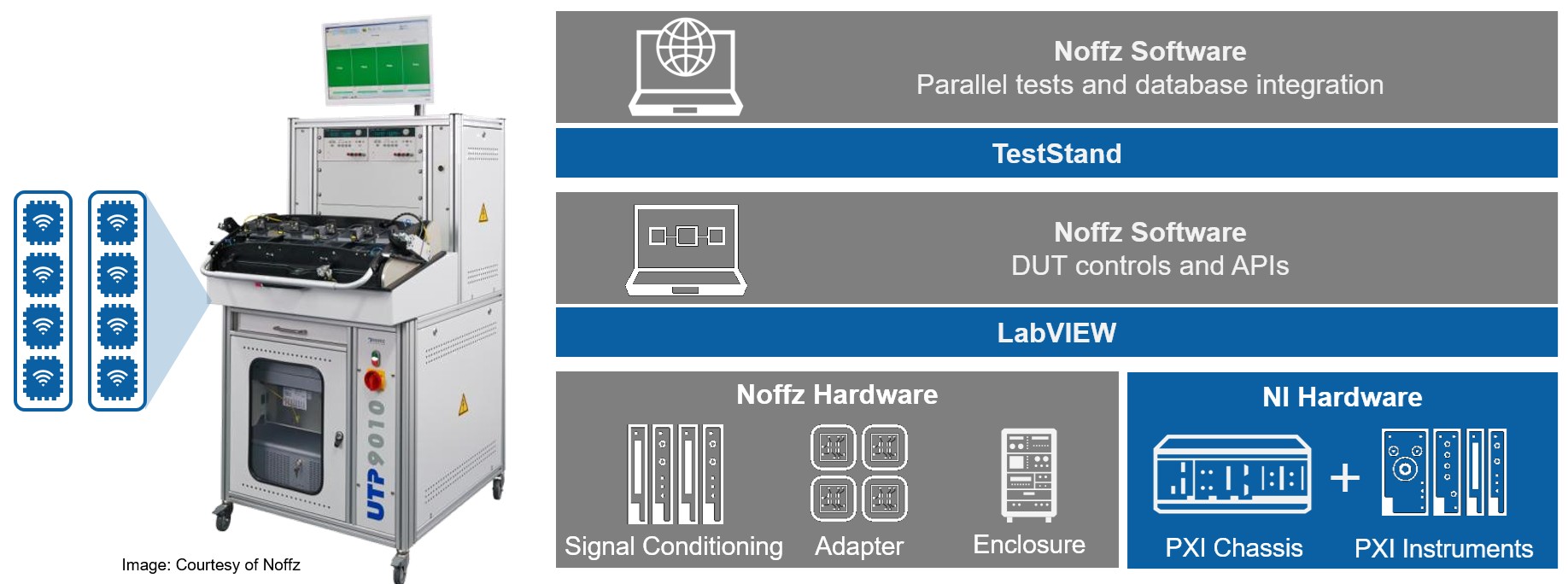

According to the 2014 Gartner report Industry Convergence—The Digital Industrial Revolution, “industry convergence represents the most fundamental growth opportunity for organizations” [4]. Test organizations can learn from other industries and pool resources to accelerate innovation. IVI and car multimedia systems show increasing technology convergence, for example in wireless standards, and best-in-class automotive companies use test architectures proven in the semiconductor and mobile industries.

A vendor-agnostic, software-defined automated test architecture offers a high degree of flexibility in software, instrumentation, and overall test system design. It separates the software layer from the hardware layer and divides the software into two distinct layers: test management and test development. The test management layer, or test executive, keeps sequencing, execution, and reporting responsibilities separate from the test code in the test development layer. The test executive can deploy and execute test sequences or scenarios and call almost any piece of test code, whether it is written in LabVIEW, C++, or Python, so you can easily unify current and legacy test code in one test sequence. It can also run tests in parallel, generate reports, and log results to a variety of databases. TestStand is an out-of-the-box, industry-leading test executive. On the hardware side, a flexible, modular architecture accommodates a high I/O mix, and PXI has become a dominant player and the fastest-growing modular automated test standard in the world.
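The layering described above can be sketched in a few lines of Python. This is not the TestStand API or its execution model; it is a hypothetical, minimal illustration in which code modules (the test development layer) are plain functions and a small executive (the test management layer) sequences them, checks limits, runs several DUT sockets in parallel, and logs results to a database. The measurement functions return simulated values, and an in-memory SQLite database stands in for a production results database.

```python
import sqlite3
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List, Tuple

Row = Tuple[int, str, float, bool]  # (socket, step name, measured value, passed)


# Test development layer: plain code modules. These hypothetical functions
# return simulated values; real modules would read instruments.
def measure_supply_voltage(socket: int) -> float:
    return 12.0 + 0.01 * socket


def measure_fm_snr_db(socket: int) -> float:
    return 45.0 - socket


# Test management layer: sequencing, limit checking, parallel execution, logging.
@dataclass
class Step:
    name: str
    module: Callable[[int], float]  # any callable code module
    low: float
    high: float


def run_sequence(steps: List[Step], socket: int) -> List[Row]:
    """Run every step for one DUT socket and check each value against its limits."""
    rows: List[Row] = []
    for step in steps:
        value = step.module(socket)
        rows.append((socket, step.name, value, step.low <= value <= step.high))
    return rows


def open_results_db() -> sqlite3.Connection:
    """Create an in-memory results database (stand-in for a production database)."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE results (socket INTEGER, step TEXT, value REAL, passed INTEGER)")
    return db


def run_parallel(steps: List[Step], sockets: List[int], db: sqlite3.Connection) -> List[Row]:
    """Test several sockets concurrently, then log all results from the calling thread."""
    with ThreadPoolExecutor(max_workers=max(1, len(sockets))) as pool:
        per_socket = list(pool.map(lambda s: run_sequence(steps, s), sockets))
    rows = [row for socket_rows in per_socket for row in socket_rows]
    db.executemany("INSERT INTO results VALUES (?, ?, ?, ?)", rows)
    db.commit()
    return rows


if __name__ == "__main__":
    sequence = [
        Step("Supply voltage", measure_supply_voltage, 11.5, 12.5),
        Step("FM SNR", measure_fm_snr_db, 42.5, 60.0),
    ]
    db = open_results_db()
    run_parallel(sequence, [0, 1, 2, 3], db)
    for socket, step in db.execute("SELECT socket, step FROM results WHERE passed = 0"):
        print(f"socket {socket} failed {step}")
```

Because the executive only needs a callable per step, replacing a simulated module with one that wraps legacy code does not change the sequencing, parallelism, or logging logic; that separation is the point of the two-layer design.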

Figure 4: Software-Defined Automated Test Architecture

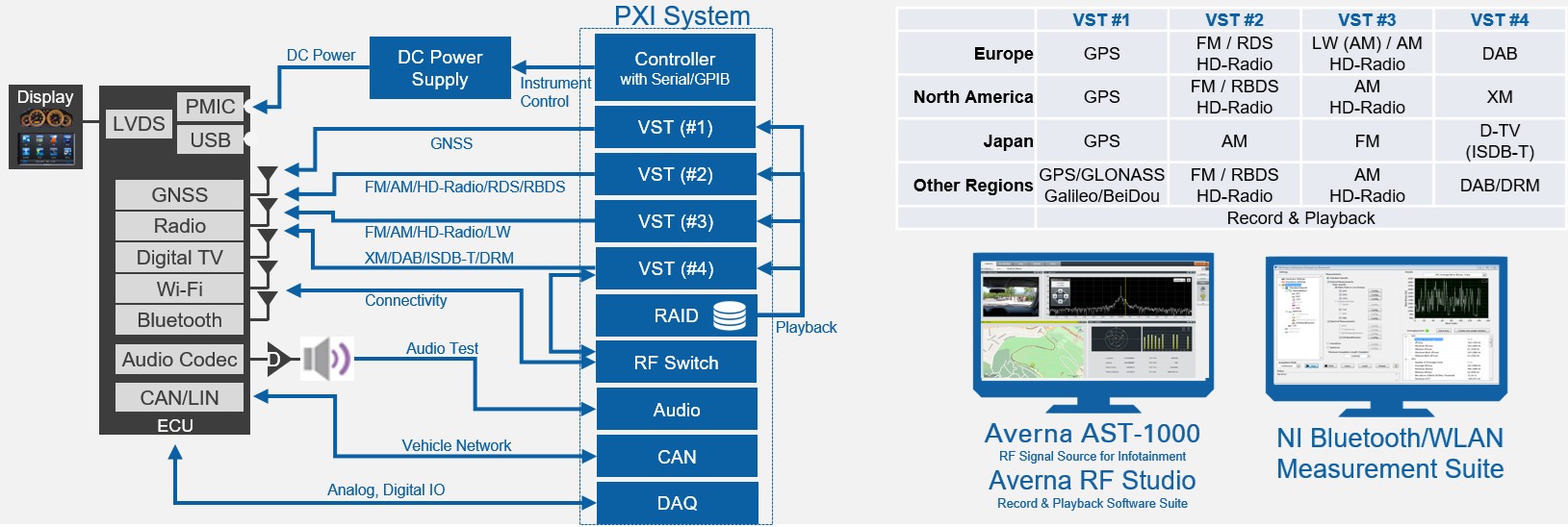

Once you define your standard test architecture, assess market solutions that align with it so you can keep pace with rapidly changing technology. For example, to build an RF test rig for IVI validation, you need multiple RF generators for radio, navigation, multimedia, and connectivity testing. With modular PXI-based RF instrumentation and third-party signal generation software such as Averna AST-1000, you can develop and manage a flexible IVI RF test rig in less time. A flexible, modular test architecture also lets you add further automation, signal simulation, and cost optimization: Add RF switch modules to support multiple devices under test (DUTs), and use Redundant Array of Independent Disks (RAID) record-and-playback to replicate real-world RF signals acquired in the field.
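To illustrate how an RF switch module lets one signal source serve several DUTs, the following Python sketch steps a simulated 1-to-N switch through its channels while a simulated generator plays back a recorded waveform. All classes, the waveform name, and the DUT check are hypothetical stand-ins, not Averna or NI driver calls; a real rig would also account for switch settling time and path loss.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class SimulatedSignalGenerator:
    """Hypothetical stand-in for an RF source playing back a recorded waveform."""
    active_waveform: Optional[str] = None

    def play(self, waveform: str) -> None:
        self.active_waveform = waveform


@dataclass
class SimulatedRfSwitch:
    """Hypothetical 1-to-N RF switch that routes one input to one output at a time."""
    channels: int
    connected: Optional[int] = None

    def connect(self, channel: int) -> None:
        if not 0 <= channel < self.channels:
            raise ValueError(f"channel {channel} is out of range")
        self.connected = channel


DutCheck = Callable[[int, Optional[str]], bool]


def run_on_all_duts(generator: SimulatedSignalGenerator, switch: SimulatedRfSwitch,
                    waveform: str, dut_check: DutCheck) -> Dict[int, bool]:
    """Share one generator across every DUT by stepping the switch through its channels."""
    generator.play(waveform)
    results: Dict[int, bool] = {}
    for channel in range(switch.channels):
        switch.connect(channel)
        results[channel] = dut_check(channel, generator.active_waveform)
    return results


def simulated_gnss_lock(channel: int, waveform: Optional[str]) -> bool:
    """Hypothetical DUT-side check; DUT 2 is simulated as failing to lock."""
    return waveform == "gnss_field_recording" and channel != 2


if __name__ == "__main__":
    generator = SimulatedSignalGenerator()
    switch = SimulatedRfSwitch(channels=4)
    print(run_on_all_duts(generator, switch, "gnss_field_recording", simulated_gnss_lock))
```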

Figure 5: RF Test Rig System Diagram

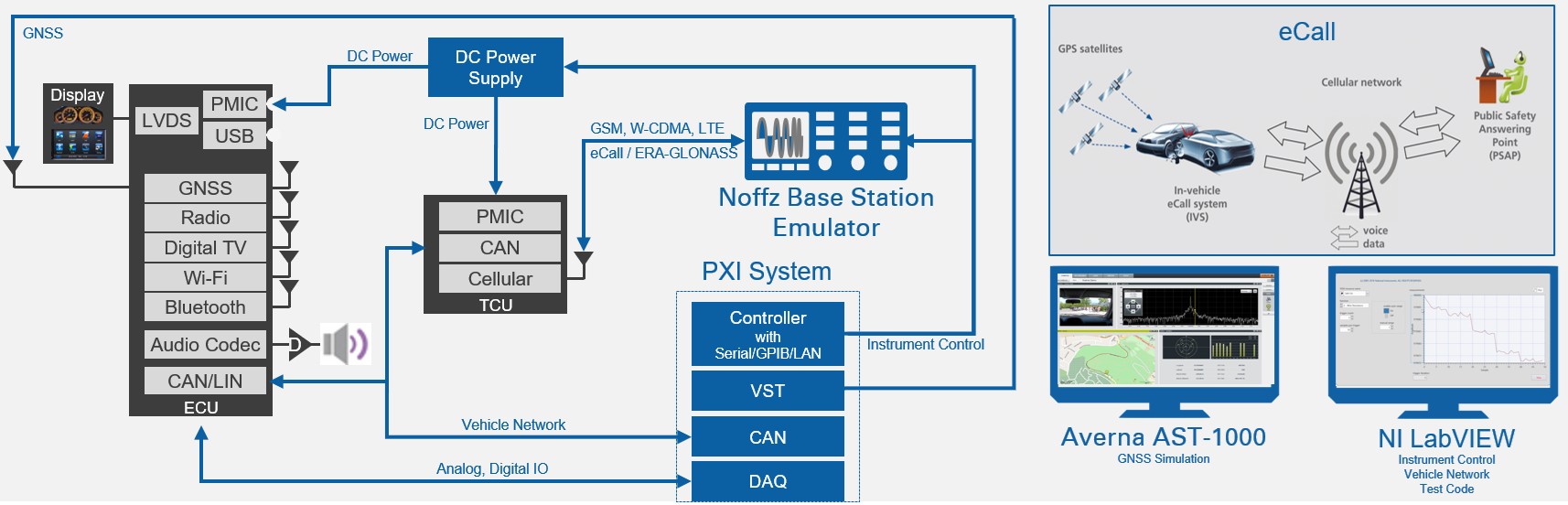

If you need to add telematics or V2X emergency call (eCall) test requirements, you can incorporate a base station emulator such as NOFFZ sUTP 5017 BSE into the test rig, as shown in the figure below.
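One common way a software-defined architecture absorbs a new requirement such as eCall is a hardware abstraction layer: test steps look up instruments by role instead of calling a specific driver, so adding a base station emulator registers one more role without touching existing steps. The following Python sketch assumes a hypothetical `Instrument` interface and simulated instruments; it does not use any NOFFZ or NI driver API.

```python
from typing import Dict, List, Protocol


class Instrument(Protocol):
    """Minimal interface each instrument wrapper implements (hypothetical)."""
    name: str

    def initialize(self) -> None: ...

    def close(self) -> None: ...


class InstrumentRig:
    """Maps test roles (for example, 'rf_source' or 'bse') to instrument wrappers."""

    def __init__(self) -> None:
        self._by_role: Dict[str, Instrument] = {}

    def add(self, role: str, instrument: Instrument) -> None:
        if role in self._by_role:
            raise ValueError(f"role {role!r} is already assigned")
        instrument.initialize()
        self._by_role[role] = instrument

    def get(self, role: str) -> Instrument:
        return self._by_role[role]

    def roles(self) -> List[str]:
        return sorted(self._by_role)

    def close_all(self) -> None:
        for instrument in self._by_role.values():
            instrument.close()


class SimulatedInstrument:
    """Hypothetical placeholder; a real wrapper would call the vendor's driver."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.ready = False

    def initialize(self) -> None:
        self.ready = True

    def close(self) -> None:
        self.ready = False


if __name__ == "__main__":
    rig = InstrumentRig()
    rig.add("rf_source", SimulatedInstrument("PXI RF signal generator"))
    # Adding telematics coverage registers one more role; steps that
    # look up "rf_source" are unchanged.
    rig.add("bse", SimulatedInstrument("base station emulator"))
    print(rig.roles())
    rig.close_all()
```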

Figure 6: Telematics Test Rig System Diagram

Because you own the test architecture, you can customize the test system to your requirements, and because the partner ecosystem builds on the same software-defined automated test architecture, you can quickly meet basic test specifications. A test system based on the NI PXI platform and its software-defined automated test architecture should be able to meet the following specifications for testing IVI or car multimedia systems.

| Test Components | Specifications |
| --- | --- |
| Radio* | AM/FM, DAB/DAB+/DMB, DRM, HD Radio (IBOC), RDS/RBDS (1 channel or 3 channels), TMC-RDS, SiriusXM |
| Navigation* | BeiDou, Galileo, GLONASS, GPS, QZSS |
| Video* | ATSC, CMMB, DTMB, DVB-T, DVB-T2, ISDB-T |
| Connectivity/Cellular* | Bluetooth, Wi-Fi, WCDMA, LTE, 5G NR |
| Record and Playback* | Capture of real-world RF spectra such as GNSS and radio |
| Audio | Distortion (THD, SINAD, IMD), octave, level, quality |
| Base Station Emulation** | MIMO, handover for LTE and Cat 16, GSM, WCDMA, eCall |

*Provided by Averna AST-1000 and RF Studio

**Provided by NOFFZ sUTP 5017

Figure 7: NI PXI Platform IVI and Telematics Test Specifications
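A specification table like Figure 7 is also useful as data when scoping a new program. The following Python sketch checks a hypothetical product's requirements against an abridged copy of the table and reports standards the platform does not list. The requirement set, including the NavIC entry, is an invented example, and the check compares standard names only; it says nothing about performance specifications.

```python
from typing import Dict, Optional, Set

# Abridged from the Figure 7 table (Radio, Navigation, Video, Connectivity/Cellular rows).
CAPABILITIES: Dict[str, Set[str]] = {
    "Radio": {"AM/FM", "DAB/DAB+/DMB", "DRM", "HD Radio (IBOC)", "RDS/RBDS", "TMC-RDS", "SiriusXM"},
    "Navigation": {"BeiDou", "Galileo", "GLONASS", "GPS", "QZSS"},
    "Video": {"ATSC", "CMMB", "DTMB", "DVB-T", "DVB-T2", "ISDB-T"},
    "Connectivity/Cellular": {"Bluetooth", "Wi-Fi", "WCDMA", "LTE", "5G NR"},
}


def find_gaps(requirements: Dict[str, Set[str]],
              capabilities: Optional[Dict[str, Set[str]]] = None) -> Dict[str, Set[str]]:
    """Return, per test component, the required standards the platform does not list."""
    table = CAPABILITIES if capabilities is None else capabilities
    gaps: Dict[str, Set[str]] = {}
    for component, needed in requirements.items():
        missing = needed - table.get(component, set())
        if missing:
            gaps[component] = missing
    return gaps


if __name__ == "__main__":
    # Hypothetical product requirements; NavIC is deliberately absent from the table.
    product = {
        "Radio": {"AM/FM", "DAB/DAB+/DMB"},
        "Navigation": {"GPS", "Galileo", "NavIC"},
        "Connectivity/Cellular": {"Bluetooth", "LTE"},
    }
    print(find_gaps(product))
```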

The NI automated test ecosystem spans not only RF test but also audio, video, automotive bus, NFC and wireless charging, machine vision, and motion control, so it can meet evolving test requirements and automation needs.

Even if you need to completely outsource tester development, you can confidently manage the test system because it’s based on your own test architecture. The test architecture becomes a guiding principle or common language between your test organization and system integration partner, clarifying who owns which portion or layer from initial development through tester life-cycle management.

Figure 8: This Harman automotive infotainment production tester uses NOFFZ products based on NI software-defined test architecture.

Conclusion

As ever-increasing new features make it more challenging to validate a system and keep production on time, you may ask, “Do I trust my instrument vendor to innovate fast enough for my business needs?” More importantly, is it worth risking your business to find out? Whether you are buying or building your next test system, it is crucial to own the test architecture. Successful automotive OEMs and Tier 1 suppliers that have transformed a test organization into a strategic asset to improve decision making and remain competitive in the market started by owning their standard test architecture. Those companies have proven that a software-defined automated test architecture provides a guiding principle and strategy that align test organizations to reuse test assets and components, dynamically utilize resources, and span the product life cycle, which reduces quality costs and positively impacts company financials by getting better products to market faster.

Additional Resources

- Case Study: Advancing Electrification of Mazda Vehicles Through Software-Defined Automated Test System With 90% Test Cost Reduction

- Infotainment Testing and V2X Ecosystem

- Averna AST-1000 All-in-One Signal Source

- S.E.A. V2X Test Solution

- NOFFZ sUTP 5017 BSE

References

[1] “Automotive Infotainment,” ABI Research, accessed February 4, 2019, https://www.abiresearch.com/market-research/product/1018679-automotive-infotainment/.

[2] “Why Test Standardizations Fail,” Augusto Mandelli and Earle Donaghy, NI Business and Technology Assessment Team, accessed February 4, 2019, https://forums.ni.com/t5/NI-Test-Leadership-Council-Forum/TLF-2017-Presentation-Why-Test-Standardizations-Fail-and-What-to/gpm-p/3785320.

[3] “Automated Test Outlook 2011,” NI, accessed February 4, 2019, http://www.ni.com/gate/gb/GB_INFOTESTTRENDS1/US.

[4] “Industry Convergence—The Digital Industrial Revolution,” Thilo Koslowski, Gartner, accessed February 4, 2019, https://www.gartner.com/doc/2684516/industry-convergence--digital-industrial.