Pattern Matching Techniques

- Updated 2023-05-01


NI Vision implements two pattern matching methods: pyramidal matching and image understanding (low discrepancy sampling). Both methods use normalized cross-correlation as a core technique.

The pattern matching process consists of two stages: learning and matching. During the learning stage, the algorithm extracts gray value and/or edge gradient information from the template image. The algorithm organizes and stores the information in a manner that facilitates faster searching in the inspection image. In NI Vision, the information learned during this stage is stored as part of the template image.

During the matching stage, the pattern matching algorithm extracts gray value and/or edge gradient information from the inspection image (corresponding to the information learned from the template). Then, the algorithm finds matches by locating regions in the inspection image where the highest cross-correlation is observed.

Normalized Cross-Correlation

Normalized cross-correlation is the most common method for finding a template in an image. Because correlation is built on a long series of multiplications, it is time-consuming. Technologies such as MMX allow multiplications to run in parallel and reduce the overall computation time. To increase the speed of the matching process, reduce the size of the image and restrict the region of the image in which matching occurs. Pyramidal matching and image understanding are two ways to increase the speed of the matching process.
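To make the cost concrete, here is a minimal pure-Python sketch of zero-mean normalized cross-correlation and a brute-force sliding-window search. It uses the textbook definition, not NI Vision's optimized implementation, and the function names `ncc` and `match_template` are hypothetical. Note the nested loops: every offset costs work proportional to the number of template pixels, which is exactly the work that pyramids and sampling try to avoid.

```python
import math


def ncc(window, template):
    """Zero-mean normalized cross-correlation of two equal-sized 2D lists.

    Returns a value in [-1, 1]; 1 means the window is a linear copy of the template.
    """
    w = [v for row in window for v in row]
    t = [v for row in template for v in row]
    n = len(t)
    mw, mt = sum(w) / n, sum(t) / n
    num = sum((a - mw) * (b - mt) for a, b in zip(w, t))
    den = math.sqrt(sum((a - mw) ** 2 for a in w) * sum((b - mt) ** 2 for b in t))
    return num / den if den > 0 else 0.0  # flat window or template: no correlation


def match_template(image, template):
    """Slide the template over every valid offset; return the best (score, x, y)."""
    th, tw = len(template), len(template[0])
    ih, iw = len(image), len(image[0])
    best = (-2.0, -1, -1)
    for y in range(ih - th + 1):
        for x in range(iw - tw + 1):
            window = [row[x:x + tw] for row in image[y:y + th]]
            s = ncc(window, template)
            if s > best[0]:
                best = (s, x, y)
    return best
```

Because the score subtracts the means and divides by the standard deviations, a uniform gain or offset in brightness leaves it unchanged: `ncc([[3 * v + 5 for v in row] for row in t], t)` is 1, up to rounding, for any non-constant template `t`.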

Challenges in Scale-Invariant and Rotation-Invariant Matching

Normalized cross-correlation is a good technique for finding patterns in an image when the patterns in the image are not scaled or rotated. Typically, cross-correlation can detect patterns of the same size up to a rotation of 5° to 10°. Extending correlation to detect patterns that are invariant to scale changes and rotation is difficult.

For scale-invariant matching, you must resize the template to each candidate scale and repeat the correlation operation for every scale. This adds a significant amount of computation to your matching process. Normalizing for rotation is even more difficult. If a clue regarding rotation can be extracted from the image, you can simply rotate the template and perform the correlation. However, if the rotation is unknown, finding the best match requires correlating exhaustively rotated versions of the template.
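A quick operation count shows why brute force does not scale. The sketch below is a hypothetical helper, not part of NI Vision: it counts the multiplications needed to correlate a template at every position of an image, repeated once per rotation and per scale hypothesis. For simplicity it holds the template size fixed across scales, which understates the true cost of the larger scales.

```python
def correlation_cost(img_w, img_h, tmpl_w, tmpl_h, n_angles=1, n_scales=1):
    """Multiplications for brute-force correlation at every valid offset.

    Each (angle, scale) hypothesis repeats the full sliding-window search.
    The template size is assumed constant across scales (a simplification).
    """
    positions = (img_w - tmpl_w + 1) * (img_h - tmpl_h + 1)
    per_position = tmpl_w * tmpl_h  # one product per template pixel
    return positions * per_position * n_angles * n_scales
```

For a 640 × 480 image and a 64 × 64 template, this gives about 9.9 × 10^8 multiplications for a single orientation and about 3.5 × 10^11 for every whole degree from 0° to 359°. Shrinking both by a factor of four in each dimension (a 160 × 120 image and a 16 × 16 template) drops the single-orientation count to 3,897,600.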

By employing a coarse-to-fine approach to matching, and by using pyramids or image understanding, we can eliminate a significant amount of computation and achieve usable search times for handling rotated patterns. However, scale-invariant matching is not supported even when using pyramids or image understanding.

Pyramidal Matching

You can improve the computation time of pattern matching by reducing the size of the image and the template. In pyramidal matching, both the image and the template are sampled to smaller spatial resolutions using Gaussian pyramids. Each level keeps every other pixel in each dimension, so the image and the template are both reduced to one-fourth of their original size at every successive pyramid level.
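The reduction step can be sketched in a few lines of pure Python. This is a generic Gaussian-pyramid construction that assumes a 5-tap binomial kernel (1, 4, 6, 4, 1)/16 as the Gaussian approximation and clamped borders; NI Vision's exact kernel is not stated here. The `min_size` stopping rule in the hypothetical `build_pyramid` is only a stand-in for the way the learning stage limits the maximum usable pyramid level for a given template.

```python
# 5-tap binomial kernel: a common integer-weight approximation of a Gaussian.
KERNEL = (1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16)


def blur_1d(values):
    """Convolve a 1D sequence with KERNEL, clamping indices at the borders."""
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(KERNEL):
            j = min(max(i + k - 2, 0), n - 1)
            acc += weight * values[j]
        out.append(acc)
    return out


def pyramid_down(image):
    """One pyramid level: separable blur, then keep every other row and column."""
    rows = [blur_1d(row) for row in image]             # horizontal pass
    cols = [blur_1d(list(col)) for col in zip(*rows)]  # vertical pass
    blurred = [list(row) for row in zip(*cols)]        # back to row-major order
    return [row[::2] for row in blurred[::2]]


def build_pyramid(image, min_size=8):
    """Return [level 0, level 1, ...], stopping before a side drops below min_size."""
    levels = [image]
    while True:
        h, w = len(levels[-1]), len(levels[-1][0])
        if (h + 1) // 2 < min_size or (w + 1) // 2 < min_size:
            return levels
        levels.append(pyramid_down(levels[-1]))
```

Each level has roughly a quarter of the pixels of the level below it, so correlating at level *n* touches about 1/4^n of the image pixels and 1/4^n of the template pixels.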

In the learning phase, the algorithm automatically computes the maximum pyramid level that can be used for the given template and learns the data needed to represent the template and its rotated versions across all pyramid levels. Based on an analysis of the template data, the algorithm attempts to find the optimal pyramid level, that is, the one that gives the fastest and most accurate match. Two kinds of data can be used: gray values (based on pixel intensities) and gradients (based on selected edge information).

Gray Value Method

This method uses the normalized pixel gray values as features. Doing so ensures that no information is left out, which is helpful when the template does not contain structured information but has intricate textures or dense edges. However, this method performs poorly under occlusion and non-uniform illumination changes. Despite these limitations, it works in a wide variety of scenarios and is suitable for general use.

Gradient Method

This method uses filtered edge pixels as features. An edge image is computed from the supplied grayscale image, and a gradient intensity threshold is computed from an analysis of the template. All edge vectors stronger than the threshold are retained as features. Matching is based on vector correlation rather than normalized cross-correlation. Compared to the gray value method, this method is more resistant to occlusion and lighting intensity changes, and it is often faster because less data must be processed. However, because edges become weaker and less reliable at very low resolutions, this method must work at higher resolutions (lower pyramid levels) than the gray value method.
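The sketch below illustrates the idea behind the gradient method: keep only template pixels whose Sobel gradient magnitude exceeds a threshold, store their unit gradient directions, and score a position by the mean dot product with the image's unit gradients there. This normalized dot-product score is one common form of vector correlation and is used here as an assumption; NI Vision's exact formulation and its automatic threshold selection are not documented in this section, so the threshold is passed in by hand and all function names are hypothetical.

```python
import math


def sobel(image):
    """Return (gx, gy) Sobel gradient images; border pixels are left at 0."""
    h, w = len(image), len(image[0])
    gx = [[0.0] * w for _ in range(h)]
    gy = [[0.0] * w for _ in range(h)]
    a = image
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx[y][x] = (a[y - 1][x + 1] + 2 * a[y][x + 1] + a[y + 1][x + 1]) - \
                       (a[y - 1][x - 1] + 2 * a[y][x - 1] + a[y + 1][x - 1])
            gy[y][x] = (a[y + 1][x - 1] + 2 * a[y + 1][x] + a[y + 1][x + 1]) - \
                       (a[y - 1][x - 1] + 2 * a[y - 1][x] + a[y - 1][x + 1])
    return gx, gy


def edge_features(template, threshold):
    """Keep (x, y, ux, uy) unit gradient vectors whose magnitude exceeds threshold."""
    gx, gy = sobel(template)
    feats = []
    for y, row in enumerate(gx):
        for x, vx in enumerate(row):
            vy = gy[y][x]
            m = math.hypot(vx, vy)
            if m > threshold:
                feats.append((x, y, vx / m, vy / m))
    return feats


def vector_score(feats, img_gx, img_gy, ox, oy):
    """Mean dot product of template and image unit gradients at offset (ox, oy)."""
    total = 0.0
    for x, y, ux, uy in feats:
        vx, vy = img_gx[oy + y][ox + x], img_gy[oy + y][ox + x]
        m = math.hypot(vx, vy)
        if m > 0:  # flat image pixels contribute nothing
            total += (ux * vx + uy * vy) / m
    return total / len(feats) if feats else 0.0
```

Because only gradient directions enter the score, halving the contrast of the image or adding a constant brightness offset leaves the score at the true location unchanged, which illustrates the method's resistance to lighting intensity changes.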

Coarse-to-Fine Matching

The matching phase uses a coarse-to-fine approach, starting the search at the lowest possible resolution (the highest pyramid level). Because the search image and template are much smaller at this resolution, an exhaustive correlation-based search is affordable. However, sub-sampling loses some detail, so the match locations found at this level are not completely reliable. To compensate, the algorithm keeps a collection of promising candidate match locations with the best scores, rather than only the number of matches requested.

We then iterate through each of the lower levels of the pyramid, refining our choice at each stage by re-computing correlation scores. This approach limits all subsequent searches to small localized regions around the best match candidates, achieving a significant speed boost.
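The following pure-Python sketch shows the coarse-to-fine idea for translation only: an exhaustive NCC search at the coarsest level that keeps several candidates, followed by a small local search around each candidate at every finer level. For brevity it downsamples with 2 × 2 box averaging instead of a Gaussian filter, and the parameters `keep` (number of candidates) and `radius` (local search window) are illustrative, not NI Vision settings.

```python
import math


def ncc(img, tmpl, ox, oy):
    """Zero-mean normalized cross-correlation of tmpl with img at offset (ox, oy)."""
    th, tw = len(tmpl), len(tmpl[0])
    w = [img[oy + j][ox + i] for j in range(th) for i in range(tw)]
    t = [v for row in tmpl for v in row]
    mw, mt = sum(w) / len(w), sum(t) / len(t)
    num = sum((a - mw) * (b - mt) for a, b in zip(w, t))
    den = math.sqrt(sum((a - mw) ** 2 for a in w) * sum((b - mt) ** 2 for b in t))
    return num / den if den > 0 else 0.0


def half(img):
    """Halve resolution by 2 x 2 box averaging (stand-in for a Gaussian level)."""
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
             for x in range(0, len(img[0]) - 1, 2)]
            for y in range(0, len(img) - 1, 2)]


def coarse_to_fine(img, tmpl, levels=2, keep=5, radius=2):
    """Return (score, x, y) of the best translation-only match at full resolution."""
    imgs, tmpls = [img], [tmpl]
    for _ in range(levels):
        imgs.append(half(imgs[-1]))
        tmpls.append(half(tmpls[-1]))

    # Exhaustive search at the coarsest level, keeping several candidates
    # because sub-sampling makes the single best coarse peak unreliable.
    top_i, top_t = imgs[-1], tmpls[-1]
    cands = [(ncc(top_i, top_t, x, y), x, y)
             for y in range(len(top_i) - len(top_t) + 1)
             for x in range(len(top_i[0]) - len(top_t[0]) + 1)]
    cands = sorted(cands, reverse=True)[:keep]

    # At each finer level, re-score only a small window around each candidate.
    for lvl in range(levels - 1, -1, -1):
        im, tp = imgs[lvl], tmpls[lvl]
        max_x, max_y = len(im[0]) - len(tp[0]), len(im) - len(tp)
        refined = []
        for _, cx, cy in cands:
            ys = range(max(0, 2 * cy - radius), min(max_y, 2 * cy + radius) + 1)
            xs = range(max(0, 2 * cx - radius), min(max_x, 2 * cx + radius) + 1)
            refined.append(max((ncc(im, tp, x, y), x, y) for y in ys for x in xs))
        cands = sorted(refined, reverse=True)
    return cands[0]
```

With a 48 × 48 image, a 12 × 12 template, and two levels, the exhaustive stage evaluates 100 positions of a 3 × 3 template, and each finer level evaluates at most `keep × (2 × radius + 1)²` = 125 positions, instead of the 1,369 full-size positions a brute-force level-0 search would need.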

When searching for rotated matches, performing an exhaustive match for all possible rotations (from 0 to 360 degrees) is still prohibitively expensive, even at the lowest resolutions. Consequently, we first exhaustively find the best locations at a coarse angle step. The best locations among these coarse locations are then refined at a finer angle step size. After this, we follow the same method as above by refining the match location as well as angle over the subsequent lower pyramid levels.
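The angle search follows the same pattern. In the sketch below, `score(angle)` is a hypothetical callback standing in for the correlation of the rotated template at the current location. The function sweeps the full circle at a coarse step, keeps the best few angles, and re-examines a neighborhood of each at a fine step, wrapping around at 360°. The step sizes and the number of kept angles are illustrative.

```python
def coarse_to_fine_angle(score, coarse_step=10.0, fine_step=1.0, keep=3):
    """Return (best_score, best_angle) over the full 0-360 degree circle.

    score(angle_deg) is any function to maximize; here it stands in for the
    correlation of the rotated template at a fixed location.
    """
    n = int(round(360.0 / coarse_step))
    coarse = [i * coarse_step for i in range(n)]
    # Stage 1: exhaustive sweep at the coarse step; keep the best few angles.
    seeds = sorted(coarse, key=score, reverse=True)[:keep]
    # Stage 2: fine sweep within one coarse step on either side of each seed.
    k = int(round(coarse_step / fine_step))
    best = None
    for a0 in seeds:
        for i in range(-k, k + 1):
            a = (a0 + i * fine_step) % 360.0  # wrap around, e.g. -5 -> 355
            s = score(a)
            if best is None or s > best[0]:
                best = (s, a)
    return best
```

For example, `coarse_to_fine_angle(lambda a: math.cos(math.radians(a - 137.0)))` (with `math` imported) finds 137.0° with a score of 1.0. With the defaults, the callback runs 36 + 3 × 21 = 99 times instead of 360 times for a plain 1° sweep. Keeping several coarse angles rather than only the best one guards against a coarse step that lands between two nearby peaks.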

Tips and Tricks

Follow these recommendations to obtain the best performance from pyramidal matching:

- When choosing the Max Match Pyramid Level setting, use the highest possible pyramid level for the fastest execution times. In most cases, matching at level 0 or level 1 might be too expensive.

- When searching for rotated patterns, use the Angle Ranges setting to limit the search to the smallest possible angle range for faster performance and lower memory consumption. For example, if the match is known to be only slightly rotated from the base position, an angle range of -10° to 10° might suffice.

- The algorithm automatically handles the coarse-to-fine matching based on the Number of Matches Requested and Minimum Match Score. Configure them to obtain the best mix of speed and accuracy for your application.

- The Minimum Contrast setting specifies the minimum contrast a region must exhibit to be considered a candidate. Use it to speed up matching when the image background contains significant areas of low or zero contrast (uniform regions).

- If you want to find potential matches that may lie partially outside the image or the Region of Interest, turn on the Process Border Pixels setting. For larger templates with a well-defined Region of Interest, you may get a slight speed boost by turning this setting off.

- Use the Min Match Separation Distance, Min Match Separation Angle, and Max Match Overlap settings to control the distance, angular separation, and overlap between found matches.
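A minimal sketch of how separation limits can prune duplicate matches: sort by score and greedily keep a match only if it is not too close, in both position and angle, to a match already kept. This greedy non-maximum suppression is an assumption about how Min Match Separation Distance and Min Match Separation Angle interact (here a candidate counts as a duplicate only when it is within both limits); the exact rule NI Vision applies, and the Max Match Overlap test, are not modeled. The dictionary keys are hypothetical.

```python
import math


def angle_diff(a, b):
    """Smallest absolute difference between two angles in degrees (0 to 180)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def suppress_close_matches(matches, min_distance, min_angle):
    """Greedy non-maximum suppression over match dicts with (hypothetical)
    keys x, y, angle, score. Higher-scoring matches win."""
    kept = []
    for m in sorted(matches, key=lambda match: match["score"], reverse=True):
        duplicate = any(
            math.hypot(m["x"] - k["x"], m["y"] - k["y"]) < min_distance
            and angle_diff(m["angle"], k["angle"]) < min_angle
            for k in kept
        )
        if not duplicate:
            kept.append(m)
    return kept
```

Processing in descending score order means that when two candidates describe the same object, the stronger one is kept and the weaker one is discarded, regardless of the order in which the matcher reported them.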

Image Understanding (Low Discrepancy Sampling)

A pattern matching feature is a salient pattern of pixels that describes a template. Because most images contain redundant information, using all the information in the image to match patterns is time-intensive and inaccurate.

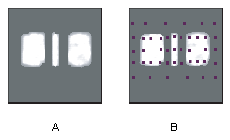

NI Vision uses a non-uniform sampling technique that incorporates image understanding to thoroughly and efficiently describe the template. This intelligent sampling technique specifically includes a combination of edge pixels and region pixels as shown in figure B. NI Vision uses a similar technique when the user indicates that the pattern might be rotated in the image. This technique uses specially chosen template pixels whose values—or relative changes in values—reflect the rotation of the pattern.

Intelligent sampling of the template both reduces redundant information and emphasizes the features, allowing an efficient yet robust cross-correlation implementation. NI Vision pattern matching can accurately locate objects that vary in size (±5%) and orientation (between 0° and 360°) and that have a degraded appearance.
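The section does not describe NI Vision's sampling scheme in detail. As a generic illustration of what "low discrepancy" means, the sketch below places sample points inside a template using the 2D Halton sequence (radical inverses in bases 2 and 3), a standard low-discrepancy sequence that covers an area more evenly than independent random points. It models only this spatial-coverage aspect; the image-understanding step that chooses between edge pixels and region pixels is not modeled, and the function names are hypothetical.

```python
def radical_inverse(i, base):
    """Van der Corput radical inverse: mirror the base-`base` digits of i
    around the radix point, giving a value in [0, 1)."""
    inv, f = 0.0, 1.0 / base
    while i > 0:
        inv += f * (i % base)
        i //= base
        f /= base
    return inv


def halton_samples(n, width, height):
    """First n points of the 2D Halton sequence (bases 2 and 3),
    scaled and rounded down to integer pixel coordinates."""
    return [(int(radical_inverse(i, 2) * width), int(radical_inverse(i, 3) * height))
            for i in range(1, n + 1)]
```

For example, the first 16 points in a 16 × 16 template land in 16 distinct columns: the base-2 coordinates of points 1 to 16, scaled to 16 pixels and rounded down, are exactly the integers 0 to 15. Independent random points would usually leave some columns empty and sample others twice.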

Coarse-to-Fine Matching

Like pyramidal matching, sampling-based matching employs a coarse-to-fine approach to eliminate excessive computation. First, coarse features are extracted from region pixel samples. Then, a small number of probable candidate locations are chosen, and finer features (primarily edge pixels) are computed at those locations. The coarse match candidates are then refined using these fine features, producing a revised list of matches.
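A two-stage search over sparse samples can be sketched as follows. `sampled_ncc` evaluates normalized cross-correlation only at a list of template sample points, and `two_stage_search` scores every offset with a small coarse sample set, keeps the best `keep` offsets, and re-ranks those survivors with a denser fine set. Both names are hypothetical, and the sketch simplifies the description above: it only re-scores candidates rather than refining their positions, and its fine set is a generic point list rather than edge pixels chosen by image analysis.

```python
import math


def sampled_ncc(img, tmpl, samples, ox, oy):
    """Zero-mean NCC computed only at the template sample points (x, y)."""
    t = [tmpl[y][x] for x, y in samples]
    w = [img[oy + y][ox + x] for x, y in samples]
    n = len(samples)
    mt, mw = sum(t) / n, sum(w) / n
    num = sum((a - mw) * (b - mt) for a, b in zip(w, t))
    den = math.sqrt(sum((a - mw) ** 2 for a in w) * sum((b - mt) ** 2 for b in t))
    return num / den if den > 0 else 0.0


def two_stage_search(img, tmpl, coarse_samples, fine_samples, keep=5):
    """Return (score, (x, y)) after a cheap coarse pass and a fine re-ranking."""
    th, tw = len(tmpl), len(tmpl[0])
    offsets = [(x, y) for y in range(len(img) - th + 1)
               for x in range(len(img[0]) - tw + 1)]
    # Stage 1: few samples, every offset.
    survivors = sorted(
        offsets,
        key=lambda o: sampled_ncc(img, tmpl, coarse_samples, o[0], o[1]),
        reverse=True,
    )[:keep]
    # Stage 2: many samples, survivors only.
    return max((sampled_ncc(img, tmpl, fine_samples, x, y), (x, y)) for x, y in survivors)
```

The expensive fine set is evaluated at only `keep` offsets, so the total cost is dominated by the cheap coarse pass.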

Matching under rotation also follows a similar paradigm to pyramidal matching. Intelligent sampling allows us to compactly represent template samples corresponding to different angles. Initially, we find matches using coarse sampling and at a coarse angle step size. The best coarse angle match locations are then refined at a finer angle step size and using finer features.
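One reason sampling helps with rotation is that a rotated template can be represented by rotating the sample coordinates instead of resampling a whole rotated image. The sketch below precomputes, for each angle step, the integer offsets of every sample point relative to the template center. It is a hypothetical illustration of a compact rotated representation, not NI Vision's stored format; at match time, a sample's image position would be the candidate center plus its offset.

```python
import math


def rotate_samples(samples, angle_deg, cx, cy):
    """Rotate sample points (x, y) about (cx, cy); return rounded (dx, dy) offsets."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    offsets = []
    for x, y in samples:
        dx, dy = x - cx, y - cy
        offsets.append((round(c * dx - s * dy), round(s * dx + c * dy)))
    return offsets


def build_rotated_tables(samples, cx, cy, step_deg):
    """Learning-stage precomputation: one offset list per integer angle step."""
    return {a: rotate_samples(samples, a, cx, cy) for a in range(0, 360, step_deg)}
```

With, for instance, 100 sample points and a 10° step, the whole table holds 36 × 100 offset pairs, which is far smaller than 36 rotated copies of a template containing thousands of pixels.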

Limitations

Low discrepancy sampling extracts the most significant information to represent an image. While this leads to a very sparse and efficient representation in most cases, certain types of images are known to cause problems:

- Templates consisting mostly of large regions of similar gray values, with very little structure, can sometimes exhibit inconsistent behavior because too few sample points are selected.

- Templates with skewed or long aspect ratios (for example, 1:6) may produce inconsistent results when searching for rotated matches.

- Very small templates may contain too few samples for reliable training.

If these limitations negatively impact the performance of your application, use a pyramidal matching method.

Pyramid Pre-Processing

Pyramid Pattern Matching provides three types of Pre-Processing options:

- Sobel and Log

- Sobel

- Non-Linear Diffusion Filter

Sobel and Log

This filter applies Sobel kernel convolution to the template and match image pyramids. Use this option to enhance low-contrast regions and to consider only the edge features of the template.

Figure panels: Original Image, After Sobel and Log.

Sobel

This filter applies Sobel kernel convolution to the template and match image pyramids. Use this filter to match using only the edge features of the template.

Figure panels: Original Image, After Sobel.
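A pure-Python sketch of the Sobel gradient magnitude, with an optional logarithmic step, shows what these two options compute. The section does not define the "Log" part of Sobel and Log; this sketch assumes it is a compression of the form log(1 + magnitude), which raises weak edges relative to strong ones and would be consistent with enhancing low-contrast regions. Treat that interpretation, and the function name, as assumptions.

```python
import math


def sobel_magnitude(image, use_log=False):
    """Sobel gradient magnitude of a 2D list; border pixels are left at 0.

    use_log=True applies log(1 + magnitude), an ASSUMED reading of "Sobel and Log".
    """
    h, w = len(image), len(image[0])
    a = image
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (a[y - 1][x + 1] + 2 * a[y][x + 1] + a[y + 1][x + 1]) - \
                 (a[y - 1][x - 1] + 2 * a[y][x - 1] + a[y + 1][x - 1])
            gy = (a[y + 1][x - 1] + 2 * a[y + 1][x] + a[y + 1][x + 1]) - \
                 (a[y - 1][x - 1] + 2 * a[y - 1][x] + a[y - 1][x + 1])
            m = math.hypot(gx, gy)
            out[y][x] = math.log1p(m) if use_log else m
    return out
```

For example, edges with magnitudes 10 and 100 differ by a factor of 10 after the Sobel step alone, but only by about a factor of 1.9 after the log step (log(101)/log(11) ≈ 1.92), so faint edges carry relatively more weight in the match.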

Non-Linear Diffusion Filter

This filter applies an anisotropic diffusion filter to the template and match image pyramids. Use this filter to reduce noise and to enhance edge contrast. The following images illustrate that the Non-Linear Diffusion filter can reduce noise without sacrificing edge contrast.

Figure panels: Original Image, Gaussian Pyramid at Level 2, Non-Linear Diffusion Pyramid at Level 2.

For more information, see the wiki page on anisotropic diffusion.
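The sketch below implements the classic Perona-Malik explicit scheme, the textbook form of anisotropic diffusion, with the conductance g(d) = exp(-(d/κ)²). It diffuses strongly across small intensity differences (noise) and hardly at all across large ones (edges). It is a generic illustration; the section does not say which diffusion function, parameters, or number of iterations NI Vision's Non-Linear Diffusion filter uses.

```python
import math


def perona_malik(image, iterations=10, kappa=10.0, dt=0.2):
    """Perona-Malik anisotropic diffusion on a 2D list (explicit 4-neighbor scheme).

    Conductance g(d) = exp(-(d / kappa) ** 2): near 1 for small neighbor
    differences (noise gets smoothed), near 0 across large ones (edges are kept).
    dt <= 0.25 keeps this explicit update stable.
    """
    h, w = len(image), len(image[0])
    u = [[float(v) for v in row] for row in image]

    def g(d):
        return math.exp(-((d / kappa) ** 2))

    for _ in range(iterations):
        nxt = [row[:] for row in u]
        for y in range(h):
            for x in range(w):
                flux = 0.0
                for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nx, ny = x + dx, y + dy
                    if 0 <= nx < w and 0 <= ny < h:  # no flow across the image border
                        d = u[ny][nx] - u[y][x]
                        flux += g(d) * d
                nxt[y][x] = u[y][x] + dt * flux
        u = nxt
    return u
```

On a noisy step edge (±1 checkerboard noise on levels 0 and 100), ten iterations with κ = 10 flatten the noise while the jump of about 100 between the two halves survives. A linear (isotropic) blur would have smeared that jump across several pixels.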

Presets

Presets provide a simple, descriptive way to set the advanced parameters of the different Pattern Matching algorithms. These advanced parameters fine-tune an algorithm for different match requirements. A preset is a set of advanced parameter values that has been tested to work across a broad range of match requirements.

The preset (set of advanced parameter values) appropriate for the chosen requirement is stored in the template image, and its values are used automatically during matching. As a result, matching results improve over the defaults without you having to understand the advanced parameters and their implications for matching. The preset stored in the template is determined by the selected Use-Case and Priority, which you should choose based on your match requirement.

The following options are provided in Use-Case:

| Preset Option | Description |
| --- | --- |
| Overlapping | Uses Advanced Options appropriate for match images that contain overlapping objects. |
| Low Contrast | Uses Advanced Options appropriate for templates with large dimensions. |
| Screenshot | Uses Advanced Options appropriate for screenshots or screen captures that are pixel-accurate in resolution (they are not captured with a camera) and usually have templates with small dimensions. When the Screenshot preset is selected, the match algorithm should be Grayscale Value Pyramid or Low Discrepancy Sampling. |

The following options are provided in Priority:

| Preset Option | Description |
| --- | --- |
| Accurate | Uses Advanced Options that give a higher priority to Match Accuracy than to Match Speed. |
| Fast | Uses Advanced Options that give equal priority to Match Accuracy and Match Speed. |
| Very Fast | |

The workflow for Presets with various Algorithms is depicted in the following images.

Figure: Workflow for Low Discrepancy, Grayscale, and Gradient Pyramid Algorithms.

Figure: Workflow for Geometric Pattern Matching.

Sub-pixel Refinement

In both pyramidal and low-discrepancy sampling-based pattern matching, you can subject the refined match candidates to a final refinement stage that finds sub-pixel accurate locations and sub-degree accurate angles. This stage relies on specially extracted edge and pixel information from the template and uses interpolation to obtain a highly accurate match location and angle.
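The section does not say which interpolation NI Vision uses. A common choice for sub-pixel peak location is to fit a parabola through the best integer score and its two neighbors; the sketch below does this in one dimension and applies it separately along x and y of a hypothetical score map. The same 1D fit can refine an angle from the scores at three neighboring angle steps.

```python
def parabolic_peak(s_minus, s_zero, s_plus):
    """Vertex of the parabola through (-1, s_minus), (0, s_zero), (1, s_plus).

    Returns (offset, peak_score). When s_zero is the largest of the three,
    the offset lies in [-0.5, 0.5].
    """
    denom = s_minus - 2.0 * s_zero + s_plus
    if denom == 0:  # flat or linear: no curvature to interpolate
        return 0.0, s_zero
    offset = 0.5 * (s_minus - s_plus) / denom
    peak = s_zero - 0.25 * (s_minus - s_plus) * offset
    return offset, peak


def refine_location(scores, x, y):
    """Sub-pixel (x, y) from a 2D score map scores[row][col], fitting x and y
    independently. (x, y) must be an interior integer peak."""
    dx, _ = parabolic_peak(scores[y][x - 1], scores[y][x], scores[y][x + 1])
    dy, _ = parabolic_peak(scores[y - 1][x], scores[y][x], scores[y + 1][x])
    return x + dx, y + dy
```

Fitting each axis separately is the simplest option; it is exact when the score surface is a separable quadratic near the peak and a close approximation for smooth, roughly symmetric peaks.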

Once the refined locations (with or without sub-pixel refinement) are obtained, both pattern matching methods compute a final, accurate score using most of the significant information in the template.