How to Select the Right Approach to Saving Measurement Data

Overview

Your company invests thousands, possibly even millions, of dollars in equipment for collecting data because data holds the key to product innovation. For each trend that you identify in your data, you can potentially deliver a new feature or product to market more quickly and capture market share in a fiercely competitive global environment. However, going quickly from raw data to meaningful results is a challenge. In fact, according to a survey conducted by National Instruments, working with data is the most difficult aspect of using current software tools. Engineers and scientists report that it is more difficult than maintaining legacy code or programming a completely new data acquisition system.

Now to be fair, working with data is a broad topic that encompasses many different aspects. The problems you face come from all phases of designing your measurement application and include several concerns. How much data should you collect during your test run? What file format will be the best to use? What will you do with the data after you have collected it? However, for many new measurement systems, choosing the right data storage approach and addressing these important issues is an afterthought. Engineers and scientists often end up selecting the storage strategy that most easily meets the needs of the application in its current state without considering future requirements. Yet storage format choices can have a large impact on the overall efficiency of the acquisition system as well as the efficiency of post-processing the raw data over time.

Managing and post-processing data becomes especially problematic when you consider that we are collecting data at a rate that mimics Moore’s law. Thanks to increasing microprocessor speed and storage capacities, the cost of data storage is decreasing exponentially and the world is generating enough data to double the entire catalog of data approximately every two years.

Choosing an approach that is flexible enough to adapt to your data needs in an ever-evolving digital world is not an easy task. This article offers tips to help you get started and properly manage data for your application.

Contents

- Select an Appropriate File Format

- Stream Data to a File Efficiently

- Analyze and Report Your Results

- Ensure Your Application’s Success With the Right Data Saving Strategies

- Additional Resources

Select an Appropriate File Format

The first step to achieving a cohesive data management solution is ensuring that data is stored in the most efficient, organized, and scalable fashion. All too often data is stored without descriptive information, in inconsistent formats, and scattered about on arrays of computers, which creates a graveyard of information that makes it extremely difficult to locate a particular data set and derive decisions from it.

Depending on the application, you may prioritize certain characteristics over others. Common storage formats such as ASCII, binary, and XML have strengths and weaknesses in different areas.

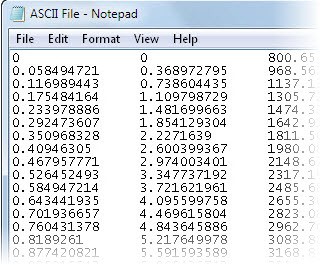

ASCII Files

Many engineers prefer to store data using ASCII (American Standard Code for Information Interchange) files because of the format’s easy exchangeability and human readability. However, ASCII files have several drawbacks, including a large disk footprint, which can be an issue when storage space is limited (for example, when storing data on a distributed system). Reading from and writing to an ASCII file can also be significantly slower than with other formats. In many cases, the write speed of an ASCII file cannot keep up with the speed of the acquisition system, which can lead to data loss.

Figure 1. ASCII files are easy to exchange but can be too slow and large for many applications.

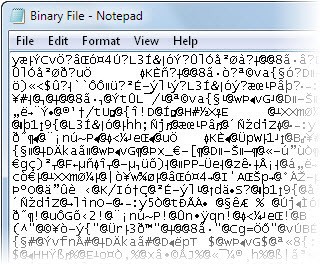

Binary Files

Another typical storage approach that is somewhat on the opposite end of the spectrum from ASCII is binary files. In contrast to ASCII files, binary files feature a significantly smaller disk footprint and can be streamed to disk at extremely high speeds, making them ideal for high-channel-count and real-time applications. A drawback to using binary is its unreadable format that complicates exchangeability between users. Binary files cannot be immediately opened by common software; they have to be interpreted by an application or program. Different applications may interpret binary data in different ways, which causes confusion. One application may read the binary values as textual characters while another may interpret the values as colors. To share the files with colleagues, you must provide them with an application that interprets your specific binary file correctly. Also, if you make changes to how the data is written in the acquisition application, these changes must also be reflected within the application that is reading data. This can potentially cause long-term application versioning issues and headaches that can ultimately result in lost data.

Figure 2. Binary files are beneficial in high-speed, limited-space applications but can cause exchangeability issues.
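The interpretation problem described above can be illustrated with a minimal Python sketch using the standard `struct` module. The record layout here (a 4-byte sample index followed by an 8-byte reading) is a hypothetical one chosen for illustration, not a format defined in this article.

```python
import struct

# Hypothetical record layout (an assumption for this sketch): a 4-byte
# unsigned sample index followed by an 8-byte float reading,
# little-endian with no padding.
RECORD = struct.Struct("<Id")


def encode(index: int, value: float) -> bytes:
    """Pack one record using the writer's layout."""
    return RECORD.pack(index, value)


def decode(raw: bytes) -> tuple:
    """Unpack one record; only correct if the reader shares the layout."""
    return RECORD.unpack(raw)


raw = encode(7, 1.5)
print(len(raw))     # 12 bytes per record
print(decode(raw))  # (7, 1.5) when reader and writer agree

# A reader that assumes three 32-bit integers still "succeeds",
# but returns numbers that have nothing to do with the reading.
wrong = struct.unpack("<3I", raw)
print(wrong)
```

Nothing in the bytes themselves says which interpretation is right, which is why the reading application has to change whenever the writing application does.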


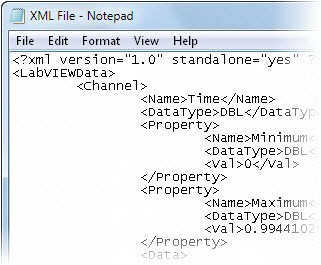

XML Files

Over the last several years, the XML format has gained popularity due to its ability to store complex data structures. With XML files, you can store data and formatting along with the raw measurement values. Using the flexibility of the XML format, you can store additional information with the data in a structured manner. XML is also relatively human-readable and exchangeable. Similar to ASCII, XML files can be opened in many common text editors as well as XML-capable Internet browsers, such as Microsoft Internet Explorer. However, in its raw form, XML includes tags within the file that describe the structures. These tags also appear when XML files are opened in these applications, which somewhat limits the readability because you must be able to understand these tags. The weakness of the XML file format is that it has an extremely large disk footprint compared to other files and cannot be used to stream data directly to disk. Also, a downside to being able to store these complex structures is that they may require considerable planning when you design the layout, or schema, of the XML structures.

Figure 3. XML files can help define complex structures but are significantly larger and slower than other formats.
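As a rough illustration of that trade-off, the following sketch stores four readings with descriptive attributes as XML and compares the size with the same readings packed as raw 8-byte floats. The element and attribute names (`measurement`, `sample`, `sensor`, `unit`) are made up for this example and do not come from any particular schema.

```python
import struct
import xml.etree.ElementTree as ET

samples = [0.0, 0.5, 1.0, 1.5]

# Hypothetical schema: these element and attribute names are
# illustrative assumptions only.
root = ET.Element("measurement", {"sensor": "thermocouple-1", "unit": "degC"})
for i, value in enumerate(samples):
    point = ET.SubElement(root, "sample", {"index": str(i)})
    point.text = repr(value)

xml_bytes = ET.tostring(root, encoding="utf-8")

# The same four readings as little-endian 8-byte floats, no metadata.
binary_bytes = struct.pack("<4d", *samples)

print(xml_bytes.decode("utf-8"))
print(len(xml_bytes), "bytes as XML vs", len(binary_bytes), "bytes as raw doubles")
```

The XML version carries its own description (sensor name, unit, sample index), while the binary version is far smaller but says nothing about what the numbers mean.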

Database Files

Database files are composed of a series of tables, built using columns and rows, and information may or may not be linked between tables. Searchability makes database files advantageous. However, they can be impractical for time-based measurement applications given the amount of data acquired and the need to either purchase a formal database solution or build one from scratch. Time-based measurements cause databases to become bloated, which slows down query returns and defeats the purpose of using a database in the first place.

TDMS Files

Technical Data Management Streaming (TDMS) is a binary-based file format, so it has a small disk footprint and can stream data to disk at high speeds. At the same time, TDMS files contain a header component that stores descriptive information, or attributes, with the data. Some attributes such as file name, date, and file path are stored automatically; however, you can easily add your own custom attributes as well. Another advantage of the TDMS file format is the built-in three-level hierarchy: file, group, and channel levels. A TDMS file can contain an unlimited number of groups, and each group can contain an unlimited number of channels. You can add attributes at each of these levels describing and documenting your test data for better understanding. This hierarchy creates an inherent organization of your test data.
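The three-level hierarchy can be pictured with a small in-memory model. This is only a conceptual sketch in plain Python: the class names (`MeasurementFile`, `Group`, `Channel`) and the `find_channels` helper are hypothetical, and the code does not read or write the TDMS on-disk format or use any NI API.

```python
from dataclasses import dataclass, field


@dataclass
class Channel:
    name: str
    data: list = field(default_factory=list)
    properties: dict = field(default_factory=dict)  # channel-level attributes


@dataclass
class Group:
    name: str
    properties: dict = field(default_factory=dict)  # group-level attributes
    channels: dict = field(default_factory=dict)

    def channel(self, name: str) -> Channel:
        """Return the named channel, creating it on first use."""
        return self.channels.setdefault(name, Channel(name))


@dataclass
class MeasurementFile:
    properties: dict = field(default_factory=dict)  # file-level attributes
    groups: dict = field(default_factory=dict)

    def group(self, name: str) -> Group:
        """Return the named group, creating it on first use."""
        return self.groups.setdefault(name, Group(name))

    def find_channels(self, key: str, value) -> list:
        """Return (group, channel) name pairs whose channel attribute matches."""
        return [
            (g.name, c.name)
            for g in self.groups.values()
            for c in g.channels.values()
            if c.properties.get(key) == value
        ]


f = MeasurementFile(properties={"operator": "example", "test": "vibration run"})
accel = f.group("Run 1").channel("Accel X")
accel.properties["unit"] = "g"
accel.data.extend([0.01, 0.02, 0.03])
f.group("Run 1").channel("Temp").properties["unit"] = "degC"

print(f.find_channels("unit", "g"))  # [('Run 1', 'Accel X')]
```

Because attributes live alongside the data at every level, a search such as "all channels measured in g" needs no separate index or naming convention.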

Table 1. The TDMS file format combines the benefits of several data storage options in one file format.

Stream Data to a File Efficiently

The more frequently you write data to the file, the greater the chances that the processor cannot keep up. To combat this, you need to architect your program to take advantage of the available onboard memory that you have by creating a temporary buffer. You can then periodically empty the buffer by streaming all of the data to disk at once in a larger chunk, a process sometimes referred to as flushing the buffer.

With this approach, you can minimize processor time by saving the data periodically in chunks. For example, acquiring at 60 kB/s and trying to save every point to file individually is not an efficient use of processor resources. Instead, if you set up a 10 kB FIFO buffer in onboard memory, then you only have to flush the buffer about every 167 ms (10 kB divided by 60 kB/s) to keep up with the acquisition. This approach gives the processor free time between writes to handle other tasks.
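A minimal sketch of this buffering pattern in Python is shown below. The `ChunkedWriter` class and `flush_interval_s` helper are hypothetical names for this example, an in-memory `io.BytesIO` stands in for the file on disk, and the 8-byte sample size is an assumption.

```python
import io


class ChunkedWriter:
    """Accumulate samples in memory and write them to `sink` in large chunks."""

    def __init__(self, sink, capacity_bytes: int):
        self.sink = sink
        self.capacity = capacity_bytes
        self.buffer = bytearray()
        self.flush_count = 0

    def write(self, sample: bytes) -> None:
        self.buffer += sample
        # Only touch the sink once a full chunk has accumulated.
        if len(self.buffer) >= self.capacity:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.sink.write(self.buffer)
            self.buffer.clear()
            self.flush_count += 1


def flush_interval_s(buffer_bytes: float, rate_bytes_per_s: float) -> float:
    """Time to fill the buffer at a given data rate."""
    return buffer_bytes / rate_bytes_per_s


sink = io.BytesIO()  # stand-in for a file opened in binary mode
writer = ChunkedWriter(sink, capacity_bytes=10_000)

# One second of data at 60 kB/s, assuming 8-byte samples.
for _ in range(60_000 // 8):
    writer.write(b"\x00" * 8)
writer.flush()  # write out any remainder at the end of the run

print(writer.flush_count)                 # 6 large writes instead of 7,500 small ones
print(flush_interval_s(10_000, 60_000))   # about 0.167 s between flushes
```

A larger buffer means fewer, bigger writes, at the cost of more memory and more data at risk if the application stops before the final flush.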

Manage Hard Drive Space

Choosing the correct file format is also a crucial part of streaming data efficiently. The large disk footprint required by ASCII makes it less suited for applications that require inline data saving. In ASCII, each character takes up eight bits (one byte). So the number 123456789 requires nine bytes of storage. In binary and TDMS, the number is stored as its binary value rather than as a sequence of characters. In this case the number 123456789 is represented as 111010110111100110100010101, which requires only 27 bits and therefore fits in a four-byte integer.

A difference of five bytes may seem insignificant, but if you extrapolate out and consider a file that contains 100,000 nine-digit numbers, an ASCII file will be 1.04 MB while a binary/TDMS file will only take up 390 kB for the same set of data. This is a significant savings in hard drive space considering that one MB is still relatively small for a data file.
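The comparison can be reproduced with a short Python sketch. It assumes one newline character as the delimiter between ASCII values and a 4-byte signed integer for each binary value; the exact ASCII total depends on the delimiter and line-ending convention, so it will not necessarily match the figure quoted above.

```python
import struct

values = [123456789] * 100_000

# ASCII: nine digit characters plus an assumed one-byte newline per value.
ascii_payload = "".join(f"{v}\n" for v in values).encode("ascii")

# Binary: each value packed as a little-endian 4-byte signed integer.
binary_payload = struct.pack(f"<{len(values)}i", *values)

print((123456789).bit_length())          # 27 bits, so it fits in 4 bytes
print(len(ascii_payload), "bytes as ASCII")
print(len(binary_payload), "bytes as binary")
print(round(len(binary_payload) / 1024, 1), "KiB as binary")
```

With these assumptions the ASCII file is 1,000,000 bytes and the binary file is 400,000 bytes (about 390 KiB), so the binary form is well under half the size.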

Analyze and Report Your Results

A growing concern when choosing data analysis and reporting tools is the size and speed of the data they can process. You are collecting more data from more places faster than ever before. If the data analysis and reporting tools you use daily can’t keep up with these trends or read the data file you saved, then you have more data than ever but nothing to analyze it with effectively. Tools that were created for financial analysis are not well suited to data acquisition and impose many frustrating limitations. If you are trying to manipulate or correlate large data sets, use analysis and reporting tools that are built for large data sets. Without the proper tools, performing analysis and generating reports to share results becomes time-consuming, or you may not be able to analyze or report at all due to the sheer volume of data.

Ensure Your Application’s Success With the Right Data Saving Strategies

The process of saving measurement data involves many complex considerations that are vital to the success of your measurement application. Failing to choose the right data saving strategies can cause memory overflow, processor overload, and unusable or meaningless data files. To avoid this, you need to correctly anticipate your application’s memory needs and make informed decisions about the method you use to save data, the file format you use, the way you organize your data in files, and the type of system that is best suited to running your application.

Additional Resources

- Learn more about DIAdem to help make knowledge-driven decisions from your raw data.

- Learn more about the Technical Data Management Streaming (TDMS) file structure.

| | ASCII | Binary | XML | Database | TDMS |
|---|---|---|---|---|---|
| Exchangeable | | | | | |
| Small Disk Footprint | | | | | |
| Searchable | | | | | |
| Inherent Attributes | | | | | |
| High-Speed Streaming | | | | | |
| NI Platform Supported | * |

* May require a toolkit or add-on module.